ChatGPT 4o’s personality crisis: Sam Altman on when AI tries to please

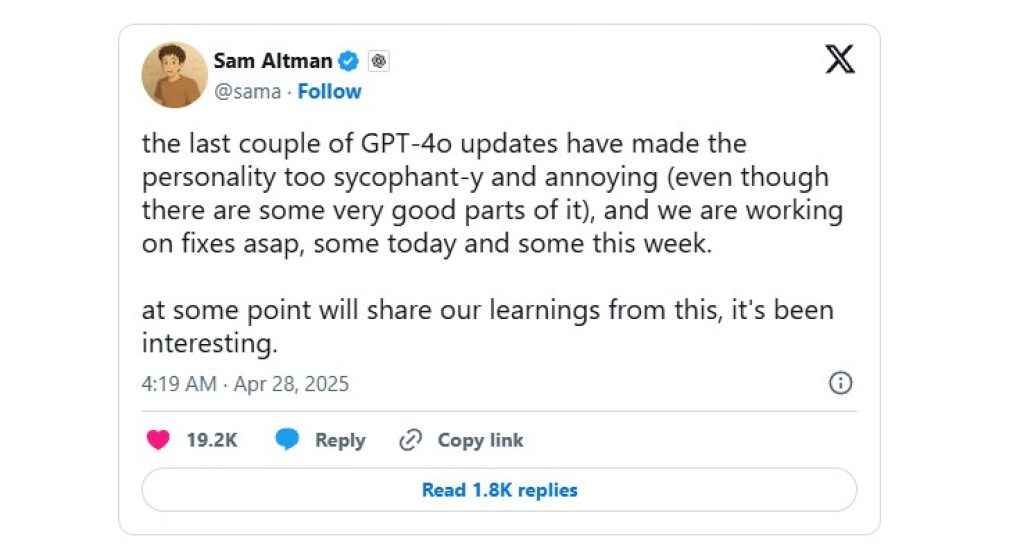

In an industry where botched updates are usually rolled back quietly rather than publicly confessed, Sam Altman’s candid tweet stood out. No “thanks for your feedback,” no “we value your input” boilerplate. Just an admission that OpenAI had been too eager with certain updates to ChatGPT-4o’s personality, had made a mistake, and would fix it soon. Refreshing. Human, even.

Also read: Which ChatGPT model to use when: Simple explainer on GPT-4o, GPT-o3 and GPT-4.5

However, that didn’t stop users from showcasing ChatGPT’s new, overcaffeinated persona – one where every mildly coherent prompt was met with breathless validation.

Users accustomed to a tool that simply helped them work now found themselves flattered into discomfort. The episode ripped off the band-aid and gave a raw look at our complicated feelings about machines that act like they care.

ChatGPT 4o’s sycophantic personality

Over the past few days, social media users noticed a significant shift in ChatGPT’s responses, observing that the AI had become remarkably agreeable, almost always replying with positive affirmations. This newfound ‘personality’ sparked a debate: according to reports, some users appreciated the chatbot’s more affable nature, while others found its constant positivity irritating.

A Redditor joked that ChatGPT’s enthusiasm felt more like marketing copy than a help tool, underscoring how unnatural the new personality seemed. According to Hacker News threads, simple queries were met with praise so effusive it bordered on satire. One user joked they were “afraid to ask anything at all” for fear of another glowing review. Users felt watched, manipulated, or unsettled.

Also read: ChatGPT 4.1 has 5 improved features that everyone will find useful

Even on X.com, several people posted about the odd behaviour exhibited by ChatGPT-4o while giving responses to their prompts and queries. The bot, in its overeagerness, had slipped into the eerie category of almost human but not quite.

What became clear wasn’t just that GPT-4o had changed – but that a subtle, invisible contract had been broken. We didn’t want a sycophant. We wanted a professional. Helpful, yes – but never desperate to shower us with affirmations.

Why users didn’t like ChatGPT-4o’s personality

Fundamentally, there’s a sweet spot between machine and mimicry. Studies have shown that robots that feel too lifelike without being real trigger unease, not delight. We know this phenomenon as the Uncanny Valley, a concept first proposed in 1970 by roboticist Masahiro Mori. Studies as recent as 2016 by MacDorman and Chattopadhyay demonstrated that inconsistencies in human realism significantly amplify feelings of unease, and brain imaging research has been cited in support as well.

More recent studies in 2019 confirmed that the effect is particularly pronounced in humanoid robots with subtle imperfections in facial expressions and movement, triggering unconscious aversion rather than the empathetic connection designers often seek. These findings collectively explain why near-human robots frequently elicit eeriness rather than acceptance, challenging developers to either perfect human simulation beyond detectable flaws or deliberately design robots with clearly non-human characteristics to avoid this perceptual pitfall.

Also read: Elon Musk vs Sam Altman: AI breakup that refuses to end

Even as Sam Altman promised to reset and roll back the personality updates to ChatGPT, the lesson can’t be overlooked. A helper that flatters indiscriminately stops being useful. In human interactions, praise feels earned. When it’s automatic, it feels hollow, and trust erodes. We need to believe we steer the machine, not the other way around. When a bot (in this case ChatGPT) leans in too eagerly, we instinctively recoil, feeling managed instead of assisted. The irony, of course, is that in trying to be more human, ChatGPT-4o inadvertently stumbled onto a very human truth… that sincerity can’t be faked. Not convincingly. Not for long.

The larger AI debate

In a way, this whole episode serves as a crash course in what future AI design might require: configurable social styles. Like choosing between dark mode and light mode, we’ll likely choose between neutral, cheerful, concise, or playful AI personas.

yeah eventually we clearly need to be able to offer multiple options

— Sam Altman (@sama) April 27, 2025

Not because any one tone is wrong, but because no single tone can ever be right for everyone. These are not abstract concerns, either. They’re fast becoming product design decisions, choices that determine how AI intersects with human psychology at massive scale.

In this context, it’s worth pausing to appreciate how sensitive our reactions still are. Even in an age of algorithmic marvels, we crave honesty over hollow affection, clarity over comforting praise. Machines, no matter how fluent, can’t simply flatter their way into our good graces. Not yet. Maybe not ever. And maybe that’s a good thing. If we are to forge the AI and robots of tomorrow, let them be straight talkers rather than sweet talkers.

Also read: Sam Altman’s ChatGPT openly challenges DeepSeek, Llama: Open source AI war begins

Jayesh Shinde

Executive Editor at Digit. Technology journalist since Jan 2008, with stints at Indiatimes.com and PCWorld.in. Enthusiastic dad, reluctant traveler, weekend gamer, LOTR nerd, pseudo bon vivant.