Object Classification Using CNN Across Intel Architecture

Abstract

In this work, we present the computational performance and classification accuracy of object classification using the VGG16 network on Intel® Xeon® processors and Intel® Xeon Phi™ processors. The results can serve as criteria for selecting and optimizing the number of iterations in different experimental setups using these processors, including multinode architectures. Because the objective is to evaluate accuracy for real-time logo detection from video, the experiments use a logo image dataset suited to measuring logo classification accuracy.

1. Introduction

Deep learning (DL), which refers to a class of neural network models with deep architectures, forms an important and expressive family of machine learning (ML) models. Modern deep learning models, such as convolutional neural networks (CNNs), have achieved notable successes in a wide spectrum of machine learning tasks including speech recognition [1], visual recognition [2], and language understanding [3]. The explosive success and rapid adoption of CNNs by the research community is largely attributable to high-performance computing hardware such as the Intel® Xeon® processor, Intel® Xeon Phi™ processor, and graphics processing units (GPUs), as well as a wide range of easy-to-use open source frameworks including Caffe*, TensorFlow*, the Microsoft Cognitive Toolkit (CNTK*), Torch*, and so on.

2. Setting up a Multinode Cluster

The Intel® Distribution for Caffe* is designed for both single node and multinode operation. There are two general approaches to parallelization (data parallelism and model parallelism), and Intel uses data parallelism.

Data parallelism uses the same model on every node but feeds each node different data. The total batch size in a single iteration therefore equals the sum of the individual batch sizes of all nodes. For example, if a network is trained on three nodes, each with a batch size of 64, the total batch size in a single iteration of the stochastic gradient descent algorithm is 3 × 64 = 192. Model parallelism uses the same data on all nodes, but each node is responsible for estimating different parameters; the nodes then exchange their estimates with each other to arrive at the final estimate for all parameters.
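The batch-size arithmetic above can be illustrated with a small, self-contained sketch. It is not the Intel® MLSL implementation: it assumes a toy one-parameter least-squares model and synthetic data, and the function names are hypothetical. It only shows that averaging the gradients of three nodes, each with a local batch of 64, gives the same update as one node processing the combined batch of 192.

```python
# Sketch of data-parallel SGD for a toy 1-parameter model y = w * x.
# Every "node" holds the same weight w but a different shard of the batch.

def shard_gradient(w, shard):
    """Mean gradient of 0.5 * (w*x - y)^2 over one node's local batch."""
    return sum((w * x - y) * x for x, y in shard) / len(shard)

def data_parallel_step(w, shards, lr):
    """One SGD step: each node computes a local gradient, then gradients are averaged."""
    local_grads = [shard_gradient(w, shard) for shard in shards]
    avg_grad = sum(local_grads) / len(local_grads)  # stands in for an all-reduce
    return w - lr * avg_grad

nodes = 3
batch_per_node = 64
total_batch = nodes * batch_per_node  # 3 * 64 = 192, as in the text

# Synthetic data following y = 2x, split evenly across the nodes.
data = [(float(i % 7 + 1), 2.0 * (i % 7 + 1)) for i in range(total_batch)]
shards = [data[n * batch_per_node:(n + 1) * batch_per_node] for n in range(nodes)]

w_parallel = data_parallel_step(0.0, shards, lr=0.01)  # three nodes, 64 samples each
w_single = data_parallel_step(0.0, [data], lr=0.01)    # one node, 192 samples
print(total_batch, w_parallel, w_single)
```

Because the shards are the same size, the average of the per-node mean gradients equals the mean gradient over the full batch, so the two updates agree up to floating-point rounding.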

To set up a multinode cluster, download and install the Intel® Machine Learning Scaling Library (Intel® MLSL) 2017 package from https://github.com/01org/MLSL/releases/tag/v2017-Preview, source mlslvars.sh, and then recompile Caffe with USE_MLSL := 1 set in Makefile.config. When the build completes successfully, start the Caffe training using the message passing interface (MPI) command as follows:

mpirun -n 3 -ppn 1 -machinefile ~/mpd.hosts ./build/tools/caffe train \
    --solver=models/bvlc_googlenet/solver_client.prototxt --engine=MKL2017

where -n sets the total number of MPI processes (one per node here, so it equals the number of nodes) and -ppn sets the number of processes per node. The nodes are listed in ~/mpd.hosts by IP address, one per line, as follows:

192.161.32.1

192.161.32.2

192.161.32.3

192.161.32.4
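As a minimal sketch of how the hosts file and the launch command fit together, the following Python snippet generates both from a list of node addresses. The helper name `build_mpirun_command` is hypothetical, and the snippet uses only the flags that appear in the command above. It takes three of the listed addresses so that the process count matches the `-n 3` in the example.

```python
def build_mpirun_command(hosts, hostfile="~/mpd.hosts", procs_per_node=1):
    """Assemble the mpirun invocation shown above for the given host list."""
    total_procs = len(hosts) * procs_per_node  # value passed to -n
    return [
        "mpirun",
        "-n", str(total_procs),
        "-ppn", str(procs_per_node),
        "-machinefile", hostfile,
        "./build/tools/caffe", "train",
        "--solver=models/bvlc_googlenet/solver_client.prototxt",
        "--engine=MKL2017",
    ]

# Three of the node addresses listed in the text (one MPI process per node).
hosts = ["192.161.32.1", "192.161.32.2", "192.161.32.3"]

# Contents of the machine file: one address per line.
hostfile_text = "\n".join(hosts) + "\n"

command = build_mpirun_command(hosts)
print(hostfile_text, end="")
print(" ".join(command))
```

Deriving `-n` from the host list keeps the process count and the machine file in step when nodes are added or removed.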

Ansible* scripts are used to copy the binaries or files across the nodes.

Cluster communication uses Intel® Omni-Path Architecture (Intel® OPA) [4].

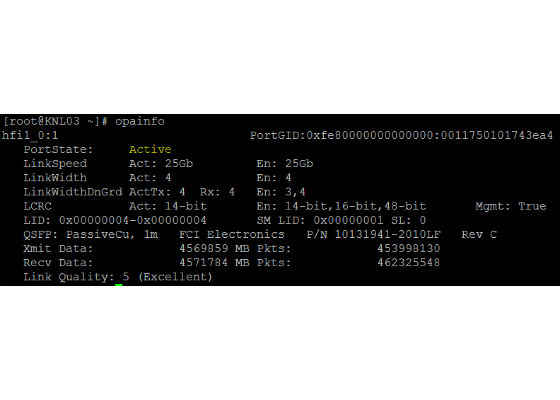

The cluster setup is validated by running the ‘opainfo’ command on every machine; the port state must always be ‘Active’.

Figure 1: Intel® Omni-Path Architecture (Intel® OPA) cluster information.

3. Experiments

The current experiment focuses on measuring the performance of the VGG16 network on the Flickr* logo dataset, which has 32 logo classes. Intel® Optimized Technical Preview for Multinode Caffe* is used for the single-node experiments, and the same build with Intel® MLSL enabled is used for the multinode experiments. The input images were all converted to Lightning Memory-Mapped Database (LMDB) format for better efficiency. All of the experiments are set to run for 10K iterations, and the observations are noted below. We conducted our experiments on the following machine configurations. Due to lack of time, we limited our experiments to a single execution per architecture.

Intel Xeon Phi processor

- Model Name: Intel® Xeon Phi™ processor 7250 @1.40GHz

- Core(s) Per Socket: 68

- RAM (free): 70 GB

- OS: CentOS* 7.3

Intel Xeon processor

- Model Name: Intel® Xeon® processor E5-2699 v4 @ 2.20GHz

- Core(s) Per Socket: 22

- RAM (free): 123 GB

- OS: Ubuntu* 16.1

The multinode cluster setup is configured as follows:

KNL 01 (Master)

- Model Name: Intel® Xeon Phi™ processor 7250 @1.40GHz

- Core(s) Per Socket: 68

- RAM (free): 70 GB

- OS: CentOS 7.3

KNL 03 (Slave node)

- Model Name: Intel Xeon Phi processor 7250 @1.40GHz

- Core(s) Per Socket: 68

- RAM (free): 70 GB

- OS: CentOS 7.3

KNL 04 (Slave node)

- Model Name: Intel Xeon Phi processor 7250 @1.40GHz

- Core(s) Per Socket: 68

- RAM (free): 70 GB

- OS: CentOS 7.3

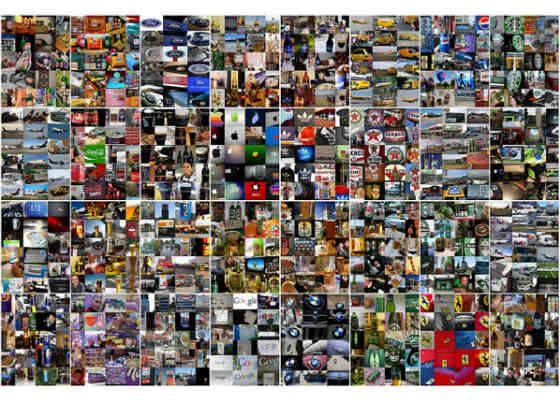

3.1. Training Data

The training and test image datasets were obtained from the FlickrLogos32 / FlickrLogos47 datasets, which are maintained by the Multimedia Computing and Computer Vision Lab, Augsburg University. The dataset contains 32 logo classes (brands), with images downloaded from Flickr, as illustrated in the following figure:

Figure 2: Flickr logo image dataset with 32 classes.

The 32 classes are as follows: Adidas*, Aldi*, Apple*, Becks*, BMW*, Carlsberg*, Chimay*, Coca-Cola*, Corona*, DHL*, Erdinger*, Esso*, Fedex*, Ferrari*, Ford*, Foster's*, Google*, Guinness*, Heineken*, HP*, Milka*, Nvidia*, Paulaner*, Pepsi*, Ritter Sport*, Shell*, Singha*, Starbucks*, Stella Artois*, Texaco*, Tsingtao*, and UPS*.

The dataset consists of 8240 images: 6000 are no_logo images, and the remaining 2240 are logo images, 70 for each of the 32 classes, which makes the dataset highly skewed. The full set of 8240 samples is split into training and test sets in a ratio of 90:10.
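The dataset figures above can be checked with a few lines of arithmetic. The sketch below uses only the counts given in the text; the variable names are illustrative, and it assumes the 90:10 split is applied to the full image count without any per-class stratification, which the text does not specify.

```python
no_logo_images = 6000
logo_classes = 32
images_per_class = 70

logo_images = logo_classes * images_per_class   # 32 * 70 = 2240
total_images = no_logo_images + logo_images     # 6000 + 2240 = 8240

# 90:10 train/test split over the full set (assumed unstratified).
train_images = total_images * 90 // 100         # 7416
test_images = total_images - train_images       # 824

# How skewed the data is: the no_logo class versus one logo class.
no_logo_share = no_logo_images / total_images
per_class_share = images_per_class / total_images
imbalance_ratio = no_logo_images / images_per_class

print(f"total={total_images}, train={train_images}, test={test_images}")
print(f"no_logo share={no_logo_share:.1%}, per-logo-class share={per_class_share:.2%}")
print(f"no_logo : single logo class = {imbalance_ratio:.1f} : 1")
```

The no_logo class alone accounts for well over half of the images, which is why a classifier that always predicts no_logo would already reach a deceptively high accuracy on this data.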

3.2. Model Building and Network Topology

The VGG16 network topology was used for our experiments. VGG16 has 16 weight layers (13 convolutional and 3 fully connected (FC) layers) and uses very small (3 x 3) convolution filters. This design showed a significant improvement in network performance and detection accuracy over the prior art (winning the first and second prizes in the ImageNet* challenge in 2014) and has since been widely used as a reference topology.
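To make the layer count concrete, the sketch below walks through the standard VGG16 layout (configuration D of the original VGG paper) and counts weight layers and parameters. It assumes the original ImageNet setup with a 224 x 224 RGB input and 1000 output classes; the output size used in the logo experiments is not stated in the text, so `num_classes` is left as a parameter. The function name is hypothetical.

```python
# Standard VGG16 layout: integers are output channels of 3x3 convolutions
# (padding 1, so spatial size is preserved); "M" is a 2x2 max pooling layer.
VGG16_CONV_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                     512, 512, 512, "M", 512, 512, 512, "M"]

def describe_vgg16(num_classes=1000, in_channels=3, input_size=224):
    """Return (conv layer count, FC layer count, total parameter count)."""
    conv_layers = 0
    params = 0
    channels, size = in_channels, input_size
    for item in VGG16_CONV_CONFIG:
        if item == "M":
            size //= 2                                   # pooling halves height and width
        else:
            params += 3 * 3 * channels * item + item     # 3x3 weights plus biases
            channels = item
            conv_layers += 1

    fc_sizes = [4096, 4096, num_classes]
    fan_in = channels * size * size                      # flattened feature map
    for fan_out in fc_sizes:
        params += fan_in * fan_out + fan_out             # weights plus biases
        fan_in = fan_out
    return conv_layers, len(fc_sizes), params

conv, fc, params = describe_vgg16()
print(conv, fc, conv + fc, params)  # 13 3 16 138357544
```

Most of the parameters sit in the first fully connected layer, so changing `num_classes` for a smaller label set such as the logo classes alters the total only slightly.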

4. Results

4.1 Observations on Intel® Xeon® Processor

The Intel Xeon processors are running under the following software configurations:

Caffe Version: 1.0.0-rc3

MKL Version: _2017.0.2.20170110

MKL_DNN: SUPPORTED

GCC Version: 5.4.0

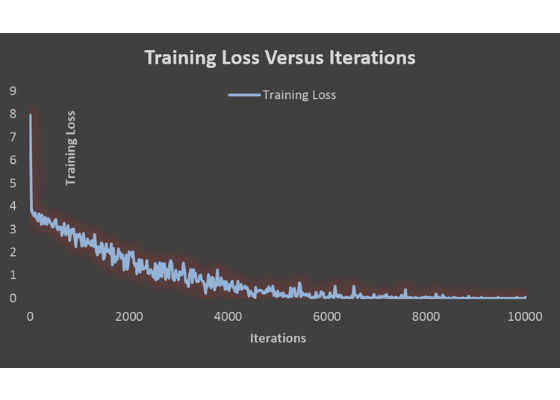

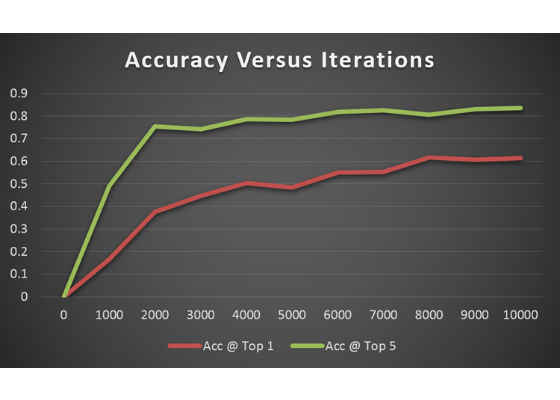

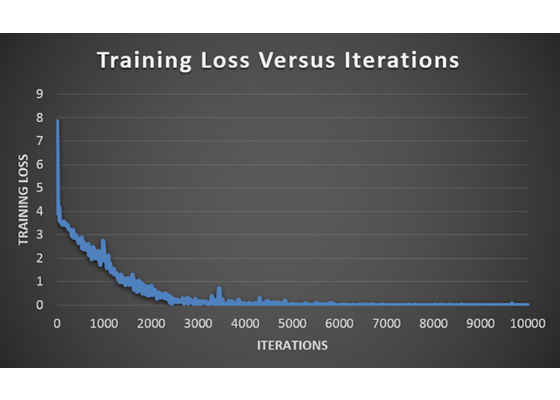

The following observations were noted while training for 10K iterations with a batch size of 32 and learning rate policy as POLY.

Figure 3:Training loss variation with iterations (batch size 32, LR policy as POLY).

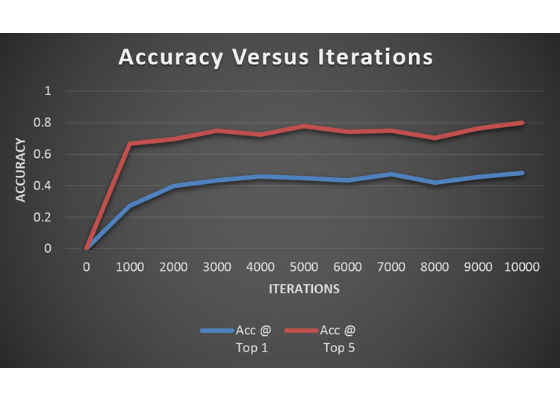

Figure 4:Accuracy variation with iterations (batch size 32, LR policy as POLY).

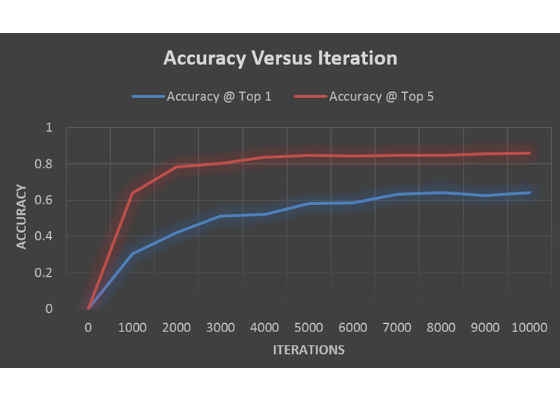
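For reference, the POLY learning rate policy in Caffe decays the learning rate polynomially from its base value to zero at the final iteration, following base_lr * (1 - iter / max_iter) ^ power. The sketch below evaluates that schedule for the 10K iterations used here. The `BASE_LR` and `POWER` values are hypothetical, since the solver settings are not given in the text.

```python
def poly_learning_rate(base_lr, iteration, max_iter, power):
    """Caffe-style 'poly' schedule: base_lr * (1 - iteration / max_iter) ** power."""
    return base_lr * (1.0 - iteration / max_iter) ** power

MAX_ITER = 10_000   # 10K iterations, as in the experiments
BASE_LR = 0.01      # hypothetical value, not taken from the article
POWER = 0.5         # hypothetical value, not taken from the article

schedule = {
    it: poly_learning_rate(BASE_LR, it, MAX_ITER, POWER)
    for it in (0, 2500, 5000, 7500, 10_000)
}
for it, lr in schedule.items():
    print(f"iteration {it:>6}: lr = {lr:.6f}")
```

Unlike a fixed or step policy, this schedule produces a new learning rate at every iteration, starting at the base value and reaching zero at the last iteration.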

The following observations were noted while training for 10K iterations with a batch size of 64 and learning rate policy as POLY.

Figure 5: Training loss variation with iterations (batch size 64, LR policy as POLY).

Figure 6: Accuracy variation with iterations (batch size 64, LR policy as POLY).

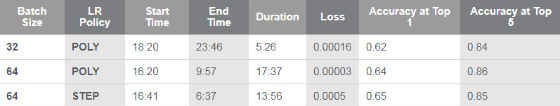

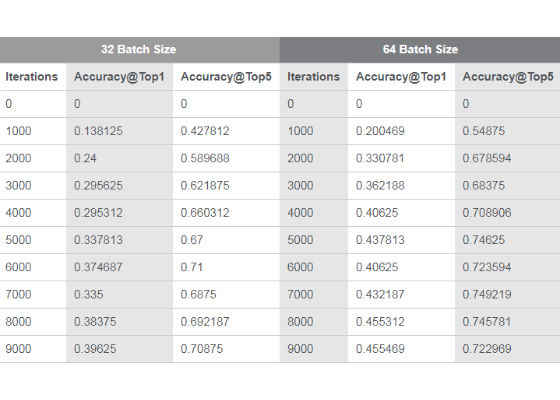

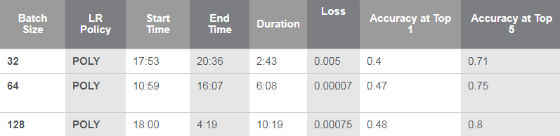

The real-time training and test observations for different batch sizes on the Intel Xeon processor are shown in Table 1. Table 2 shows how the accuracy varies with batch size.

Table 1: Real-time training results for Intel® Xeon® processor.

Table 2: Batch size versus accuracy details on the Intel® Xeon® processor.

4.2 Observations on Intel® Xeon Phi™ Processor

The Intel Xeon Phi processors are running under the following software configurations:

Caffe Version: 1.0.0-rc3

MKL Version: _2017.0.2.20170110

MKL_DNN: SUPPORTED

GCC Version: 6.2

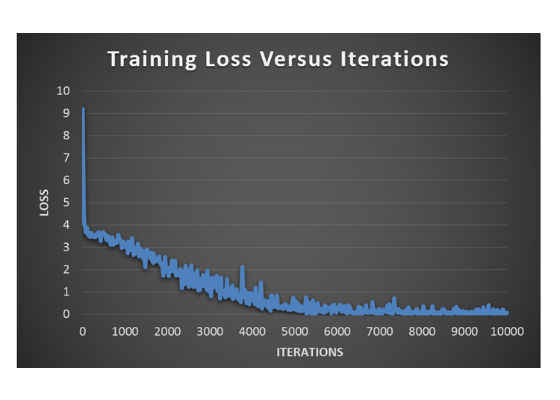

The following observations were noted while training for 10K iterations with a batch size of 32 and learning rate policy as POLY.

Figure 7: Training loss variation with iterations on Intel® Xeon Phi™ processor (batch size 32, LR policy as POLY).

Figure 8: Accuracy variation with iterations on Intel® Xeon Phi™ processor (batch size 32, LR policy as POLY).

Figure 9: Training loss variation with iterations on Intel® Xeon Phi™ processor (batch size 64, LR policy as POLY).

Figure 10: Accuracy variation with iterations on Intel® Xeon Phi™ processor (batch size 64, LR policy as POLY).

Figure 11: Training loss variation with iterations on Intel® Xeon Phi™ processor (batch size 128, LR policy as POLY).

Figure 12: Accuracy variation with iterations on Intel® Xeon Phi™ processor (batch size 128, LR policy as POLY).

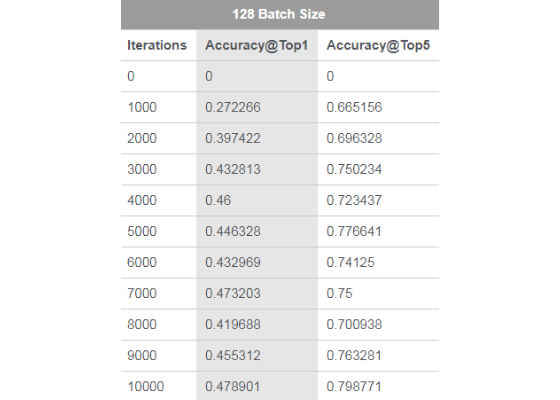

Table 3: Batch size versus accuracy details for the Intel® Xeon Phi™ processor.

Table 4: Real-time training results for the Intel® Xeon Phi™ processor.

5. Conclusion and Future Work

We observed from Table 1 that a batch size of 32 was the optimal configuration in terms of speed and accuracy. Although accuracy increases slightly with a batch size of 64, the gain is small compared with the increase in training time. We also observed that the learning rate policy has a significant impact on training time and less impact on accuracy; recalculating the learning rate on every iteration may have slowed down the training. There is a minor gain in Top 5 accuracy with the LR policy as POLY, which might be due to the optimal calculation of the learning rate. The gain might vary significantly on a larger dataset.

We observed from Table 3 that the efficiency of the Intel Xeon Phi processor increases with batch size, and that the loss also decreases faster as the batch size increases. Table 4 indicates that larger batch sizes also run faster on Intel Xeon Phi processors.

The observations in the above tables imply that training on Intel Xeon Phi machines is faster than the same training on Intel Xeon machines, thanks to the bootable host processor that delivers massive parallelism and vectorization. However, the accuracy achieved on Intel Xeon Phi processors is much lower than that achieved on Intel Xeon processors for the same number of iterations, so a few more iterations must be run on Intel Xeon Phi processors to reach the same accuracy levels.

Source: https://software.intel.com/en-us/articles/object-classification-using-cnn-across-intel-architectures