The Internet of Things—Analytics: Using the Intel IoT Analytics website for Data Mining

Interconnected physical devices represent a whole new opportunity for businesses and individuals to take even greater control of their environment. We can now remotely control our homes, vehicles, and workspaces with nothing more than a handheld smartphone. With this high degree of connectivity, it is now possible to gather large amounts of data about these devices and—more specifically—the information that the sensors embedded within these devices provide. These sensors, such as those for temperature, acceleration, and position, can provide a steady stream of data, but these data have limited utility without a strong, associated set of data analytics. This article and its companion piece explore the Intel® Internet of Things (IoT) Analytics Dashboard and demonstrate how you can use that site to aggregate, organize, and facilitate large-scale data mining from a collection of geographically distributed device sensors.

Introduction

The power of connected devices has grown exponentially. The explosion of mobile devices, ubiquitous networking, and powerful, inexpensive applications has created a wealth of possibilities for individuals to connect in ways never before seen. Who could have predicted the phenomenal growth of social media just a decade ago? In a similar way, we are now moving toward a society where the price of connectivity has dropped to pennies per megabit, wireless communication is virtually ever present, and machines can now communicate with one another directly. This is the world of the IoT.

One growing area of IoT development is the collection and analysis of geographically distributed device sensor data. Consider a collection of temperature sensors embedded in a roadway. These sensors are all connected, by wire or wirelessly, to a localized collection point where the data stream is collected, preprocessed, and aggregated. The steady stream of information is then fed to road managers, who are tasked with deciding when and where to deploy plows, road treatment, closures, and even temporary detours. Now, consider using these data (and a wealth of historical data collected from previous years) to create a predictive model of how roadway temperature will fluctuate as a result of local conditions. Perhaps a specific bridge has a tendency to ice over before others; an alert to this condition will allow proper treatment, thereby reducing the risk of accidents on that bridge.

The Intel® Galileo and Edison boards were designed and built to provide just this kind of sensor data collection to the general public. A wide variety of sensors can report to the IoT integration board, where the data are temporarily collected and preprocessed before being forwarded for additional analysis. Intel created the Internet of Things Analytics website (IoT Analytics) to connect with Intel Galileo- and Edison-driven sensor networks and aggregate large quantities of time series data for further analysis.

Sensor Types and Applications

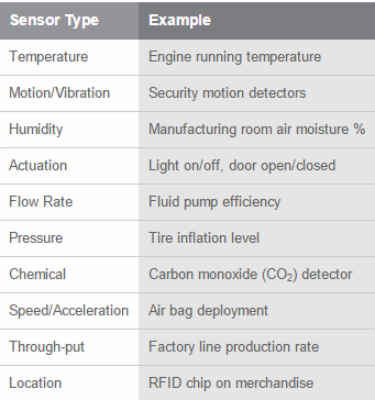

Today, a wide variety of small, inexpensive but powerful environmental sensors can be attached to and integrated with virtually any other device (see Table 1). These sensors transmit measurements at a defined interval (for example, every few seconds) and with a defined precision of measurement. The result of this data generation is a time series of regular time points and a specific value for each point. For example, consider a transmitting radio-frequency identification (RFID) chip embedded in a mine worker’s badge. The chip transmits a continuous stream of time and position data about the worker, allowing mine safety managers to know exactly where the worker is at all times. Such data have clear value if a mine accident occurs, where immediate response is critical to saving lives.

Table 1. Sensor Types and Examples

Sensors can be built to transmit their measurements either over a defined physical connection, such as an RS232 serial interface, or wirelessly using one of several approaches, such as via a Bluetooth* connection. The only requirement is that the sensor be designed for the physical environment—temperature, shock, pressure, etc.—and that the connection be reasonably secure.

Data Collection and Transport

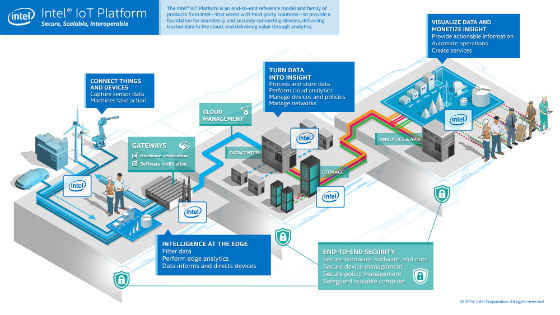

Sensor data are of little utility if they are not collected and transported to a point where they can be reviewed and analyzed. This is the purpose of the Intel Galileo and Edison boards (Figure 1) and the IoT Analytics site. These development boards can be connected (via wired Arduino connections or wirelessly via Bluetooth) to a variety of inputs limited only by the number of physical ports. Multiple boards can be interconnected to aggregate sensor data from a distributed network of sensor end points.

The sensor data stream can be forwarded directly to the IoT Analytics site or preprocessed (to remove spurious measurements or gaps) directly on the board prior to retransmission to the IoT Analytics site. For more information, review the Intel Edison Development Board Summary (see the “For More Information” Section for a link).
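The preprocessing step described above (removing spurious measurements and filling gaps) can be sketched as follows. This is a minimal illustration, not code that ships with the boards or the IoT Analytics site: the function name `preprocess`, the `(time, value)` tuple layout, the use of `None` for a missing reading, and the choice of linear interpolation are all assumptions made for the example.

```python
from typing import List, Optional, Tuple

# Hypothetical reading format: (time in seconds, value or None for a gap).
Reading = Tuple[float, Optional[float]]


def preprocess(readings: List[Reading], low: float, high: float) -> List[Tuple[float, float]]:
    """Treat out-of-range values as gaps, then fill interior gaps by
    linear interpolation. Readings are assumed to be sorted by time."""
    # Step 1: mark spurious (out-of-range) readings as gaps.
    cleaned = [
        (t, v if v is not None and low <= v <= high else None)
        for t, v in readings
    ]
    known = [(t, v) for t, v in cleaned if v is not None]

    result = []
    for t, v in cleaned:
        if v is not None:
            result.append((t, v))
            continue
        # Step 2: find the nearest valid neighbours on each side of the gap.
        before = [(tk, vk) for tk, vk in known if tk < t]
        after = [(tk, vk) for tk, vk in known if tk > t]
        if before and after:
            (t0, v0), (t1, v1) = before[-1], after[0]
            result.append((t, v0 + (v1 - v0) * (t - t0) / (t1 - t0)))
        # Gaps at the very start or end cannot be interpolated and are dropped.
    return result
```

For example, with limits of -20 and 120, the readings `[(0.0, 10.0), (1.0, None), (2.0, 500.0), (3.0, 13.0)]` contain one gap and one spurious value; both are replaced by values interpolated between the readings at 0.0 and 3.0 seconds.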

Processing and Storage

The preprocessed sensor data streams can then be gathered at a secondary processing point, such as the IoT Analytics site (Figure 2). The IoT Analytics site provides a workspace (see the “For More Information” Section for a link to installation instructions and more information) and a suite of tools for collecting, visualizing, processing, and alerting on sensor time series data. On the user dashboard you can see the current collection state of devices, the observations (measurements) taken over the past hour, and a running review of all user-configured alerts that have been triggered.

The companion to this article uses the IoT Analytics web service application programming interface (API) to demonstrate how the collected data can be accessed for data mining and analysis. The example includes a sample application illustrating direct analysis from data collected on the IoT Analytics site.

Qualities of Time Series

As noted earlier, all sensor data consist of a collection of measurements, each taken at a specific point in time. This time series collection has a set of qualities that makes analysis of these data unique compared with other types of analysis, such as financial or product-marketing analysis:

- Regular Interval of Collection (Temporal). Values are collected at defined, regular time points, such as seconds, minutes, or days.

- Sequential Data Values (Dimensional). Values are collected in a sequential manner, with one following the next; the number of measurements in a particular time series set defines the “dimensionality” of the data stream.

- Fidelity (Precision). The sensor values are reported to a well-defined accuracy of measured values (that is, instrument error). Note that the precision of the analyzed values can never exceed the precision of the original measurement—for example, if the measured value is 0.35, the analyzed values cannot have more than two decimal places of significance.

- Range (Amplitude). The sensor values will have an expected range that is determined by the limits that the physical sensor itself imposes (for example, between −20 and 120 degrees F for a temperature sensor).

Each element of a time series data set typically consists of a time–value pair, such as (t)ime = 0.55 sec and (v)alue = 40.5° C. If the periodicity of the sensor data stream is 0.05 seconds, then the next measurement is expected at t = 0.60 seconds, followed by t = 0.65 seconds, etc. This collection can be visualized in a two-dimensional plot, with time as the abscissa (x-axis) and reported value as the ordinate (y-axis). An example plot using the IoT Analytics site is provided in Figure 3.

As you can see in the figure, the IoT Analytics site is capable of plotting time series data in a wide range of formats, including summarized by time period (minute, hour, date, week, month, year), as a line or area plot, and with the capability of “zooming” in on specific data sets for a more detailed view of the underlying data.
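The time series qualities listed above (regular interval and expected range) lend themselves to a simple sanity check on incoming time–value pairs. The sketch below is illustrative only; the function name `check_series`, the tuple layout, and the limit values used in the example are assumptions, not part of the IoT Analytics site.

```python
import math
from typing import List, Tuple


def check_series(samples: List[Tuple[float, float]], period: float,
                 low: float, high: float) -> List[str]:
    """Return a list of problems found in a series of (time, value) pairs."""
    problems = []
    for i, (t, v) in enumerate(samples):
        if i > 0:
            previous_t = samples[i - 1][0]
            # Regular interval: successive time points should be one period apart
            # (compared with a small tolerance for floating-point rounding).
            if not math.isclose(t - previous_t, period, abs_tol=1e-9):
                problems.append(f"irregular interval before t={t}")
        # Range: values outside the sensor's physical limits are suspect.
        if not low <= v <= high:
            problems.append(f"value {v} out of range at t={t}")
    return problems
```

Called with the example from the text, `check_series([(0.55, 40.5), (0.60, 40.7), (0.65, 40.6)], period=0.05, low=-20, high=120)` reports no problems, whereas a missing sample or a value beyond the limits each produce one entry in the returned list.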

Analysis and Modeling

The science of time series analysis has a long and rich history. There are many approaches to processing the raw data that time points and values represent, more than can be discussed here. For a recent review of these techniques, see the article by T-C Fu, a link to which is available in the “For More Information” Section. Regardless of analysis technique, however, there are a few common features to time series data mining approaches.

The raw time series data are only of limited use, such as visual inspection, without further analysis and modeling. Although it is certainly possible to set alarms based on maximum value limits (say, on a statistical basis of one-sigma variation), a more sophisticated approach mines the data for predictive patterns. In the example in Figure 3, imagine that a CO2 vehicle exhaust sensor is reporting measurements over a defined time period at a specific frequency. If this were part of a larger data set that collects multiple sensor data (such as engine temperature and fuel consumption), you could look for patterns by comparing variations in one plot with another to predict future vehicle maintenance.

Regardless of the technique used to develop an analysis model, the raw data must first be modified or reduced to a workable format. A variety of techniques have been developed for time series data manipulation, but one of the most common is called Z-Normalization. This technique converts the time series raw values to values that have a mean (average) of 0 and a standard deviation of 1.0. This conversion allows you to compare one time series data set directly with another, greatly simplifying the comparison algorithm. The usual assumption behind this conversion is that the data points follow a Normal probability distribution, which is a standard “bell curve” of expected values within a specified range. For most sensor-sampled data, this assumption has been found to hold, allowing general use of the technique.
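Z-Normalization as described above replaces each value x with (x - mean) / standard deviation. A minimal sketch using only the Python standard library follows; the function name `z_normalize` and the choice of the population standard deviation are assumptions made for the example.

```python
import statistics
from typing import List, Sequence


def z_normalize(values: Sequence[float]) -> List[float]:
    """Rescale a series so that it has mean 0 and standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        # A constant series has no variation to rescale.
        raise ValueError("cannot z-normalize a constant series")
    return [(v - mean) / std for v in values]
```

Two series normalized this way can be compared point by point even if the underlying sensors report in different units or at different offsets.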

A predictive model that is based on “normal” (that is, nominal control) system behavior is usually required to detect anomalous system behavior. There are several ways to create such a model: using a well-established measured baseline (supervised), applying mathematical or engineering predictions of expected system behavior (semisupervised), or simply comparing the sensor data points against themselves at different times (unsupervised). In the final case, a selected time period is compared with another, perhaps using a sliding window, to check for unexpected values (see “For More Information”).
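One possible reading of the unsupervised, sliding-window comparison mentioned above is to compare each new value against the statistics of the window that immediately precedes it. The sketch below takes that approach; it is only one of many variants, and the function name, window size, and threshold are assumptions chosen for illustration.

```python
import statistics
from typing import List, Sequence


def sliding_window_anomalies(values: Sequence[float], window: int,
                             threshold: float = 3.0) -> List[int]:
    """Return the indices of values that deviate from the mean of the
    preceding `window` values by more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        std = statistics.pstdev(history)
        if std == 0:
            # No variation in the window: no meaningful scale to compare against.
            continue
        if abs(values[i] - mean) / std > threshold:
            flagged.append(i)
    return flagged
```

Because the window slides forward with the data, the "normal" baseline adapts to slow drift in the sensor while still flagging abrupt departures.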

To create these models, you apply an algorithm or approach against the data streams collected from the sensor. Common techniques include Fourier transforms (conversion of time series values to their frequency components), dynamic time warping (aligning time series data sets irrespective of measurement times), and wavelet transforms (segmenting the time series data into increasingly smaller segments). A relatively new technique, termed Symbolic Aggregate approXimation (SAX), converts the time series data to a defined symbolic set, such as letters of the alphabet, which permits the use of a variety of pattern-matching algorithms, including those developed for biological sequence analysis of DNA, RNA, and protein (that is, bioinformatics).
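The symbolic conversion described above can be sketched in three steps: z-normalize the series, average it over equal-sized segments, and map each segment average to a letter using breakpoints that divide the standard Normal distribution into equally probable regions. The code below is a simplified illustration of that idea rather than a reference implementation; the function name `sax_word` and the requirement that the series length be a multiple of the segment count are assumptions made to keep the example short.

```python
import bisect
import statistics
import string
from typing import Sequence


def sax_word(values: Sequence[float], segments: int, alphabet_size: int = 4) -> str:
    """Convert a numeric series to a short string of letters."""
    if len(values) % segments != 0:
        raise ValueError("series length must be a multiple of the segment count")
    if not 2 <= alphabet_size <= 26:
        raise ValueError("alphabet size must be between 2 and 26")

    # Step 1: z-normalize (mean 0, standard deviation 1).
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        raise ValueError("cannot convert a constant series")
    z = [(v - mean) / std for v in values]

    # Step 2: average the normalized values over equal-sized segments.
    size = len(z) // segments
    averages = [statistics.fmean(z[i * size:(i + 1) * size]) for i in range(segments)]

    # Step 3: breakpoints split the standard Normal curve into equal-probability bins.
    normal = statistics.NormalDist()
    breakpoints = [normal.inv_cdf(k / alphabet_size) for k in range(1, alphabet_size)]
    return "".join(
        string.ascii_lowercase[bisect.bisect_left(breakpoints, a)] for a in averages
    )
```

Once two series have been reduced to strings in this way, ordinary string-comparison and sequence-matching tools can be applied to them.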

After you have selected an analytic approach, the next decision is how to detect anomalies. Traditionally, that has been accomplished either by recognizing outliers (called statistical detection) or by calculating the difference between a “nominal” data curve and the measured series. This is known as a distance measure and is often calculated using the Euclidean or squared difference between corresponding data points. The aggregation of these values provides a quantitative indication of difference between the two curves. If the nominal and measured time series differ by more than a given amount, the time series is flagged for further investigation. In addition, the pattern-matching (and scoring) algorithms developed for bioinformatics research can be used to quantitatively evaluate two (or more) time series.
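The distance measure described above can be illustrated with a short sketch that computes the Euclidean distance between a nominal curve and a measured series and flags the series when the distance exceeds a limit. The function names and the threshold parameter are hypothetical, and both series are assumed to be sampled at the same time points.

```python
import math
from typing import Sequence


def euclidean_distance(nominal: Sequence[float], measured: Sequence[float]) -> float:
    """Square root of the summed squared differences between paired points."""
    if len(nominal) != len(measured):
        raise ValueError("series must have the same number of points")
    return math.sqrt(sum((n - m) ** 2 for n, m in zip(nominal, measured)))


def is_anomalous(nominal: Sequence[float], measured: Sequence[float],
                 max_distance: float) -> bool:
    """Flag the measured series if it differs from nominal by more than the limit."""
    return euclidean_distance(nominal, measured) > max_distance
```

In practice both series would usually be z-normalized first so that the distance reflects differences in shape rather than in scale or offset.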

Presentation

The final section of this article discusses ways to notify interested parties when anomalies are detected in a recorded time series. The IoT Analytics site provides a simple but powerful mechanism for alerting based on a set of rules. As shown in Figure 4, you can define a group of specific rules for a sensor associated with a collection of devices. The conditions for rule execution can be evaluated for several common situations:

- Basic Condition. The sensor value rises above or falls below a set limit.

- Time-based Condition. The sensor value stays above or below a limit for a defined time period.

- Statistical Variance Condition. The sensor variation (measured in units of the standard deviation, sigma) exceeds 2 or 3 sigma.

- Sensor Change Detection (Single or Multiple). One or more sensors detect a change in condition (such as an activation/deactivation event).

These capabilities are adequate for most situations where sensor analysis is required, but as the next article will show, if a more in-depth analysis is required, then you can use the IoT Analytics web server API to retrieve the sensor data sets and conduct additional data-mining operations.
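To make the first three rule conditions listed above concrete, the sketch below expresses each as a small Python function. It illustrates the logic only and is not the rule engine used by the IoT Analytics site; all function names, parameters, and the `(time, value)` sample layout are assumptions.

```python
import statistics
from typing import Sequence, Tuple


def basic_condition(value: float, low: float, high: float) -> bool:
    """True when the value falls below `low` or rises above `high`."""
    return value < low or value > high


def time_based_condition(samples: Sequence[Tuple[float, float]], low: float,
                         high: float, duration: float) -> bool:
    """True when values stay outside the limits continuously for `duration`."""
    start = None  # time at which the current out-of-limits run began
    for t, v in samples:
        if basic_condition(v, low, high):
            if start is None:
                start = t
            if t - start >= duration:
                return True
        else:
            start = None  # back within limits: reset the run
    return False


def statistical_condition(history: Sequence[float], value: float,
                          sigmas: float = 2.0) -> bool:
    """True when the value lies more than `sigmas` standard deviations
    from the mean of the historical values."""
    mean = statistics.fmean(history)
    std = statistics.pstdev(history)
    return std > 0 and abs(value - mean) > sigmas * std
```

For instance, with limits of -20 and 120, the samples `[(0, 50), (1, 125), (2, 126), (3, 127), (4, 60)]` satisfy the time-based condition for a duration of 2 seconds but not for a duration of 3 seconds.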

Conclusion

The proliferation of inexpensive but sensitive sensors and the ubiquitous nature of network connectivity have created the basis for a new way of evaluating and controlling the devices that surround us each day. By aggregating these sensor measurements and applying a set of simple analysis rules, it is possible to act proactively in the event of potential device failure or to more rapidly respond to changing environmental conditions. Moreover, by using a central aggregation site, such as the Intel IoT Analytics site, these sensor data can be subjected to sophisticated data-mining techniques that allow the creation and use of predictive behavioral models.

For More Information

- Intel Edison Development Board Summary, http://www.intel.com/content/www/us/en/do-it-yourself/edison.html.

- Intel Internet of Things (IoT) Analytics Installation Instructions, https://software.intel.com/en-us/intel-iot-platforms-getting-started-cloud-analytics.

- Intel Internet of Things (IoT) Analytics Guide, https://software.intel.com/en-us/intel-iot-developer-kit-cloud-based-analytics-user-guide.

- Fu, T-C. “A Review on Time Series Data Mining,” Engineering Applications of Artificial Intelligence 24 (2011):164–181.

- Wei, L., N. Kumar, V. Lolla, E. Keogh, S. Lonardi, and C.A. Ratanamahatana. “Assumption-Free Anomaly Detection in Time Series.” University of California-Riverside, Dept. of Computer Science & Engineering (2005).

- Camerra, A., T. Palpanas, J. Shieh, and E. Keogh. “iSAX 2.0: Indexing and Mining One Billion Time Series.” 2010 IEEE International Conference on Data Mining, pp. 58–66.

- Kämpf, M., and J.W. Kantelhardt. “Hadoop.TS: Large-Scale Time Series Processing.” International Journal of Computer Applications 74 No. 17 (2013).

For more Intel IoT resources and tools, please visit the Intel® Developer Zone.

Source: https://software.intel.com/en-us/articles/the-internet-of-things-analytics-using-the-intel-iot-analytics-website-for-data-mining