Smart speakers vulnerable to ‘light commands’ that can be used to shop online, open garage doors and more

A new way of using light-based commands to hack into smart speakers and phones has been discovered.

It was found by researchers at the University of Electro-Communications (Tokyo) and the University of Michigan.

The vulnerability lies in the MEMS microphones used in smart speakers, and it allows light to deliver commands directly to the device.

Using voice commands to control devices is nothing new, as most of us are now used to getting answers from a smart assistant on our phones and smart speakers. Google Home and Amazon Echo smart speakers are commonplace and their market share is steadily increasing, since they make it convenient to control other smart devices by voice. Of course, there are many privacy concerns when a device keeps a virtual ear out for your commands and sometimes ‘inadvertently’ eavesdrops on your conversations, but what if we told you that there might be an even bigger threat looming?

Researchers at the University of Electro-Communications (Tokyo) and the University of Michigan have found an unconventional way to communicate with devices that work on voice commands. Takeshi Sugawara, Benjamin Cyr, Sara Rampazzi, Daniel Genkin and Kevin Fu discovered a technique called Light Commands, which encodes an electrical signal in the intensity of a light beam. The beam is aimed directly at the microphone of a smart speaker, and attackers can use it to trick the device’s mic into producing electrical signals as if it were receiving genuine audio, so the signal resembles a voice command given by a user.
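To make the idea of encoding a signal in light intensity more concrete, here is a minimal sketch of amplitude modulation in Python. It is only an illustration of the general principle, not the researchers' setup: the function names, the `bias` and `depth` values and the 440 Hz test tone are all assumptions chosen for the example.

```python
import math

def modulate_intensity(audio, bias=0.5, depth=0.4):
    """Map audio samples in [-1, 1] onto a light intensity that never goes negative.

    bias and depth are illustrative values, not figures from the research.
    """
    intensity = []
    for sample in audio:
        sample = max(-1.0, min(1.0, sample))  # clip to the expected range
        intensity.append(bias + depth * sample)
    return intensity

def recover_audio(intensity):
    """Subtract the constant brightness to get back an audio-shaped signal."""
    mean = sum(intensity) / len(intensity)
    return [value - mean for value in intensity]

# A 440 Hz test tone, 0.1 seconds long, sampled at 8 kHz (hypothetical input).
sample_rate = 8000
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(800)]

intensity = modulate_intensity(tone)
recovered = recover_audio(intensity)
```

In this simplified model the brightness wobbles around a constant level in step with the audio, and whatever sits at the receiving end only has to ignore the constant part to end up with a scaled copy of the original waveform.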

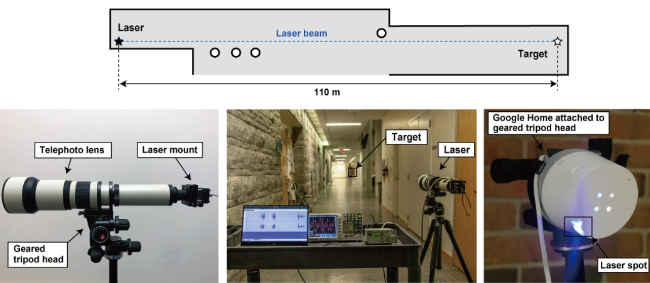

This means that an attacker can potentially use a laser to deliver commands directly to a speaker, which makes this a worrying flaw. As for the distance from which it can be exploited, the researchers were able to deliver commands along a 110-meter-long hallway. They used a commercially available telephoto lens to precisely target a speaker’s mic and mounted both the laser and the speaker on tripods to increase accuracy. The test conducted by the researchers worked on some of the most popular devices, including the Google Home, Home Mini, Amazon Echo Plus 1st and 2nd Gen, Echo, Echo Dot 2nd and 3rd Gen, Echo Show 5, iPhone XR, iPad 6th Gen, Samsung Galaxy S9, and the Google Pixel 2.

While there is no mitigation for the vulnerability yet, the researchers do mention that manufacturers of such smart speakers can use sensor fusion techniques. This entails comparing audio from two mics instead of relying on one. A command picked up by only one mic can be discarded as an injected command, and an attacker would find it difficult to aim two laser beams precisely enough to fool both.
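As a rough illustration of the two-microphone check, the Python sketch below compares the signal level on each mic and flags a command that shows up strongly on only one of them. The function names and the `min_level` and `max_ratio` thresholds are hypothetical, and a real product would need a far more careful comparison than this.

```python
def rms(samples):
    """Root-mean-square level of a block of audio samples."""
    return (sum(s * s for s in samples) / len(samples)) ** 0.5

def looks_injected(mic_a, mic_b, min_level=0.01, max_ratio=10.0):
    """Return True when one mic hears a signal and the other barely does.

    min_level and max_ratio are made-up thresholds for illustration only.
    """
    level_a, level_b = rms(mic_a), rms(mic_b)
    loud, quiet = max(level_a, level_b), min(level_a, level_b)
    if loud < min_level:
        return False  # nothing was heard on either mic
    if quiet == 0.0:
        return True   # one mic is completely silent
    return loud / quiet > max_ratio

# A real voice reaches both mics at similar levels.
voice_a = [0.5, -0.5] * 100
voice_b = [0.4, -0.4] * 100

# A laser aimed at one mic leaves the other almost silent.
laser_a = [0.5, -0.5] * 100
laser_b = [0.001, -0.001] * 100

print(looks_injected(voice_a, voice_b))
print(looks_injected(laser_a, laser_b))
```

With these sample values the first check comes back `False` and the second `True`, since the laser case shows a level on one mic that is hundreds of times higher than on the other.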