All you need to know about smartphone camera technology

From blurry VGA cameras to present-day rivals of dedicated digital cameras, we take you through the hardware that makes up smartphone cameras as they stand today.

Smartphone cameras are arguably the most frequently used technology of today, catapulted to importance by the meteoric rise of social media. With an increasing number of users craving superior imaging on their mobile devices, companies have upped their game, innovating in mobile camera sensors, focussing techniques and optical stabilisation mechanisms, alongside adopting wider-aperture lenses and laser-assisted autofocus modules. Here, we take you through some of the latest hardware-level innovations that are carrying smartphone cameras into the future. Notably, most of these technologies were once reserved for dedicated cameras and have only recently begun making their way into smartphones.


RGBW Bayer filters in Sony’s IMX3– series sensors

Smartphone cameras have almost always used CMOS (Complementary Metal Oxide Semiconductor) image sensors, since CCD sensors have historically consumed more power and cost more to produce. In general terms, a CMOS sensor comprises an array of photodetector pixels that convert incident light into an analogue electrical signal. On-chip amplifiers then boost this signal, and analogue-to-digital converters turn it into digital form, which on its own carries only luminance (brightness) data. To capture chrominance (colour) data, an RGBG Bayer filter sits over the photodetectors so that each pixel records just one of red, green or blue. The interpolation (demosaicing) algorithm in the camera’s image signal processor then reconstructs the missing colours at every pixel to produce the final image.
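To make the interpolation step concrete, here is a minimal sketch of Bayer mosaicking followed by bilinear demosaicing. It assumes an RGGB layout (the common arrangement of the RGBG pattern), floating-point values and images stored as nested lists; the function names are hypothetical, and real image signal processors use far more sophisticated, edge-aware algorithms.

```python
# Minimal sketch: simulate a Bayer colour filter array, then rebuild full
# colour by bilinear interpolation. Assumptions (illustrative only, not any
# vendor's ISP): RGGB layout, float values, images as nested lists, size >= 2x2.

def bayer_channel(y, x):
    """Colour channel (0=R, 1=G, 2=B) sampled at pixel (y, x) in an RGGB layout."""
    if y % 2 == 0 and x % 2 == 0:
        return 0  # red sites: even row, even column
    if y % 2 == 1 and x % 2 == 1:
        return 2  # blue sites: odd row, odd column
    return 1      # the other two sites in each 2x2 block are green


def mosaic(rgb):
    """Keep one colour per pixel, as the physical filter array does."""
    return [[px[bayer_channel(y, x)] for x, px in enumerate(row)]
            for y, row in enumerate(rgb)]


def demosaic_bilinear(raw):
    """Estimate R, G and B at every pixel. A channel measured at the pixel is
    kept as-is; a missing channel is the mean of that channel's samples in
    the surrounding 3x3 window (which is exactly bilinear interpolation)."""
    h, w = len(raw), len(raw[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            sums, counts = [0.0, 0.0, 0.0], [0, 0, 0]
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        c = bayer_channel(yy, xx)
                        sums[c] += raw[yy][xx]
                        counts[c] += 1
            est = []
            for c in range(3):
                if c == bayer_channel(y, x):
                    est.append(raw[y][x])  # measured directly: keep the sample
                else:
                    est.append(sums[c] / counts[c])  # interpolated from neighbours
            row.append(tuple(est))
        out.append(row)
    return out
```

For example, `demosaic_bilinear(mosaic(image))` round-trips a 4×4 image of one flat colour back to that same colour at every pixel; fine detail and sharp edges are where interpolation errors, and the need for smarter algorithms, appear.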

Apart from increasing the number of photodetectors (one per pixel) on its image sensors, Sony borrowed the logic of PenTile Matrix displays for its camera sensors, replacing the RGBG filter with an RGBW filter. This essentially swaps one of the green sub-pixels in each block for a white (unfiltered) sub-pixel. Because the white sub-pixel lets through light of all colours, it collects more light, raising luminance across the image at the cost of slightly less colour information. The extra brightness data, combined with a tuned colour channel, can yield more accurate colours, better handling of light and better reproduction of fine detail within a single photo. Photographs taken with RGBW image sensors also carry colour detail well suited to the red, green and blue-sensing cone cells of the human eye, helping to balance colour, brightness and detail in the final image.
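The luminance advantage can be estimated with some back-of-the-envelope arithmetic. The sketch below rests on a deliberately idealised, hypothetical model: each colour filter passes exactly one third of the incoming photons, the white sub-pixel passes all of them, and photon shot noise (signal-to-noise ratio equal to the square root of the photon count) is the only noise source. Real filters overlap spectrally and real sensors add read noise, so treat the numbers as illustrative only.

```python
import math

# Idealised, hypothetical model: colour filters pass 1/3 of the photons,
# an unfiltered white sub-pixel passes all of them, and shot noise is the
# only noise source (SNR = sqrt(photon count)).
FILTER_TRANSMISSION = {"R": 1 / 3, "G": 1 / 3, "B": 1 / 3, "W": 1.0}


def block_photons(pattern, photons_per_pixel):
    """Total photons collected by one 2x2 block with the given sub-pixels."""
    return sum(FILTER_TRANSMISSION[p] * photons_per_pixel for p in pattern)


def luminance_snr(pattern, photons_per_pixel):
    """Shot-noise-limited SNR of the block's summed (luminance) signal."""
    return math.sqrt(block_photons(pattern, photons_per_pixel))


rgbg = luminance_snr("RGBG", 900)  # 4 x 300 = 1200 photons
rgbw = luminance_snr("RGBW", 900)  # 3 x 300 + 900 = 1800 photons
print(f"RGBG: {rgbg:.1f}  RGBW: {rgbw:.1f}  gain: {rgbw / rgbg:.2f}x")
```

Under these assumptions the RGBW block gathers 1.5 times the light of the RGBG block, which works out to roughly a 1.22x improvement in luminance signal-to-noise ratio.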

Sony’s IMX3– sensors, notably those used in the iPhone 6s and Oppo R7 Plus, are equipped with RGBW technology to capture better colour detail. Shortly after Sony, Samsung unveiled its BRITECELL sensors, which work on the same principle. With better focussing technology being developed, RGBW filter image sensors are a perfect match for PDAF or Samsung’s Dual-Pixel technology, explained below.

Dual-Pixel by Samsung – Phase Detection for every pixel

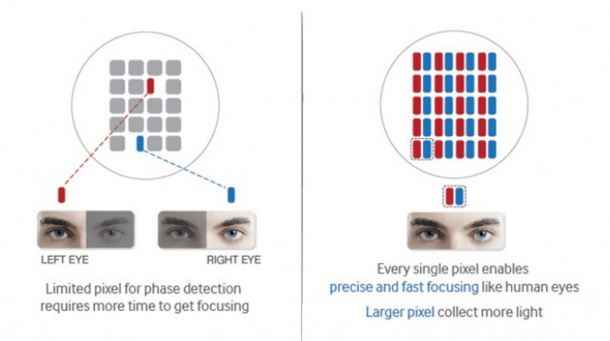

Dual-Pixel is essentially a Phase Detection Autofocus (PDAF) mechanism, only considerably faster and more capable. While a number of smartphone cameras advertise PDAF, what they typically use is a hybrid autofocus mechanism that combines contrast-detection AF with PDAF. In traditional PDAF, only about 5-10% of the photodiodes on an image sensor act as phase-detection points for tracking focus on a subject. While this works well, Samsung has gone further and uses every pixel on the sensor for phase detection autofocus.

To illustrate, conventional PDAF uses a small number of paired photodiodes that see the scene through the left and right halves of the lens, much like a separate left and right eye. Comparing the two views reveals whether the lens is out of focus, and in which direction. In Dual-Pixel technology, every pixel is split into two photodiodes, so the entire sensor gets its own left and right views, and the two halves work in tandem to lock onto the subject, mimicking the way our two eyes work together. This leads to much faster focusing and, coupled with RGBW filter image sensors, could deliver performance beyond anything we have seen on smartphones so far.
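The core of phase detection is measuring how far apart the left and right views are. The sketch below is a simplified illustration, not Samsung’s implementation: it assumes two hypothetical one-dimensional brightness profiles from the left and right photodiodes and finds the shift that best aligns them by minimising the mean absolute difference. A shift of zero means the subject is in focus; the size and sign of a non-zero shift indicate how far, and in which direction, the lens should move. The conversion from shift to lens movement would come from per-device calibration, which is not modelled here.

```python
# Simplified phase-detection sketch: find the shift between the left and
# right views of an edge. Inputs are hypothetical 1D brightness profiles.

def phase_disparity(left, right, max_shift):
    """Return the shift s (in pixels) that best aligns right[i + s] with
    left[i], chosen by minimising the mean absolute difference."""
    n = len(left)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        cost, count = 0.0, 0
        for i in range(n):
            j = i + s
            if 0 <= j < n:  # compare only where the two views overlap
                cost += abs(left[i] - right[j])
                count += 1
        cost /= count  # mean, so large shifts with small overlaps are not favoured
        if cost < best_cost:
            best_cost, best_shift = cost, s
    return best_shift


left = [0.0] * 10 + [1.0] * 10   # an edge seen through the left half of the lens
right = [0.0] * 13 + [1.0] * 7   # the same edge, displaced by defocus
print(phase_disparity(left, right, max_shift=5))  # → 3
```

Because a single pair of readings already gives both the magnitude and the direction of the focus error, the lens can be driven straight towards focus, whereas contrast-detection AF has to hunt back and forth; having such pairs at every pixel, as Dual-Pixel does, supplies many more of these measurements across the frame.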

4-axis OIS on smartphones

Xiaomi has included 4-axis optical image stabilisation on the Mi 5. Yet another technology trickling down from dedicated cameras to smartphones, 4-axis OIS goes beyond conventional 2-axis OIS, which compensates for angular shake (pitch and yaw), by also correcting translational shift along the horizontal and vertical axes. This gives steadier photos and video, and should help the camera perform better in low light than one using 2-axis OIS, since it can hold a slower shutter speed without blur.
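Why the extra axes matter can be shown with basic thin-lens geometry. Tilting the camera by an angle θ shifts the image on the sensor by about f·tan θ, while moving the camera sideways by t shifts it by t·f/(u − f) for a subject at distance u, so translational shake matters most at close range. The sketch below converts both into pixels of blur; the 4.0 mm focal length, 1.12 µm pixel pitch and shake amounts are hypothetical values chosen for illustration, not the Mi 5’s specifications.

```python
import math

# Hypothetical optics for illustration only.
FOCAL_LENGTH_MM = 4.0
PIXEL_PITCH_UM = 1.12


def blur_from_rotation(angle_deg):
    """Image shift in pixels from tilting the phone (pitch/yaw): d = f * tan(theta)."""
    shift_mm = FOCAL_LENGTH_MM * math.tan(math.radians(angle_deg))
    return shift_mm * 1000 / PIXEL_PITCH_UM


def blur_from_translation(shift_mm, subject_distance_mm):
    """Image shift in pixels from moving the phone sideways by shift_mm:
    d = t * m, with thin-lens magnification m = f / (u - f)."""
    magnification = FOCAL_LENGTH_MM / (subject_distance_mm - FOCAL_LENGTH_MM)
    return shift_mm * magnification * 1000 / PIXEL_PITCH_UM


print(f"0.1 deg tilt:          {blur_from_rotation(0.1):.1f} px")
print(f"0.5 mm shift at 10 cm: {blur_from_translation(0.5, 100):.1f} px")
print(f"0.5 mm shift at 2 m:   {blur_from_translation(0.5, 2000):.1f} px")
```

With these assumed values, a 0.1° tilt smears the image by about 6 pixels, and a 0.5 mm sideways wobble costs about 19 pixels for a subject 10 cm away but less than one pixel at 2 m. Correcting only angular shake therefore leaves close-up shots exposed to translational blur.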

Deep Trench Isolation on image sensors

As each pixel’s photodiode absorbs light, some photons (and the electrons they generate) stray across its edges into neighbouring pixels. This leakage, known as crosstalk, blurs detail and muddies colour. Deep Trench Isolation etches deep trenches between individual pixels and fills them with insulating material that blocks this leakage, keeping each pixel’s signal clean and improving imaging performance. Once again, Xiaomi has applied this to its latest flagship, the Mi 5.
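A toy model shows how crosstalk eats into contrast. In this sketch each pixel is assumed to leak a fraction k of its signal to each of its four neighbours (edges wrap around to keep the code short), and a lower k stands in for the better isolation that deep trenches provide. The leakage values 0.03 and 0.005 are hypothetical, not measurements from any real sensor. For a black-and-white checkerboard the model predicts a Michelson contrast of exactly 1 − 8k.

```python
# Toy crosstalk model: each pixel keeps (1 - 4k) of its signal and leaks k
# to each of its four neighbours. Leakage values are hypothetical.

def apply_crosstalk(img, k):
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] += img[y][x] * (1 - 4 * k)  # signal that stays put
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                # signal that leaks into a neighbour (edges wrap around)
                out[(y + dy) % h][(x + dx) % w] += img[y][x] * k
    return out


def michelson_contrast(img):
    """(max - min) / (max + min) over the whole image."""
    hi = max(max(row) for row in img)
    lo = min(min(row) for row in img)
    return (hi - lo) / (hi + lo)


checkerboard = [[float((x + y) % 2) for x in range(8)] for y in range(8)]
for k in (0.03, 0.005):  # stronger vs weaker leakage between pixels
    print(k, round(michelson_contrast(apply_crosstalk(checkerboard, k)), 3))
```

Under these assumptions, cutting leakage from 3% to 0.5% per neighbour lifts checkerboard contrast from 0.76 to 0.96, which is the kind of fine-detail gain that pixel isolation aims for.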

P.S. We cannot wait to get our hands on it.

Combined, these hardware trends have the potential to take smartphone photography a crucial step forward, aided by wider apertures (f/1.7 on the Samsung Galaxy S7/S7 Edge) for better low-light performance, superior stabilisation and more true-to-life colours than smartphones have offered before. With a steady inflow of dedicated camera technology trickling down to smartphones, we may yet witness the day when a smartphone decidedly outperforms a dedicated camera worth its salt.
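The low-light benefit of a wider aperture follows from a simple rule: the light reaching the sensor scales with 1/N², where N is the f-number. The sketch below compares the Galaxy S7’s f/1.7 against a hypothetical f/2.0 lens chosen purely for illustration.

```python
import math


def light_gain(f_number_wide, f_number_narrow):
    """How much more light the wider aperture admits (light scales as 1/N^2)."""
    return (f_number_narrow / f_number_wide) ** 2


def stops(f_number_wide, f_number_narrow):
    """The same gain in photographic stops (each stop doubles the light)."""
    return math.log2(light_gain(f_number_wide, f_number_narrow))


print(f"{light_gain(1.7, 2.0):.2f}x, {stops(1.7, 2.0):.2f} stops")
```

Against that hypothetical f/2.0 lens, f/1.7 admits about 1.38 times as much light, roughly half a stop, which can go towards a faster shutter speed or a lower ISO in dim scenes.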

Game on, it seems.