Will robots ever cry?

Bots are cruel and heartless, following cold hard logic. But can they ever be programmed to understand emotion, feelings and other sentimental stuff? Let’s find out...

In 1837 Charles Babbage came up with the Analytical Engine, arguably the first machine design to contain all the essential elements of a modern computer. Babbage’s machine was designed to compute, crunch numbers and perform calculations. Today the landscape of computing has undergone a paradigm shift. Sure, we still have fast, mean machines designed purely for number crunching, but computers can now do so much more.

Traditionally, the dividing line between humans and computers has been the human ability to process information in order to adapt and learn. But artificial intelligence, built on the bedrock of Turing’s and von Neumann’s work, can now simulate human behaviour: machines can absorb and store information and even make sense of it. This ability to learn has made machines adept at image recognition, speech and text recognition, and even games like chess and Go. But a question that has been plaguing scientists and futurists alike for some time is: can machines understand emotion?

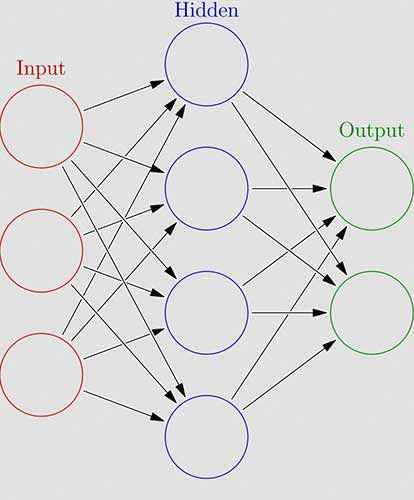

Machine learning: Artificial Neural Networks

Before we move on to what understanding emotion is and will mean, let’s talk a bit about the techniques ‘intelligent’ machines currently use. To give computers intelligence, researchers look to a structure that has time and again proven itself a pinnacle of complexity and learning ability: the human brain. Nestled within our brains is a multitude of neurons, each of which interacts and communicates with others in an intricate fashion to give us memory and processing abilities. But every CPU has memory and processing components too. What makes a human brain radically different from the circuitry on a motherboard is that its neurons are networked in a way that lets them not only respond to stimuli but also continually learn from incoming information.

So scientists loosely replicated the workings of the human brain at an algorithmic level, and that led to the birth of the Artificial Neural Network (ANN). An ANN essentially studies a large set of input-output pairs to train itself, much like a child learns to identify an apple by seeing one a number of times. Once the network is trained, it can map a new input to an output. Over time, thanks to feedback on its mistakes, and much like ourselves, the network corrects its errors and gets better at dealing with inputs that weren’t part of the original training set.

A schematic of the nodes representing neurons in a simple neural network architecture
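To make “train on input-output pairs, then correct errors through feedback” concrete, here is a minimal sketch of a perceptron, the classic single-neuron ancestor of today’s networks. It uses the perceptron learning rule rather than the backpropagation that deep networks rely on, and the two features (“redness” and “roundness”) and every data point are invented purely for illustration.

```python
# A minimal perceptron: one artificial "neuron" that learns from labelled examples.
# Features and data are invented for illustration only.

# Each sample: ((redness, roundness), label), where 1 = "apple" and 0 = "not an apple".
SAMPLES = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.2, 0.9), 0),  # round but not red
    ((0.9, 0.1), 0),  # red but not round
    ((0.1, 0.2), 0),
]


def predict(weights, bias, features):
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train(samples, learning_rate=0.1, max_epochs=1000):
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in samples:
            error = label - predict(weights, bias, features)  # the feedback signal
            if error:
                mistakes += 1
                # Nudge each weight in the direction that reduces the error.
                weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass without errors: training has converged
            break
    return weights, bias


weights, bias = train(SAMPLES)
print(predict(weights, bias, (0.85, 0.85)))  # an input not in the training set → 1
```

Because this toy data can be split by a straight line, the perceptron is guaranteed to stop making mistakes on it; real image data needs many layers of such units.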

This process can be broken down with a simple example. Any digital image is made of numbers: bytes representing color intensity values, for example. An image of a red ball will therefore have a certain input matrix of numbers, and the fact that it is a ball can be represented by another unique marker matrix. The job of an ANN is to break an image down into raw data (an input function) and, after processing that information through its vast sieve of hidden layers, construct a corresponding output function. The output function tells the ANN whether the input corresponds to a ball or a cat.
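The pipeline just described (image as a matrix of numbers, hidden layers, an output that says “ball” or “cat”) can be sketched as a single forward pass. This is a hedged illustration, not a working classifier: the 3×3 “image” is hypothetical and the weights are random placeholders that a real network would learn during training, so the printed probabilities carry no meaning beyond showing the shape of the computation.

```python
import math
import random

LABELS = ["ball", "cat"]


def flatten(image):
    """Turn a 2-D matrix of pixel intensities into a 1-D input vector."""
    return [pixel for row in image for pixel in row]


def dense(inputs, weights, biases):
    """One fully connected layer: each row of `weights` is one neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    """Convert raw output scores into probabilities that sum to 1."""
    peak = max(values)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(n_in, n_out, rng):
    # Placeholder weights: a trained network would have learned these values.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


rng = random.Random(42)
image = [  # hypothetical 3x3 grayscale image: 0 = black, 255 = white
    [0, 200, 0],
    [200, 255, 200],
    [0, 200, 0],
]
x = [p / 255 for p in flatten(image)]       # input layer: 9 values in [0, 1]
w1, b1 = random_layer(9, 4, rng)             # hidden layer: 4 neurons
w2, b2 = random_layer(4, len(LABELS), rng)   # output layer: one score per label
probs = softmax(dense(relu(dense(x, w1, b1)), w2, b2))
print(dict(zip(LABELS, probs)))
```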

The same fundamental idea underpins many deep learning systems. A chess move can be evaluated by comparing the ‘value’ of its eventual outcomes, and thus a machine can indeed learn to play and even master chess. Of course, the task is not as easy as this description makes it sound, but there’s an interesting takeaway from all of this.
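One classic way to “compare the value of eventual outcomes” is minimax search, sketched below on a tiny hand-built game tree. This is an illustration of the general idea, not a description of how any particular chess program works; the tree and its outcome values are invented, and real engines add evaluation functions, pruning or learned networks on top.

```python
def minimax(node, maximizing):
    """Value of a position, assuming both sides play their best.

    A leaf is a number (the outcome's value for us); an inner node is a
    list of the positions reachable in one move.
    """
    if isinstance(node, (int, float)):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def best_move(tree):
    # After each of our candidate moves, the opponent replies to minimize our value.
    scores = [minimax(child, maximizing=False) for child in tree]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


GAME_TREE = [       # hypothetical outcome values after our move and the opponent's reply
    [3, 12, 8],     # move 0
    [2, 4, 6],      # move 1
    [14, 5, 2],     # move 2
]
print(best_move(GAME_TREE))  # (0, [3, 2, 2])
```

Move 2 contains the single best outcome (14), but a sensible opponent would answer it with the reply worth 2, so minimax prefers move 0, whose worst case is 3.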

Machines today can teach themselves, provided that what they have to learn is in a ‘language’ they understand: data in the form of numbers, ultimately broken down into bytes and bits of ones and zeros. This is where we can pose the question: can human emotion be represented by a matrix of numbers? Can we truly capture the intricacy and subjectivity of something as elemental as emotion in a number? And do we really need to?
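What would “emotion as a matrix of numbers” even look like? A naive sketch, assuming we borrow psychologist Robert Plutchik’s eight primary emotions as the axes, encodes an emotional state as a vector of intensities and compares states with cosine similarity. The intensities below are invented, and the very reductiveness of this encoding is the problem the questions above raise.

```python
import math

# Plutchik's eight primary emotions, used here as the axes of a vector.
PLUTCHIK_PRIMARY = ("joy", "trust", "fear", "surprise",
                    "sadness", "disgust", "anger", "anticipation")


def encode(intensities):
    """Turn {emotion: intensity} into a fixed-length vector (missing = 0)."""
    unknown = set(intensities) - set(PLUTCHIK_PRIMARY)
    if unknown:
        raise ValueError(f"not a primary emotion: {sorted(unknown)}")
    return [float(intensities.get(name, 0.0)) for name in PLUTCHIK_PRIMARY]


def cosine(a, b):
    """1.0 = same direction (same 'mix' of emotions), 0.0 = nothing in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Invented intensities for two states that share only "surprise".
happy_surprise = encode({"joy": 0.8, "surprise": 0.6})
shock = encode({"fear": 0.7, "surprise": 0.9})
print(round(cosine(happy_surprise, shock), 2))  # 0.47
```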

What’s a machine’s EQ?

Emotional intelligence, usually shortened to EQ, is broadly defined as the ability to recognise your own and other people’s emotions and to use them to guide your thinking and interactions. For machines to have emotional intelligence, they must be able to read and differentiate between emotions and respond appropriately. A technique called natural language processing allows machines to interpret text and understand its meaning. Image processing tools have developed hand in hand with massive computing power and give machines the ability to ‘see’.

But what about the subtext? What about the underlying emotion behind a certain input? Unfortunately, machines are no good at that, at least not yet. For a machine to be classed as emotionally intelligent, it must not only interpret or read an input but also estimate what the input signifies and what makes it different from other inputs. Humans are adept at sensing emotion. When someone replies “No!”, our brain registers it as an English word with a meaning, but we also understand the difference between a no said in exasperation and one that is a curt refusal. We respond according to the emotion we recognise.
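The “No!” example has a simple computational consequence, sketched below: if two inputs look identical to a system, any rule it applies must treat them identically. Text alone cannot separate the two replies; adding cues from the voice can. The `Utterance` type and its pitch and loudness numbers are hypothetical, chosen only to show that the replies differ in how they are said.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Utterance:
    text: str
    pitch_hz: float     # hypothetical mean pitch of the utterance
    loudness_db: float  # hypothetical mean loudness


def text_only(u):
    """What a purely text-based system sees."""
    return (u.text.strip().lower(),)


def text_and_voice(u):
    """Text plus two prosodic cues taken from the audio."""
    return (u.text.strip().lower(), u.pitch_hz, u.loudness_db)


# Invented values: same words, different delivery.
exasperated = Utterance("No!", pitch_hz=260.0, loudness_db=72.0)
curt = Utterance("No!", pitch_hz=140.0, loudness_db=60.0)

print(text_only(exasperated) == text_only(curt))            # True: indistinguishable
print(text_and_voice(exasperated) == text_and_voice(curt))  # False: now separable
```

Separable inputs are only a precondition: a system would still have to learn which pitch and loudness patterns signal exasperation, which is exactly the hard part.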

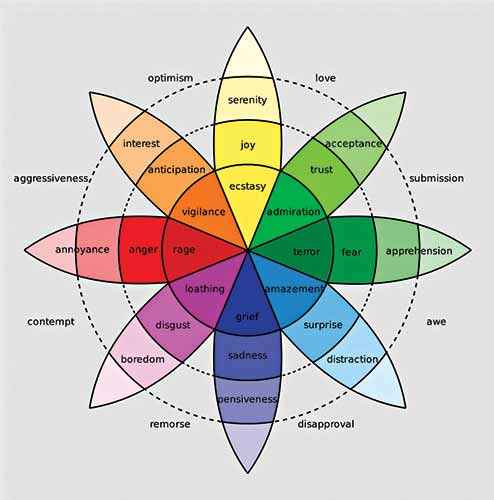

Unlike language, which has been shown to follow certain structures, or images, which are essentially matrices, emotions are not simple constructs and are thus not easily amenable to the mathematical treatment that scientists so like to mete out.

So is the quest to develop emotionally intelligent machines a hopeless one? Maybe not.

Can today’s machines grasp emotion?

The advances made in computer vision, combined with decades of research in artificial intelligence, have made the dream of emotionally intelligent machines, a field dubbed affective computing, a possible one. Image recognition software today is powerful and smart enough to recognise traditional markers of emotion like smiles, sulks and frowns. These facial features, often general in nature, are tracked and mapped onto a certain set of emotions. This sort of non-verbal expression tracking has been used successfully to study how receptive students are to MOOCs and to gather feedback for improving online course content.
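The “track markers, then map them onto emotions” step can be sketched as a lookup plus a per-video summary, such as the share of frames in which a student appeared to smile. Everything here is an assumption for illustration: the marker names, the marker-to-emotion table and the 0.5 threshold are hypothetical, and the marker scores are assumed to come from some upstream face tracker not shown.

```python
from collections import Counter

# Hypothetical table of simple correspondences between facial markers and emotions.
MARKER_TO_EMOTION = {
    "smile": "happiness",
    "frown": "dissatisfaction",
    "sulk": "sadness",
    "open_mouth": "disbelief",
}


def label_frame(marker_scores, threshold=0.5):
    """Map one video frame's marker scores (0 to 1) to a single emotion label."""
    detected = {m: s for m, s in marker_scores.items()
                if m in MARKER_TO_EMOTION and s >= threshold}
    if not detected:
        return "neutral"
    strongest = max(detected, key=detected.get)  # the most confident marker wins
    return MARKER_TO_EMOTION[strongest]


def summarise(frames):
    """Fraction of frames showing each emotion, e.g. across a lecture video."""
    counts = Counter(label_frame(f) for f in frames)
    return {emotion: n / len(frames) for emotion, n in counts.items()}


frames = [  # invented tracker output for four frames
    {"smile": 0.9, "frown": 0.1},
    {"smile": 0.7},
    {"frown": 0.8, "open_mouth": 0.6},
    {"smile": 0.2},
]
print(summarise(frames))
# {'happiness': 0.5, 'dissatisfaction': 0.25, 'neutral': 0.25}
```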

Psychiatric studies have managed to track depression in patients using the very same approach of non-verbal behaviour tracking. The relevance of facial recognition goes beyond the specific applications mentioned here. If systems can recognise emotions through facial tracking, this could lead to novel methods of medical diagnosis. Educational technology, especially for young children, who are more emotive, could be bolstered by animations designed to change in real time in sync with the child’s emotions.

Psychologist Robert Plutchik’s wheel of emotion, an effort to simplify the concept of emotion

Emotions can also be tracked from voice data. Human speech is full of markers of emotion: pitch, volume and tone, to name a few. While very basic voice-based emotion tracking has existed in tele-support systems, recent advances in machine learning have made it possible to develop more sophisticated and robust systems. The possibilities that voice-based emotion tracking opens up are numerous, to say the least.
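Two of the markers named above, volume and pitch, can be measured from raw audio samples with a few lines of arithmetic. The sketch below uses root-mean-square amplitude as a loudness proxy and a plain autocorrelation search as a pitch estimator; both are simple textbook choices rather than what any particular product uses, and the synthetic 200 Hz tone stands in for a real voiced speech frame. An emotion tracker would feed features like these into a trained classifier.

```python
import math


def rms(frame):
    """Root-mean-square amplitude: a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def estimate_pitch(frame, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate the fundamental frequency (Hz) by autocorrelation.

    A periodic signal lines up best with a copy of itself shifted by one
    period, so we look for the lag with the largest correlation among lags
    that correspond to plausible voice pitches between fmin and fmax.
    """
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        corr = sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None


# Synthetic test tone standing in for a voiced speech frame (0.1 s at 8 kHz).
SAMPLE_RATE = 8000
tone = [0.5 * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE) for n in range(800)]
print(round(rms(tone), 3), estimate_pitch(tone, SAMPLE_RATE))  # 0.354 200.0
```

Real speech is noisier than a pure tone, so practical systems use more robust pitch estimators, but the principle of searching for the repeating period is the same.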

Voice-based assistants in our phones could be transformed from useful tools into indispensable ones. Imagine a scenario in which Siri can not only tell you where the nearest hospital is but also sense the urgency in your tone and take the necessary action. Given the rapid development of mobile computing hardware, it is not too far-fetched to envision a future in which a humble phone comes with a voice assistant that can sense emotion, converse, make decisions and, on the back of a powerful ANN, actually learn from its conversations. Companies like Google are at the forefront of this new and exciting subset of AI.

Moving beyond voice- and expression-based systems, even simple text can sometimes give away emotions. For a company that centres its business on a search engine, this is a big deal. Figuring out the actual context and intent behind a search query has attracted much attention of late, especially since Google announced its transition to the Hummingbird algorithm, triggering a buzz around what is now called semantic search. All the above technologies, impressive as they may seem, don’t cut an intimidating figure as of now; they only conjure up an image of a drab computer in a dingy room going about its business. How far are we from humanoids that combine the systems described above, with the ability to sense, understand and even replicate emotion?
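The crudest way to read emotion from text is a lexicon lookup: count words that are associated with particular emotions, with a rough guard against negation. The sketch below assumes a tiny, invented lexicon (real emotion lexicons hold thousands of entries) and shows how shallow such an approach is compared with the intent-aware understanding semantic search aims for.

```python
import re
from collections import Counter

# Tiny, invented lexicon for illustration only.
LEXICON = {
    "thrilled": "joy", "love": "joy", "great": "joy",
    "terrified": "fear", "scared": "fear",
    "furious": "anger", "hate": "anger",
    "miss": "sadness", "lonely": "sadness",
}
NEGATIONS = {"not", "never", "no"}


def detect_emotions(text):
    """Count emotion words in `text`, skipping any directly after a negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for i, token in enumerate(tokens):
        emotion = LEXICON.get(token)
        if emotion is None:
            continue
        if i > 0 and tokens[i - 1] in NEGATIONS:
            continue  # crude: simply ignore a negated emotion word
        counts[emotion] += 1
    return counts


print(detect_emotions("I love this, I'm thrilled!"))  # Counter({'joy': 2})
print(detect_emotions("I am not scared"))              # Counter()
```

Sarcasm, context and anything phrased without the listed words slip straight past a lookup like this, which is why learned models have largely replaced it.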

As far back as 2010, European scientists developed a version of the humanoid robot Nao that could learn from human interaction, sense mood and even develop a personality of its own. Last year SoftBank unveiled Pepper, claiming it to be the world’s first emotionally intelligent robot. With big players now involved, it may not be long before we see improved variants that employ far superior techniques to the ones currently in use. The rise of machines that can recognise and respond to emotions could, however, have some troubling consequences too. How close are we to creating a sentient AI that goes all Terminator on us?

Pepper bot in a retail environment

The difficulty in programming emotional machines

Luckily (or not, depending on which side of the debate you are on), current technology is far from recognising or replicating human emotion in all its glory. Ultimately, almost every machine learning system relies on finding patterns within the vast amounts of input data it receives. Current machines are good at parsing pixel data, text or sound to find patterns within them. A machine can see pictures of smiling people and correlate them with a happy group, but what happens when this correlation fails because one or more of those people were faking the emotion?

Current models of emotion detection largely depend on sensing physical markers of emotion: a frown might correspond to dissatisfaction and an open mouth to disbelief. But human emotion is far too complex to lend itself to this treatment. A machine can detect a quickened pulse and match it to a state of fear. But what if the heightened state is excitement or love?

A recent study asserts that when computers learn human languages, they also pick up the wide range of biases that come with them. Emotion as a concept doesn’t generalise easily, which makes modelling it a headache for scientists. Then there is the problem of replicating human emotion. It might seem that expressing emotion is simply a matter of coordinated muscle movement, but even that is a difficult act to pull off in a machine. What’s more, machines also need to avoid the ‘uncanny valley’, the sense of discomfort that settles in when humans see ‘humanlike’ behaviour in something that is obviously not human.

Last but not least, if affective computing is truly to trickle down into our daily lives, hardware will need an overhaul. Machine learning algorithms are power- and memory-intensive, and deep learning tools require massive computing power. Our chips need to consume less power, store more in less space and work faster.
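A back-of-the-envelope calculation shows where the memory goes. Each fully connected layer with `n_in` inputs and `n_out` neurons stores `n_in * n_out` weights plus `n_out` biases. The layer sizes below are hypothetical (784 inputs, as for a 28 × 28 pixel image, two hidden layers of 512 neurons and 10 outputs), the count covers stored weights only, not activations or training-time bookkeeping, and 32-bit floats (4 bytes each) are assumed.

```python
def dense_param_count(layer_sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def weight_memory_bytes(layer_sizes, bytes_per_param=4):
    """Memory for the parameters alone (4 bytes = one 32-bit float)."""
    return dense_param_count(layer_sizes) * bytes_per_param


LAYERS = [784, 512, 512, 10]  # hypothetical network
print(dense_param_count(LAYERS), weight_memory_bytes(LAYERS))  # 669706 2678824
```

Even this small network needs about 2.7 MB just for its weights; modern deep networks have orders of magnitude more parameters, which is why halving the bytes per parameter (`bytes_per_param=2`) or designing leaner chips matters for putting such models in everyday devices.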

A humanlike robot (Repliee) usually triggers high neural activity due to a phenomenon called the uncanny valley

AIs have always captured the fancy of writers and scientists alike. In one form or another, AIs, even emotional ones, have appeared in pop culture for quite some time. It comes as no surprise that a robot we could talk to, as we would with our human friends, sounds like a natural extension of ordinary robots and generally seems like a good idea. But making a machine so close to a human that it even feels like one could have repercussions that are chilling to even think about.

If a machine can anticipate our actions, it might even influence them. And if we grant an inanimate object the power to animate, we are giving birth to a whole new species, an intelligent one at that. An emotionally intelligent AI might very well be great for us. But if literature and film mirror our future, we may be better off without a future riddled with emotionally intelligent machines.