Origins and the history of Input Devices

From punch cards to virtual hands, input devices have come a long way. Let us find out how.

When asked to picture a computer, there is possibly nobody who will do so without including the ubiquitous keyboard and mouse. In fact, on hearing the word “mouse”, many of us would probably recall the peripheral first and the rodent later; a quick Google search will reveal how true this is. But computers weren’t invented with input devices in mind, at least not the ones we use now. With the ease of gliding our fingers across touchscreens or talking directly to our devices, it is very easy to forget how far input devices have evolved in the century or so since computing devices were introduced. From punch cards to motion detection, let us see how we arrived here.

Just the right buttons

Punched cards have been around since the early 1800s in the form of Jacquard loom cards. Although there were other early ways to input information into a computing device, such as switches and dials, the key punch and the punch card were the first methods to see widespread use for entering, storing and retrieving data. Around 1890, Herman Hollerith developed the first key punch device to tabulate the results of the 1890 Census in the USA. His cards were the ancestors of the 80-column punch card that IBM standardised in 1928, a number that lived on for decades in the form of 80-column computer screens. The company Hollerith created later became part of IBM.

Going a step further, combinations of punches in a single column could represent letters and numbers, which were also printed along the top of the card above each column so that the cards could be read by eye. Re-programming or re-typing involved removing and rearranging cards, or sometimes inserting entirely new ones into the sequence.
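To make the idea of multi-punch columns concrete, here is a minimal sketch in Python. It assumes the zone-and-digit scheme used on later IBM 80-column cards (rows 12, 11 and 0 act as zone rows, rows 1 to 9 as digit rows); the article does not say which code was used, and the function names `punches_for` and `encode_card` are made up for illustration.

```python
from typing import List, Tuple

CARD_COLUMNS = 80  # assumed card width (later IBM format)


def punches_for(ch: str) -> Tuple[str, ...]:
    """Return the rows punched in one column for a single character."""
    ch = ch.upper()
    if len(ch) != 1:
        raise ValueError("expected a single character")
    if ch in "0123456789":
        return (ch,)                                # digits: one punch
    if "A" <= ch <= "I":
        return ("12", str(ord(ch) - ord("A") + 1))  # zone 12 + digit 1-9
    if "J" <= ch <= "R":
        return ("11", str(ord(ch) - ord("J") + 1))  # zone 11 + digit 1-9
    if "S" <= ch <= "Z":
        return ("0", str(ord(ch) - ord("S") + 2))   # zone 0 + digit 2-9
    if ch == " ":
        return ()                                   # blank column: no punches
    raise ValueError(f"no punch code in this sketch for {ch!r}")


def encode_card(text: str) -> List[Tuple[str, ...]]:
    """Encode a line of text as a list of columns, one per character."""
    if len(text) > CARD_COLUMNS:
        raise ValueError("text does not fit on one card")
    return [punches_for(ch) for ch in text]
```

Calling `encode_card("IBM 80")` yields one tuple of punched rows per column, with the space left as an unpunched column. Punctuation is left out of this sketch on purpose.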

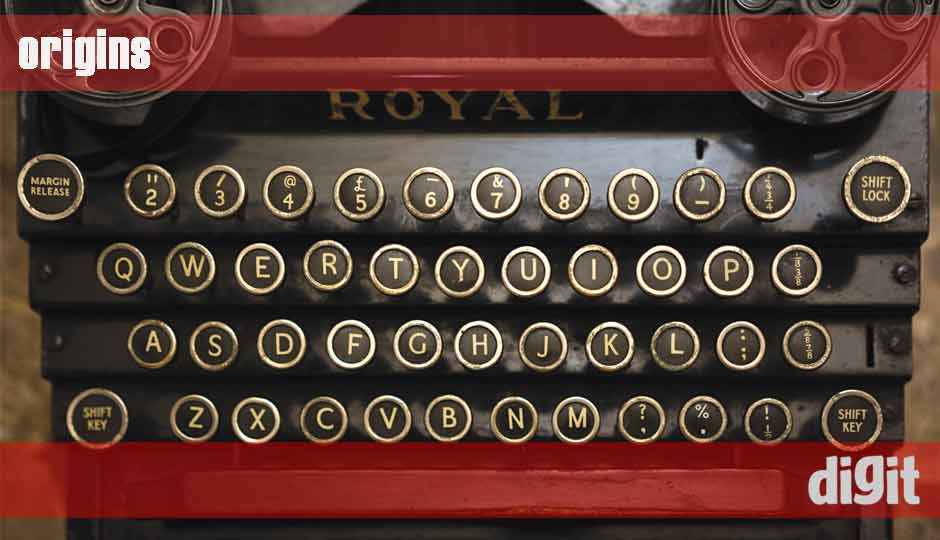

Derived mainly from the teleprinters and key punches of yesteryear, keyboards reduced the task of correcting input to simply re-keying a statement, compared with the complete removal and reinsertion of cards in the punch card system. The initial design inherited the QWERTY layout from typewriters, and it was never really changed, although alternatives like the Dvorak layout claim better speed and lower fatigue. In fact, the current layout has a number of obvious shortcomings: many common words (was, were) are typed with a single hand, the left hand does more of the work, and letters that are frequently used together are not placed close to each other. In spite of all this, the alternatives never really caught on.

In fact, vestiges of the keyboard’s pre-mouse days are still evident on most present-day keyboards. Case in point: the Scroll Lock key. Have you seen anyone actually use it to scroll anymore? Another example is the System Request (SysRq) key, a holdover from the mainframe days.

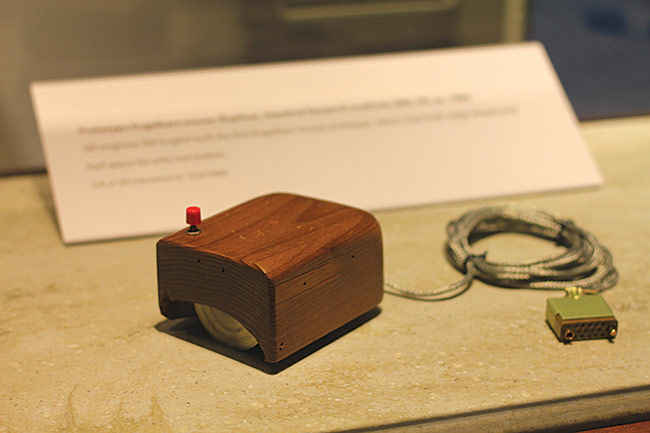

A widely accepted rodent

The technical innovation behind the computer mouse was in place more than 50 years ago. Douglas Engelbart and his lead engineer Bill English built their first working mouse prototype in the 1960s in their lab at the Stanford Research Institute (SRI). They named it the mouse because of the cord extending from the back of the device, which looked like a tail. The idea had evolved from earlier work on the trackball, which combined a rolling ball with multiple rolling discs. Whenever the ball was rolled, the connected discs turned and generated signals at wire contacts inside the device. Each contact conveyed a certain movement to the digital computer, which used it to plot the position of the pointer on the display.
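The last step, turning a stream of movement signals into a pointer position, can be sketched in a few lines of Python. This is only an illustration: the function name `track_pointer`, the screen size and the idea of receiving movement as `(dx, dy)` pairs are assumptions, not a description of the original hardware.

```python
def track_pointer(deltas, width=640, height=480, start=(0, 0)):
    """Accumulate relative movements into an on-screen pointer position.

    deltas is an iterable of (dx, dy) steps reported by the device.
    The pointer is clamped so that it never leaves the screen.
    """
    x, y = start
    for dx, dy in deltas:
        x = min(max(x + dx, 0), width - 1)
        y = min(max(y + dy, 0), height - 1)
    return x, y
```

The device only ever reports how far it moved, never where it is, which is why lifting a mouse and putting it down elsewhere leaves the pointer where it was.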

The Engelbart mouse

It was many years later that the mouse was famously used with the Xerox Alto at the Xerox Palo Alto Research Centre (PARC). In 1979, this very same PARC lab was (now famously) toured by Steve Jobs, and it is said to have been the source of inspiration for the mouse-driven interface that Apple eventually developed for the highly successful Macintosh computers released in the 1980s (and, as some would say, for the Microsoft Windows interface as well).

Ball mice were made affordable by using a rubber tracking ball that worked in a way similar to the original trackball technology. Most mouse designs have used either a two-button or a three-button interface, with the exception of Apple, which stayed with a simplified one-button interface for many years. Further developments saw the inclusion of a scroll wheel, extra buttons and, in some models, even gyroscopes that detected hand waves.

A touching moment

Many of us would be surprised to know that touch and tablet-based interfaces were actually invented before the modern-day mouse. In the 1950s and 60s, such devices were mostly used to input graphical data into computers, much like modern-day digital pens. In fact, Apple had its own tablet interface way back in the late 1970s. The Apple Graphics Tablet, which was designed by Summagraphics and also marketed as the BitPad, was initially sold as an accessory for the Apple II personal computer. This device wasn’t a standalone tablet like today’s tablet PCs; it was an interface for graphical input on the Apple II. Interestingly, it predated the Apple II’s mouse by almost 6 years.

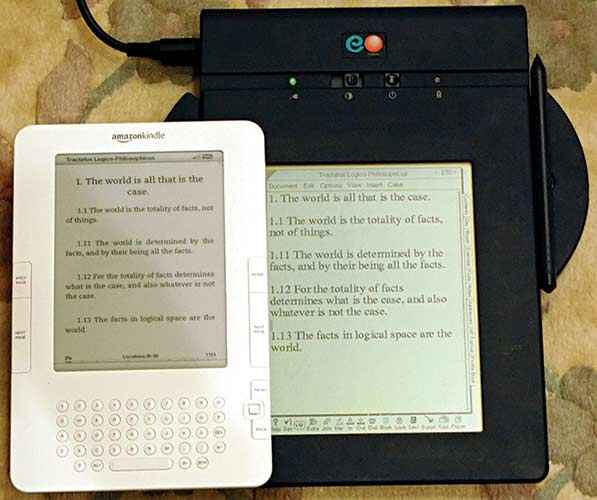

Fast forward to 1993 and we arrive at one of the most interesting devices on the touch front: the Eo Personal Communicator. It was a notebook-sized, full-screen touchscreen device that ran PenPoint OS, one of the first operating systems designed specifically for stylus input. Unfortunately, the company was shut down after it failed to meet revenue targets set by AT&T, which had recently acquired it.

The Eo Personal Communicator next to the Amazon Kindle

As components became smaller and more powerful, the desire for compact devices gave rise to Personal Digital Assistants, or PDAs. As touchscreens shrank, the mode of input had to be redesigned, since a full keyboard would no longer fit on a handheld PDA. This requirement gave rise to new and innovative methods of input. One such method, called Graffiti, was used by Palm in its PalmPilot range of products back in 1997. Each device had a touch-sensitive area that detected stylised characters drawn with the included stylus. There was also a tutorial game that taught players how to draw stylised versions of the characters falling down the screen.

The feature phones arrive

PDAs were somewhat expensive for general use and, with components shrinking further, there was an opportunity for feature phones to rise and become popular all around the world. This also gave rise to one of the most used forms of communication worldwide: texting. Since phones had to use physical buttons, it became a challenge to fit a full-featured keyboard into the space of a numeric keypad. Many methods were suggested, and the one that stuck is the one still seen on keypad phones. A single press of a key indicated the first letter on that key, two presses the second letter, and so on. As phone screens grew larger, this system grew more sophisticated with the inclusion of dictionaries on phones as well as the T9 system, which predicted words based on the keys being pressed.
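Both schemes described above are simple enough to sketch in Python. The version below assumes the standard letter layout printed on phone keypads; the function names, the space-separated input format and the tiny word list are hypothetical, and a real T9 implementation would also rank candidates by how common they are.

```python
# Letters printed on each key of a standard phone keypad.
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
# Reverse lookup: which key carries a given letter.
LETTER_TO_KEY = {ch: key for key, letters in KEYPAD.items() for ch in letters}


def decode_multitap(presses: str) -> str:
    """Decode multi-tap input such as '44 33 555 555 666'.

    Each space-separated group is one key pressed repeatedly; the number
    of presses selects the letter, wrapping around past the last one.
    """
    out = []
    for group in presses.split():
        key = group[0]
        if key not in KEYPAD or set(group) != {key}:
            raise ValueError(f"invalid key group: {group!r}")
        letters = KEYPAD[key]
        out.append(letters[(len(group) - 1) % len(letters)])
    return "".join(out)


def word_to_keys(word: str) -> str:
    """Return the single-press key sequence that spells a word."""
    return "".join(LETTER_TO_KEY[ch] for ch in word.lower())


def t9_candidates(keys: str, dictionary) -> list:
    """Return the dictionary words that match a key sequence."""
    return [word for word in dictionary if word_to_keys(word) == keys]
```

With multi-tap, `decode_multitap("44 33 555 555 666")` spells out "hello" in thirteen presses. With the dictionary approach, the four presses `4663` already narrow a word list down to candidates such as "good", "home" and "gone", which is why predictive input needed a way to cycle between matches.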

One of the unique innovations on this front came from BlackBerry, which included a full-featured keyboard on the phone with buttons large enough to be used with the thumbs. This keyboard is still very popular with frequent texters and is often included as a slide-out under the main body of the phone.

The BlackBerry Torch keyboard

Touch 2.0

While we already know that touchscreens are not a recent invention, the innovation that would become the next big breakthrough was still in the making a couple of decades ago. A pioneering gesture recognition company, FingerWorks, released a range of multi-touch products in 1998, including the iGesturePad and the TouchStream keyboard. The company was acquired by Apple in 2005.

Less than two years later, in 2007, Apple released the iPhone with the most innovative touchscreen technology anyone had seen, especially on a handheld device. The phone’s interface was completely touch based, with every part of the screen serving a function on every screen of the OS, including a complete virtual keyboard.

The widespread use of the stylus ended when Apple put a multi-touch capacitive touchscreen on the iPhone. It was highly sensitive and could detect several contacts at once, allowing gestures like pinch-to-zoom in the OS. The entire OS was designed to take advantage of this touchscreen, with completely new ways to use standard applications like the calculator, the camera and games. Before we realised it, gestures like swipe to scroll, inertial scrolling, long-press and press-and-drag had become commonplace on every touchscreen device, as had mechanisms like pattern locks, tap to focus in the camera and gesture-based commands. Apple changed phone standards overnight, and stylus-based touchscreens became obsolete almost immediately. This widespread adoption is still evident in the ongoing patent infringement lawsuits between Apple, Android phone manufacturers and Windows mobile vendors, all fighting over the same market.

| Virtual Reality |
|---|
| Virtual reality is widely seen as the next big computing interface. With its completely different approach to interaction, it calls for new methods of input. The HTC Vive uses controllers that are tracked by its Lighthouse sensors and onboard cameras to mimic hands in virtual reality. There are alternatives that can sense your hands directly as well. A combination of these sensors, along with changes to the platform, could very well make future input devices completely virtual too. |

The Interfaces of the Future

Many of us remember the announcement of Microsoft Surface, the tabletop gesture-based computing interface that felt like pure science fiction at the time. Today, it is widely used by major corporations for a variety of purposes. It is no surprise to see other manufacturers also bringing such touchscreens and tablets to tabletops and public installations, like the entertainment screens on flights or the ticket kiosks at stations.

Voice-based input almost plateaued in the 90s at about 80% accuracy. Recognition systems worked well for only a limited set of languages, and even then most of the results were statistical guesses. Windows Vista and Mac OS X included built-in voice recognition capabilities. This feature had to be set up with a separate microphone and could not yet match the ease of use of the keyboard and the mouse.

Innovation in speech recognition and voice commands came back to the forefront with Google’s Voice Search app for the iPhone. This development was highly significant for two main reasons. First, there was a strong incentive to provide alternative methods of input for phones, since the screen size limited the use of full-scale keyboards. Second, Google offloaded the actual computation of the recognition process onto its cloud infrastructure, harnessing that massive computing power to analyse the huge variety of human languages out there. To this computational power, Google added the data available from its billions of searches to predict what you’re probably saying.

Siri, best friend to many iPhone users

Gradually, Google added voice recognition to the entire Android ecosystem, and Google’s English voice search now draws on almost 230 billion words. Alongside that, Siri has definitely made a mark in speech recognition by adding personality to its responses. It uses what it knows about you to give contextual replies that are even funny from time to time. This level of speech recognition is no longer limited to phones, as many devices, including home automation hubs, now include speech recognition and at times even base their entire design on that feature. Pretty soon, you will be talking to your coffee maker and asking your speakers to keep it low.

This article was first published in the May 2016 issue of Digit magazine.