Moore’s Law: Fifty years of driving innovation

After Moore's proposition drove technology forward for more than 50 years, it's time for innovation to take a quantum leap

Here’s a thought: if the number of people in your house were to double every year, you would end up with 512 people by year ten, provided you started off living alone. Here’s another one: if you spent a thousand bucks on yourself in year one and the same thousand bucks on all 512 people in year ten, that would come to less than two bucks per person. Lots of savings, maybe, but your house would be rather overcrowded, possibly leading to fights and struggles.

The probable future of Moore’s Law is similar.

Back on April 19, 1965, Gordon Moore, then a researcher at Fairchild Semiconductor, wrote a paper predicting the pace of growth of technology. He said the number of transistors on a single chip would double annually, while the cost of the chip would remain roughly constant. This was fifty years ago, when the technology was nascent. Moore’s prediction was based on the number of components per integrated circuit (IC), and the corresponding minimum cost per component, observed between 1959 and 1964. Extrapolating that annual doubling, he predicted that by 1975 a single chip would hold as many as 65,000 components. The projection held up, and in 1975 Moore revised the pace to a doubling roughly every two years, a rate the industry more or less followed from then on. His prediction became a reference point for researchers and developers pushing processing power and mobility solutions beyond existing thresholds.
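The arithmetic behind that projection is easy to sketch. The snippet below is an illustration, not a reconstruction of Moore’s own chart: it assumes a hypothetical starting point of 64 components in 1965 and a flat chip cost of 1.0, chosen so that ten annual doublings land on 65,536, close to the 65,000 figure, and shows how a constant chip price makes the cost per component halve every year.

```python
# Sketch of Moore's 1965 extrapolation: components per chip double every year
# while the cost of the whole chip stays flat, so cost per component halves yearly.
# ASSUMPTION: the 1965 base of 64 components and the flat chip cost of 1.0 are
# illustrative round numbers, not figures taken from Moore's paper.

def components(year: int, base_year: int = 1965, base_count: int = 64) -> int:
    """Components per chip under annual doubling from a hypothetical base."""
    return base_count * 2 ** (year - base_year)

def cost_per_component(year: int, chip_cost: float = 1.0) -> float:
    """Relative cost of one component if the chip's total cost stays constant."""
    return chip_cost / components(year)

for year in (1965, 1970, 1975):
    print(year, components(year), f"{cost_per_component(year):.2e}")
```

Ten years of doubling multiplies the count by 2^10 = 1,024, which is why an annual doubling rate produces such large numbers so quickly.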

But there comes a time when everything peaks. At the 2011 International Supercomputing Conference, it was conceded that Moore’s Law might no longer be enough to drive processing innovation rapidly forward. We have reached a point where simply adding more transistors to a chip and more cores to a processor no longer guarantees meaningful progress. Most everyday tasks already run smoothly on PCs and mobile devices with four-core and eight-core processors. The 12-core and 16-core processors of the future may be technically feasible, but do we really need them?
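One standard way to see why extra cores pay off less and less is Amdahl’s law, a well-known model that the article itself does not name. The sketch below assumes, purely for illustration, that 90% of a task can run in parallel (the value of `P` is hypothetical); the remaining serial part caps the total speedup no matter how many cores are added.

```python
# Amdahl's law: if a fraction p of a task can run in parallel, n cores give
# speedup = 1 / ((1 - p) + p / n), which can never exceed 1 / (1 - p).
# ASSUMPTION: P = 0.9 is an illustrative parallel fraction, not a measured value.

def amdahl_speedup(p: float, n: int) -> float:
    """Ideal speedup on n cores for a task whose parallel fraction is p."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("p must be in [0, 1] and n must be >= 1")
    return 1.0 / ((1.0 - p) + p / n)

P = 0.9
for cores in (1, 4, 8, 12, 16):
    print(f"{cores:>2} cores: {amdahl_speedup(P, cores):.2f}x")
print(f"ceiling with unlimited cores: {1 / (1 - P):.1f}x")
```

Under this assumption, doubling from 8 to 16 cores buys only about a third more speed, and no core count gets past 10x.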

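There is also a physical problem with simply adding transistors to a chip. As transistors shrink toward just a few nanometres, quantum effects take over: at that scale, the uncertainty in an electron’s position (Heisenberg’s Uncertainty Principle) becomes comparable to the thickness of the insulating barriers, so electrons can tunnel straight through barriers that are supposed to block them. The result is current leakage, wasted power and heat, and potentially short circuits. Massively powerful processors that can melt down at any time? That certainly doesn’t sound like the future.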
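The leakage mechanism is usually described as quantum tunnelling, and a textbook estimate shows why it appears so abruptly as barriers get thinner. This is a swapped-in physics approximation, not the article’s own analysis: it keeps only the dominant factor T ≈ exp(−2κd) of the rectangular-barrier result and assumes the free-electron mass and a hypothetical 1 eV barrier, whereas real gate oxides have different barrier heights and effective masses.

```python
import math

# Rough tunnelling probability through a thin rectangular barrier, keeping only
# the dominant exponential factor: T ~ exp(-2 * kappa * d),
# with kappa = sqrt(2 * m * (V - E)) / hbar.
# ASSUMPTIONS: free-electron mass and a 1 eV barrier are illustrative only.

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
M_E = 9.1093837015e-31   # electron rest mass, kg
EV = 1.602176634e-19     # one electronvolt, J

def tunneling_probability(barrier_ev: float, thickness_nm: float) -> float:
    """barrier_ev is the barrier height above the electron's energy (V - E)."""
    kappa = math.sqrt(2 * M_E * barrier_ev * EV) / HBAR   # decay constant, 1/m
    return math.exp(-2 * kappa * thickness_nm * 1e-9)

for d in (3.0, 2.0, 1.0):
    print(f"{d:.1f} nm barrier: T ~ {tunneling_probability(1.0, d):.1e}")
```

Because the thickness sits inside an exponential, thinning the barrier from 2 nm to 1 nm raises the estimated leakage by several orders of magnitude rather than merely doubling it.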

The Impetus and the Drive

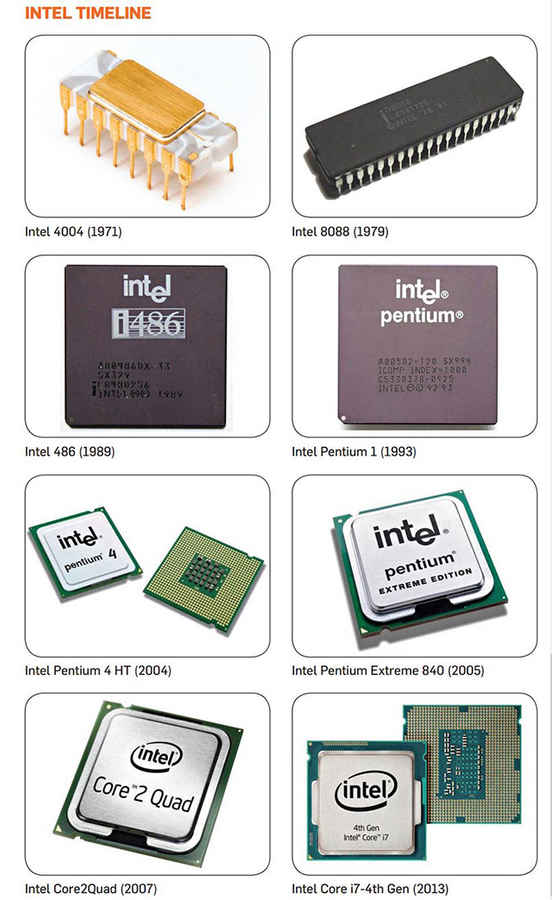

The Intel 4004, Intel’s first microprocessor, featured 2,250 transistors back in 1971. Three decades later, the Intel Pentium 4 HT processor sported 125 million transistors, more than 50,000 times as many as the 4004.

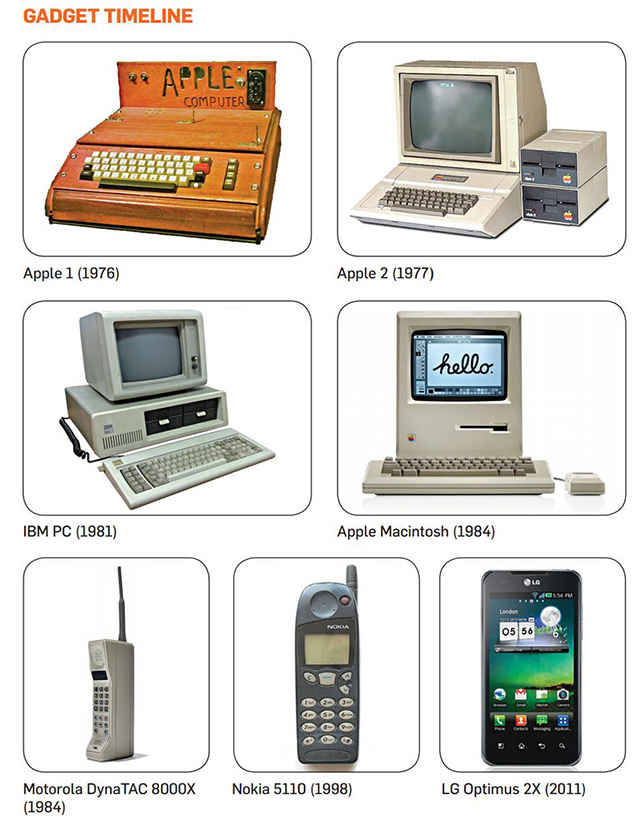

In 1974, Robert Dennard at IBM presented transistor scaling equations showing that as a transistor’s dimensions shrink, its operating voltage and current can be scaled down in proportion, so smaller transistors also become faster and more power-efficient. Another major milestone in the shrinking of form factors that Moore predicted was Apple’s entry into the technology world. Apple co-founder Stephen Wozniak designed a device in 1975 that would go on to become the Apple I personal computer, which was followed by the Apple II. These two personal computers raised demand for microprocessors and chipsets, calling for redesigned circuit boards and smaller components. In 1979, Intel introduced the 8088 processor, featuring 29,000 transistors.
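Dennard’s result is often summarised as constant-field scaling. The sketch below follows those standard textbook ratios for a scaling factor k; the value k = 1.4 (roughly a 0.7x shrink per generation) is an illustrative assumption. It shows why shrinking used to be a free lunch: each transistor gets faster and uses less power, while the power per unit of chip area stays the same.

```python
# Classic constant-field (Dennard) scaling by a factor k > 1: linear dimensions,
# voltage and current all shrink by 1/k. Values are ratios relative to the
# unscaled transistor (1.0 = unchanged).
# ASSUMPTION: k = 1.4 below is an illustrative per-generation factor.

def dennard_scaling(k: float) -> dict[str, float]:
    if k <= 0:
        raise ValueError("scaling factor must be positive")
    dims = 1 / k                    # gate length, width, oxide thickness
    voltage = 1 / k
    current = 1 / k
    capacitance = 1 / k             # C ~ area / thickness = (1/k^2) / (1/k)
    delay = capacitance * voltage / current     # switching delay ~ C*V/I -> 1/k
    power = voltage * current                   # power per transistor -> 1/k^2
    density = 1 / dims ** 2                     # transistors per area -> k^2
    return {
        "dimensions": dims,
        "delay": delay,
        "power per transistor": power,
        "transistors per area": density,
        "power density": power * density,       # stays ~1.0
    }

for name, ratio in dennard_scaling(1.4).items():
    print(f"{name:>22}: {ratio:.3f}")
```

The "power density" line staying at 1.0 is the key property; once voltages could no longer be lowered in step with dimensions, that balance broke and heat became the limit on clock speeds.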

The Intel 8088 famously powered the now-legendary IBM PC in 1981. A year later, Intel revealed the 80286 processor (with 134,000 transistors), keeping pace with Moore’s prediction of the rate of technological advancement. Components were not only getting smaller, they were also becoming more efficient in terms of energy use, cost, assembly and power delivery. Towards the end of the ’80s, Intel unveiled the Intel 486 processor, with 1.2 million transistors, by far the most advanced microprocessor of its time.

The ’90s were the era of Intel’s Pentium, alongside the popularisation of portable cellular phones. Although the Motorola DynaTAC arrived in 1984, it wasn’t until the mid-’90s that ‘cellphones’ became popular across the world. By then, the Nokia 5110 Communicator had become a beacon of technological advancement. In 1999 came Intel’s Pentium III series of processors, with 28 million transistors.
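The transistor counts quoted so far can be checked against Moore’s doubling idea directly. This sketch fits a straight line to log2(transistor count) against release year, so the slope is "doublings per year" and its inverse is the doubling time. Two of the years are assumptions: 1989 for the Intel 486 ("towards the end of the ’80s") and 2004 for the 125-million-transistor Pentium 4 HT ("three decades later").

```python
import math

# Transistor counts quoted in this article, with release years.
# ASSUMPTIONS: 1989 for the Intel 486 and 2004 for the Pentium 4 HT.
CHIPS = [
    ("Intel 4004", 1971, 2_250),
    ("Intel 8088", 1979, 29_000),
    ("Intel 80286", 1982, 134_000),
    ("Intel 486", 1989, 1_200_000),
    ("Pentium III", 1999, 28_000_000),
    ("Pentium 4 HT", 2004, 125_000_000),
]

def doubling_time_years(chips) -> float:
    """Least-squares fit of log2(transistors) against year; returns 1 / slope."""
    xs = [year for _, year, _ in chips]
    ys = [math.log2(count) for _, _, count in chips]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
        (x - x_mean) ** 2 for x in xs
    )  # doublings per year
    return 1 / slope

print(f"4004 -> Pentium 4 growth: {125_000_000 / 2_250:,.0f}x")
print(f"fitted doubling time: {doubling_time_years(CHIPS):.2f} years")
```

With these data points the fitted doubling time comes out at roughly two years, consistent with the revised, two-year form of Moore’s Law rather than the original annual doubling.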

With massive numbers of components being fitted into chipsets making faster processing possible, the rest of the technology world started growing around processor advancements. Colour displays and dedicated graphics processing units became commonplace. “This is not going to stop soon,” Moore famously said of his own “law” in 1995. And it didn’t.

Stepping Down

From the mid-2000s onwards, multi-core processors entered daily life, later trickling down to cellphones. Advanced Micro Devices (AMD) was the first to showcase a dual-core processor, in 2004, although Intel released the first commercially available dual-core processor in 2005: the Intel Pentium Extreme Edition 840. The world’s first cellphone to run on a multi-core processor was the LG Optimus 2X, powered by the dual-core Nvidia Tegra 2. These advances were not just technical achievements; they enabled further progress in software, augmented reality and cognitive computing. Graphics improved dramatically, and researchers used multi-core platforms to process far more data, advancing medical science and space research and building immensely powerful systems that now support many facets of life.

It is in this phase that Moore’s Law started to come under threat. Processors have reached a stage where adding more cores and components does not dramatically improve performance, because our everyday tasks already run about as fast as they can on existing architectures. Technology has started developing away from the Moore-centric drive. Moore’s Law, in the strict sense, is not a “law” at all: it is not grounded in any scientific or natural principle. It is an observation that happened to predict the path of five decades of technological progress. Silicon chips can only get so powerful, and adding more transistors to a processor will not considerably improve performance any further. Processor clock speeds, too, have stalled. Transistor switching speeds have not increased much in recent years, and power consumption and heat rise steeply at higher clock speeds, so performance can no longer be gained simply by running more clock cycles per second. Chips also contain long stretches of internal wiring, and no signal can travel through them faster than the speed of light. Light covers only about 30 centimetres in a nanosecond, and no amount of engineering can push beyond that.
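A quick calculation makes the speed-of-light limit concrete. Light in vacuum covers roughly 30 cm per nanosecond, so at multi-gigahertz clock rates a signal can cross only a few centimetres per cycle. Real on-chip wires are typically considerably slower than light; the `WIRE_FRACTION` below is a hypothetical illustrative value, not a measured one.

```python
# How far a signal can travel during one clock cycle, given that nothing
# moves faster than light.
# ASSUMPTION: WIRE_FRACTION is a hypothetical signal speed in a wire,
# expressed as a fraction of c, used only for illustration.

C = 299_792_458          # speed of light in vacuum, m/s
WIRE_FRACTION = 0.5      # hypothetical fraction of c for a signal in a wire

def distance_per_cycle_cm(clock_ghz: float, fraction_of_c: float = 1.0) -> float:
    """Distance in centimetres covered in one clock period."""
    period_s = 1 / (clock_ghz * 1e9)
    return C * fraction_of_c * period_s * 100   # metres -> centimetres

for ghz in (1.0, 3.0, 5.0):
    print(
        f"{ghz:.0f} GHz: light {distance_per_cycle_cm(ghz):.1f} cm, "
        f"wire ~{distance_per_cycle_cm(ghz, WIRE_FRACTION):.1f} cm per cycle"
    )
```

At 5 GHz even light manages only about 6 cm per cycle, which is why signal travel time, not just transistor speed, constrains how fast a chip can be clocked.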

In search of alternatives to silicon computing, researchers started looking at nanotechnology and molecular computing, moving away from Moore’s Law entirely. In molecular computing, IBM has experimented with oscillating circuits built on a single carbon nanotube, so that the entire circuit lives on one molecule. This approach does not rely on relaying electric impulses through conventional wires. Quantum computing is the future, and it departs from silicon transistor-based computing altogether. Still at a nascent stage, quantum computing replaces electronic binary data with qubits. A quantum bit is the quantum-mechanical counterpart of a single bit of information, able to hold a combination of two states at the same time. The advantage of quantum computing over silicon-based computing lies in these quantum principles. While a bit in present-day silicon computing can be in only one binary state (0 or 1), a qubit can exist in a superposition of both states (0 and 1) simultaneously.

Hence, a register of x qubits is described by $2^x$ amplitudes at once, whereas x classical bits hold just one of $2^x$ possible values at a time. For certain problems, quantum algorithms exploit this to process information far faster than any algorithm yet designed for the fastest multi-core processor. This quantum approach is far removed from the brute-force strategy of adding transistors, which did make clock cycles faster but has now reached the flat top of the developmental S-curve. Quantum computing is still far from mature, but it is the future, and the need for faster computing alternatives is already steering technology away from Moore’s half-century-old prediction.
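The growth from x qubits to 2^x amplitudes can be seen in a tiny classical simulation. This is a minimal state-vector sketch in plain Python, not a real quantum SDK, and `hadamard_all` is a hypothetical helper: it applies a Hadamard gate to every qubit of |00…0⟩, producing an equal superposition over all 2^n basis states. Note that measuring such a register still returns only one n-bit outcome; quantum speedups come from algorithms that use interference between amplitudes, not from reading out all 2^n values.

```python
import math

# Minimal state-vector picture of a qubit register (no quantum library used).
# An n-qubit register is described by 2**n complex amplitudes. Applying a
# Hadamard gate to every qubit of |0...0> gives an equal superposition of all
# 2**n basis states. Simulating this classically costs memory that grows as 2**n.

def hadamard_all(n: int) -> list[complex]:
    """Hypothetical helper: state after applying H to each of n qubits from |0...0>."""
    state = [1 + 0j]                       # zero qubits: a single amplitude of 1
    h = 1 / math.sqrt(2)
    for _ in range(n):
        # Tensor the existing register with H|0> = (|0> + |1>) / sqrt(2)
        state = [a * c for a in state for c in (h, h)]
    return state

for n in (1, 2, 3, 10):
    amps = hadamard_all(n)
    total = sum(abs(a) ** 2 for a in amps)  # measurement probabilities sum to 1
    print(f"{n:>2} qubits -> {len(amps)} amplitudes, total probability {total:.3f}")
```

The list doubles in length with every qubit added, which is exactly why classical machines struggle to simulate even modest quantum registers.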

The End

Present developments indicate that the future needs an alternative to silicon computing. Holographic displays are becoming real, and it might not be long before Samantha, the AI from the film Her, becomes a reality. While such technology is certainly enticing, it needs more horsepower than the current breed of commercially available multi-core processors can deliver.

Although the inertia of gradual development with transistors and cores will continue for a few years, the world of technology is turning away from adding cores and pumping up clocks to boost performance. The prediction Moore made back in 1965 inspired innovators to push chipsets all the way to deca-core processors by 2015. And even if it does not remain valid in future, you can’t deny its contribution to shrinking large, box-shaped computers with chunky keys into sleek metal-and-glass touchscreen devices with many times more computing power than ever before.

Note: We have deliberately left supercomputers out of the equation, as Moore’s Law mainly concerns the technology that powers everyday consumer devices.