What is Thread Parallelism, and How Do I Put It to Use?

Many movies have explored the great things humans could do if only they had access to 100 percent of the brain’s cognitive powers. While the myth persists that humans only use 10 percent of their brains, the truth is that activity runs throughout the entire organ each day.1 In fact, while our brains make up a mere 3 percent of the body’s weight, they use 20 percent of the body’s energy.

It’s an attractive notion to think that there’s vast untapped potential in each of us, and perhaps there is. Interestingly, the metaphor of dormant processing power can be applied when we talk about high-performance computing (HPC) and taking full advantage of the power of today’s hardware.

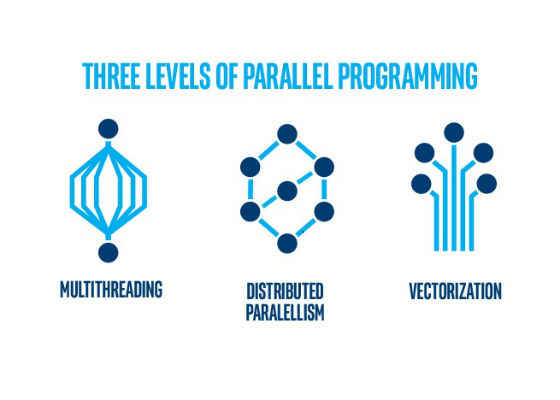

If you’re thinking about how to use your modern HPC hardware to its full potential, consider modernizing your code. For superior performance and long-term sustainability, there are three levels of parallel programming to consider: multithreading, distributed parallelism, and vectorization.

Scale for today’s and tomorrow’s hardware

Multi-level parallel algorithms that take advantage of the parallel features of modern hardware help your code scale forward, so that it runs well on today’s hardware and on tomorrow’s.

“Efforts spent to modernize code, with better parallelization, are clearly an investment in the future.”

James Reinders, Director and Chief Evangelist, Intel

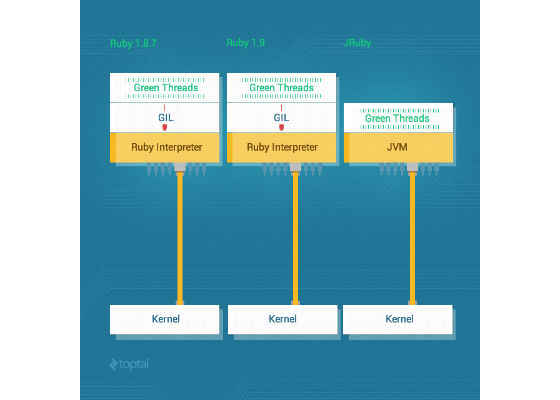

Improve software performance with multithreading

Multithreading (or thread parallelism) offers a good entry-level opportunity for developers to improve software performance on multi-core processors. With thread parallelism, a single process creates multiple threads and distributes them across the cores; these cooperating threads communicate via shared memory and work together on a larger task. Specifically, if your code has independent computations and its data fits in the memory of a single node, threading is a good way to speed up execution and sustain that code over time.

An easy method is to add OpenMP* pragmas (in C/C++) or directives (in Fortran*) to the parts of the code you want to run in parallel. The most common place to apply this scheme is a loop whose iterations are independent computations. With this approach, the program itself spawns threads of execution, which the system’s cores can run simultaneously. To share data between threads, simply write to and read from the shared memory. (It’s worth noting, however, that this step must be done carefully to ensure correct answers and to avoid race conditions, which occur when more than one thread accesses the same shared data at the same time and at least one of them changes it.)

Distributed parallelism for large data sets on multiple machines

Threading is a simpler starting point than distributed parallelism (or multinode optimization), where the same code runs independently on different machines and data is shared via message passing. Message passing becomes part of the algorithm design and must be constructed carefully so that no data is lost and no process waits for a message that will never arrive. Distributed parallelism is valuable when your data sets are too large to fit on a single machine and the computations can be distributed to work on subsets of the data.

Vectorization for compute-intensive workloads

When you’re dealing with compute-intensive workloads, such as genomics applications or large numerical computations, consider vectorization. Vectorization performs the same instruction on multiple pieces of data at once within a single core (known as SIMD, or single instruction, multiple data). This technique can speed up a computation by a factor of two, four, eight, or more, depending on the width of the core’s vector registers and the size of the data elements. The boost also applies to code that already uses threads, because each thread can run vectorized instructions on its own core.

Multithreaded visualization significantly increases the responsiveness of GUI applications

CADEX Ltd. is successfully using multithreaded algorithms to increase performance on multi-core systems.

VIPO saves the day

Learn the best practices for vectorization from Robert Geva, Principal Engineer and Manager of Financial Services Engineering Group.

Get the tools and resources you need to build modern code

There’s more detail on how to develop multithreaded applications in Intel’s quick-reference guide.

Intel has even more resources available to help you build modern code to take advantage of each level of parallelism. This includes training, code samples, case studies, developer kits, access to hands-on webinars, and more. We’ll help you extract the value from your code and master a code strategy that takes advantage of Intel® based architecture. Learn more about the Intel Modern Code program.

Source: https://software.intel.com/en-us/blogs/2016/02/17/what-is-thread-parallelism-and-how-do-i-put-it-to-use