Hands-On AI Part 21: Emotion-Based Music Transformation

A Tutorial Series for Software Developers, Data Scientists, and Data Center Managers

Survey

In this article we present our ideas about emotion-based transformations in music. The first part covers the theoretical aspects, with a score example for each transformation. The second part gives brief notes on the implementation: the tools used, and the challenges and limitations we faced.

Theory

There are two basic mediums of expression in music—pitch and rhythm. These mediums will be used as parameters to rewrite our melody in the selected mood.

In music theory, when we speak about pitch in a melody, we speak about relations between tones. A system of musical notes arranged in a pitch sequence is called a scale. The intervals, which measure the width of each step in the sequence, can differ from one another. These differences, or their absence, create relations between tones and melodic tendencies, where stabilities and attractions are perceived as expressions of mood. In the Western musical tradition, for a simple diatonic scale, the position of a tone on the scale relative to the first note is called a scale degree (I-II-III-IV-V-VI-VII). According to these stabilities and attractions, the scale degree gives a tone its function in the system. This makes the scale degree concept very useful for analyzing a simple melodic pattern and for encoding it so that different values can be assigned to it.

On the primary level, we have to choose artistically suitable scales to create any particular mood. So, if we need to change the mood of a melody, we have to investigate its functional structure using the described scale concept and then assign new values to the existing scale degree pattern. Like a map, this pattern also contains information about directions and periods in a melody.
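The idea of keeping the degree pattern and assigning it new values can be sketched in a few lines of Python. This is only an illustration, not the article's script: the names `parse_pattern` and `realize` are hypothetical, the tonic is assumed to be middle C (MIDI 60), and octave placement is ignored for simplicity.

```python
# Semitone offsets of degrees I..VII above the tonic.
MAJOR = {1: 0, 2: 2, 3: 4, 4: 5, 5: 7, 6: 9, 7: 11}
NATURAL_MINOR = {1: 0, 2: 2, 3: 3, 4: 5, 5: 7, 6: 8, 7: 10}

ROMAN = {"I": 1, "II": 2, "III": 3, "IV": 4, "V": 5, "VI": 6, "VII": 7}


def parse_pattern(text):
    """Turn a pattern such as 'V-V-VI-V-I-VII' into a list of degree numbers."""
    return [ROMAN[token] for token in text.split("-")]


def realize(degrees, scale, tonic_midi=60):
    """Assign pitch values to a degree pattern (all notes in one octave)."""
    return [tonic_midi + scale[degree] for degree in degrees]


phrase = parse_pattern("V-V-VI-V-I-VII")
print(realize(phrase, MAJOR))          # [67, 67, 69, 67, 60, 71]
print(realize(phrase, NATURAL_MINOR))  # [67, 67, 68, 67, 60, 70]
```

The same degree list produces a different melody depending only on the scale table passed in, which is the property the transformations below rely on.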

For every particular mood, we will use some extra parameters to make our new melodies more expressive, harmonious, and meaningful.

Rhythm is a way to organize sounds in time. It includes the order in which tones appear, their relative length, the pauses between them, and accents. This is how periods are created to structure musical time. If we need to preserve the original melodic pattern, we need to preserve its rhythmic structure. Only a few rhythmic parameters need to change, namely the length of notes and pauses, and this is still sufficient artistically. Also, to make our rewritten melody more expressive, we can use several extra ways to accent the mood.

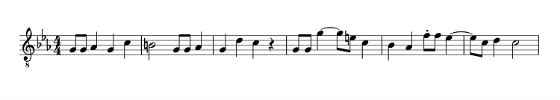

ANXIETY could be expressed through the minor scale and more energetic rhythm. Our original scale degree pattern is V-V-VI-V-I-VII V-V-VI-V-II-I V-V-V(upper)-III-I-VII-VI IV-IV-III-I-II-I.

Figure 1: Original pattern.

The original melody is written in a major scale, which differs from the minor scale by three notes: in the major scale the III, VI, and VII degrees are major (high), and in the minor scale they are a half-tone lower. So to change the scale, we just need to replace the high degrees with the low ones. But to create a more specific effect of anxiety, we keep the VII degree major (high), which yields the harmonic minor scale. This increases the instability of that tone and sharpens the scale in a specific way.
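As a minimal sketch of this pitch change, assuming the melody is available as parallel lists of MIDI pitches and major-scale degrees, the III and VI degrees are lowered by a half-tone while the VII is left high. The function name `to_anxiety_pitches` is hypothetical.

```python
# Degrees lowered by one semitone; VII is deliberately kept high.
LOWERED_DEGREES = {3, 6}


def to_anxiety_pitches(pitches, degrees):
    """Lower every III and VI by a half-tone, leaving other degrees untouched."""
    return [
        pitch - 1 if degree in LOWERED_DEGREES else pitch
        for pitch, degree in zip(pitches, degrees)
    ]


# First phrase V-V-VI-V-I-VII in C major: G4 G4 A4 G4 C5 B4.
pitches = [67, 67, 69, 67, 72, 71]
degrees = [5, 5, 6, 5, 1, 7]
print(to_anxiety_pitches(pitches, degrees))  # [67, 67, 68, 67, 72, 71]
```

Only the A (VI) moves to A-flat here; the B (VII) stays a half-tone below the tonic, which keeps its pull toward the I degree.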

To make rhythm more energetic, we can create a syncopation, or an off-beat interruption of the regular flow of the rhythm by changing the position of some notes. In this case, we will move several similar notes one beat further.

Figure 2: ANXIETY transformation.

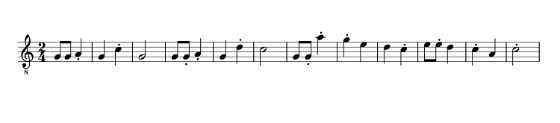

SADNESS can also be expressed through the simple minor scale, but should be rhythmically calm. So we replace major degrees by minor, including VII. To make rhythm calmer, we need to fill in pauses by extending the length of notes before them.
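The rhythmic part of this transformation can be sketched as follows, assuming a phrase is a list of `(degree, duration)` pairs in which a rest is marked by `None`. Both the representation and the name `fill_rests` are assumptions made for illustration.

```python
def fill_rests(events):
    """Extend each note over the rest that follows it.

    events is a list of (degree_or_None, duration_in_beats); None marks a rest.
    A rest at the very start has no preceding note and is kept as it is.
    """
    result = []
    for degree, duration in events:
        if degree is None and result:
            previous_degree, previous_duration = result[-1]
            result[-1] = (previous_degree, previous_duration + duration)
        else:
            result.append((degree, duration))
    return result


phrase = [(5, 0.5), (5, 0.5), (6, 1.0), (None, 1.0), (5, 1.0)]
print(fill_rests(phrase))  # [(5, 0.5), (5, 0.5), (6, 2.0), (5, 1.0)]
```

The total length of the phrase is unchanged, so the periods of the original melody stay aligned.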

Figure 3: SADNESS transformation.

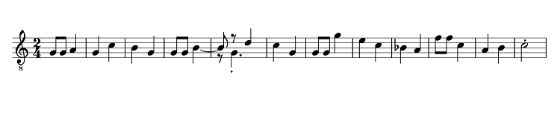

To express AWE, we should avoid determined and strict intonations; this is our principle of conversion. As described above, the degrees differ in their distance from the first (I) degree and in their pull toward it, and this is what gives each degree its meaning. Movement from the IV to the I degree is very direct because of its function. The intonation from the V to the I also sounds very direct. We will avoid those two intonations to create an effect of space and generality.

So in every piece of the pattern where the V goes to the I, the IV goes to the I, or vice versa, we replace one or both of these notes with the closest other degrees. Rhythm can be changed in the same way as for the SADNESS effect, simply by slowing down the tempo.
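One possible reading of this rule is sketched below. The text does not say which neighbor to choose, so the substitutes used here (V becomes VI, IV becomes III) are an assumption, picked because they are adjacent degrees that do not themselves form a direct intonation with the I. The name `soften` is hypothetical.

```python
# Hypothetical substitutes for the degrees that move too directly to or from I.
SUBSTITUTE = {4: 3, 5: 6}


def soften(degrees):
    """Replace IV or V whenever it stands directly next to I in the phrase."""
    result = list(degrees)
    for i in range(len(result) - 1):
        current, following = result[i], result[i + 1]
        if current == 1 and following in SUBSTITUTE:
            result[i + 1] = SUBSTITUTE[following]
        elif following == 1 and current in SUBSTITUTE:
            result[i] = SUBSTITUTE[current]
    return result


print(soften([5, 5, 6, 5, 1, 7]))  # [5, 5, 6, 6, 1, 7]
print(soften([4, 4, 3, 1, 2, 1]))  # [4, 4, 3, 1, 2, 1]
```

In the first phrase only the V that leads into the I is changed; the last phrase contains no direct IV-I or V-I step, so it is left as it is.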

Figure 4: AWE transformation.

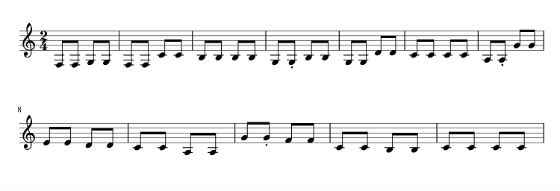

DETERMINATION is about powerful movement, so the simplest way to show it is to change the rhythm the same way as we did for ANXIETY. We also need to cut the length of all notes except the last note of every period; for example, in V-V-VI-V-I-VII V-V-VI-V-II-I the closing VII and I keep their full length.
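The note-shortening step can be sketched like this, assuming a period is a list of `(degree, duration)` pairs and that each shortened note is followed by a rest (`None`) so that later notes keep their original positions. The shortening factor of one half and the name `shorten_period` are assumptions.

```python
def shorten_period(period, factor=0.5):
    """Shorten every note except the last one, padding with rests."""
    result = []
    for degree, duration in period[:-1]:
        sounded = duration * factor
        result.append((degree, sounded))
        result.append((None, duration - sounded))  # rest keeps the onset grid
    result.extend(period[-1:])  # the closing note keeps its full length
    return result


period = [(5, 1.0), (5, 1.0), (6, 2.0)]
print(shorten_period(period))
# [(5, 0.5), (None, 0.5), (5, 0.5), (None, 0.5), (6, 2.0)]
```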

Figure 5: DETERMINATION transformation.

The major scale already sounds positive and joyful, but to accent and express HAPPINESS/JOY we use the major pentatonic scale. It consists of the same degrees with two omitted, the fourth and the seventh, which leaves I-II-III-V-VI.

So every time we meet those two degrees in our pattern, we replace them with the closest ones. To accent the simplicity that our scale provides, we use a descending melodic piece consisting of five or more notes as a place to represent our scale, step-by-step.
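The degree replacement can be sketched by snapping each pitch to the nearest pitch of the major pentatonic scale. This is a sketch under the assumption that the melody is given as MIDI pitches with the tonic at middle C; the name `snap_to_pentatonic` is hypothetical, and the descending step-by-step run mentioned above is not covered.

```python
# Pitch classes of the major pentatonic scale relative to the tonic (I-II-III-V-VI).
PENTATONIC = {0, 2, 4, 7, 9}


def snap_to_pentatonic(pitch, tonic=60):
    """Return the closest pitch that belongs to the major pentatonic scale."""
    for distance in range(3):
        for candidate in (pitch - distance, pitch + distance):
            if (candidate - tonic) % 12 in PENTATONIC:
                return candidate
    raise ValueError("no pentatonic pitch found near %d" % pitch)


# Last phrase IV-IV-III-I-II-I in C major: F4 F4 E4 C4 D4 C4.
print([snap_to_pentatonic(p) for p in [65, 65, 64, 60, 62, 60]])
# [64, 64, 64, 60, 62, 60]
print(snap_to_pentatonic(71))  # 72
```

Measured in half-tones, the IV lies closest to the III and the VII lies closest to the upper I, so those are the replacements this sketch picks.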

Figure 6: JOY transformation.

TRANQUILITY/SERENITY can be expressed not just by changing the scale, but also by reorganizing melodic movement. For this, we need to analyze the original pattern and define similar segments. The first note of every segment defines the harmonic context of the phrase, which is why these first notes matter most: V-V-VI-V-I-VII V-V-VI-V-II-I V-V-V-III-I-VII-VI IV-IV-III-I-II-I.

For the first segment we should use only these degrees, IV-V-I-VII; for the second, V-VII-II-I; the third, VI-VII-III-II; and the fourth, VII-IV-I-VII.

These sets of possible degrees are actually another type of musical structure: chords. Still, we can use them as a system to reorganize a melody. Degrees can be replaced by the closest ones from these prescribed chord patterns. If the whole segment starts from a lower tone than it did originally, we need to replace all degrees with the lower ones available from the prescribed pattern. Also, to create a delay effect, we split every note into eighth notes and gradually decrease the velocity of those new eighth notes.
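Two pieces of this transformation can be sketched independently: choosing the closest allowed degree, and the delay effect. The sketch assumes durations are measured in quarter-note beats and are multiples of an eighth note; the starting velocity, the decay factor, the tie-breaking toward the lower degree, and the names `closest_allowed` and `echo` are all assumptions.

```python
EIGHTH = 0.5  # length of an eighth note in quarter-note beats


def closest_allowed(degree, allowed):
    """Pick the allowed degree nearest to the given one (lower one on a tie)."""
    return min(sorted(allowed), key=lambda candidate: abs(candidate - degree))


def echo(pitch, duration, start_velocity=90, decay=0.8):
    """Split a note into eighth notes whose velocity fades step by step."""
    count = int(duration / EIGHTH)
    if count < 1:
        return [(pitch, duration, start_velocity)]
    return [
        (pitch, EIGHTH, max(1, round(start_velocity * decay ** i)))
        for i in range(count)
    ]


first_segment_degrees = {4, 5, 1, 7}
print(closest_allowed(6, first_segment_degrees))  # 5
print(echo(67, 2.0))
# [(67, 0.5, 90), (67, 0.5, 72), (67, 0.5, 58), (67, 0.5, 46)]
```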

Figure 7: TRANQUILITY/SERENITY transformation.

To accent GRATITUDE, we use a stylistic reference in rhythm that creates an arpeggio effect: we come back to the first note at the end of every segment (phrase). To do this, we cut the last note of each segment to half its length and place the segment's first note in the freed half.
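A minimal sketch of this step, assuming a segment is a list of `(degree, duration)` pairs whose boundaries are already known (the name `return_to_first` is hypothetical):

```python
def return_to_first(segment):
    """Halve the last note and fill the freed half with the segment's first note."""
    if not segment:
        return []
    first_degree = segment[0][0]
    last_degree, last_duration = segment[-1]
    half = last_duration / 2
    return list(segment[:-1]) + [(last_degree, half), (first_degree, half)]


segment = [(5, 1.0), (6, 1.0), (1, 2.0)]
print(return_to_first(segment))
# [(5, 1.0), (6, 1.0), (1, 1.0), (5, 1.0)]
```

The segment keeps its total length, so the change does not shift the phrases that follow.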

Figure 8: GRATITUDE transformation.

Practice

Python* and music21* toolkit

The transformational script was implemented by using Python* and the music21* toolkit 1.

Music21 provides flexible, high-level classes for manipulating musical concepts such as notes, measures, chords, scales, and so on. This makes it possible to operate directly in the domain, rather than performing low-level manipulations on raw musical instrument digital interface (MIDI) data from a file. However, working directly with MIDI files in music21 isn’t always suitable, especially when it comes to score visualization 2. Therefore, for visualization and algorithm implementation, it is more convenient to convert the source MIDI files to musicXML* 3. Moreover, musicXML is the input format for BachBot*, which is the next stage in our processing chain.

Conversion could be performed via Musescore* 4:

- for musicXML out:

musescore input.mid -o output.xml

- for MIDI out:

musescore input.xml -o output.mid

Jupyter*

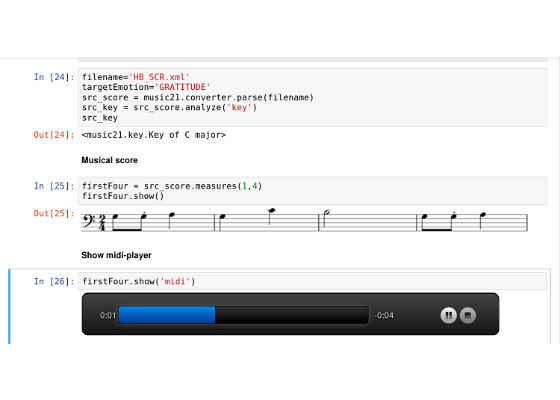

The music21 toolkit is well integrated with Jupyter* 5. In addition, integration with Musescore makes it possible to show the score right in the Jupyter notebook and to listen to the results via an integrated player during development and experimentation.

Figure 9: Jupyter* notebook with code, score, and player.

The Score Show feature is especially useful for collaboration between a programmer and a music theorist. The combination of Jupyter's interactive nature, the domain-specific representation of music21, and the simplicity of the Python language makes this workflow especially promising for this kind of cross-disciplinary research.

Implementation

The transformation script was implemented as a Python module, so it can be called directly:

python3 emotransform.py --emotion JOY input.mid

Or, via an external script (or Jupyter):

from emotransform import transform
transform('input.mid', 'JOY')

In both cases the result is an emotion-modulated file.

Transformations related to changes of note degree (ANXIETY, SADNESS, AWE, JOY) are based on the music21.note.Note.transpose function combined with analysis of the current and target degree positions of each note. Here we use the music21.scale module and its functions for building the needed scale from any root note 6. To retrieve the root note of a particular melody, we can use the analyze('key') function of the music21.stream.Stream class 7.
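How these calls might fit together is sketched below for the major-to-minor case. This is not the article's emotransform code: the names `semitone_shift` and `to_minor` are hypothetical, the melody is assumed to be in a major key, and music21 is imported inside the function so that the pure helper can be used without the library installed.

```python
# Semitone change for each major-scale degree when moving to natural minor.
MAJOR_TO_MINOR_SHIFT = {3: -1, 6: -1, 7: -1}


def semitone_shift(degree, shifts=MAJOR_TO_MINOR_SHIFT):
    """Return how many semitones a note on the given degree should move."""
    return shifts.get(degree, 0)


def to_minor(path):
    """Parse a file, detect its key, and lower the III, VI, and VII degrees."""
    from music21 import converter, note, scale  # requires music21

    score = converter.parse(path)
    key = score.analyze('key')            # estimated key; assumed to be major
    major = scale.MajorScale(key.tonic)   # scale built from the root note
    for element in score.recurse().notes:
        if not isinstance(element, note.Note):
            continue                      # skip chords and other elements
        degree = major.getScaleDegreeFromPitch(element.pitch)
        shift = semitone_shift(degree) if degree is not None else 0
        if shift:
            # Transposing by semitones may need enharmonic respelling
            # if the result is going to be displayed as a score.
            element.transpose(shift, inPlace=True)
    return score
```

A call such as `to_minor('input.xml').write('midi', fp='output.mid')` would then save the result; the file names are placeholders.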

Phrase-based transformations (DETERMINATION, GRATITUDE, TRANQUILITY/SERENITY) require additional research, which should allow us to detect the beginning and end of phrases precisely.

Conclusion

In this article we presented the core idea behind emotion-based music transformation: manipulating the position of a particular note on the scale relative to the tonic (the note degree), the tempo of the piece, and the musical phrase. The idea was implemented as a Python script. However, theoretical ideas are not always easy to implement in the real world, so we encountered some challenges and identified possible directions for future research. This research mostly relates to musical phrase detection and phrase transformation. The right choice of tools (music21) and research in the field of music information retrieval are key to resolving such tasks.

Emotion-based transformation is the first stage in our music processing chain; the next one is feeding transformed and prepared melody to BachBot.

Source:https://software.intel.com/en-us/articles/hands-on-ai-part-21-emotion-based-music-transformation