Track Reconstruction with Deep Learning at the CERN CMS Experiment

Connecting the Dots

As part of the CERN openlab summer school, I am participating in the Intel Modern Code Developer Challenge. The goal of this challenge is to write a few technical posts about our projects at CERN, to share part of our work with the Intel developer community.

In the following weeks I will describe the results of my project in detail, including the software and hardware used. This first post is a general overview of the context and purpose of the project.

CERN

The European Organization for Nuclear Research (CERN) is located in Geneva, Switzerland. It hosts the Large Hadron Collider (LHC), the biggest particle accelerator in the world, which is installed in a 27 km tunnel about 100 m underground. Inside the accelerator, two proton beams are accelerated through various stages in opposite directions until they reach a velocity close to the speed of light, and they collide with a center-of-mass energy of 13 TeV. These collisions generate new particles, which are recorded by particle detectors. Physicists study the recorded data to discover new particles or to better understand the properties of known ones. There are four main experiments at the LHC, but I will talk only about CMS, the one I am working on.

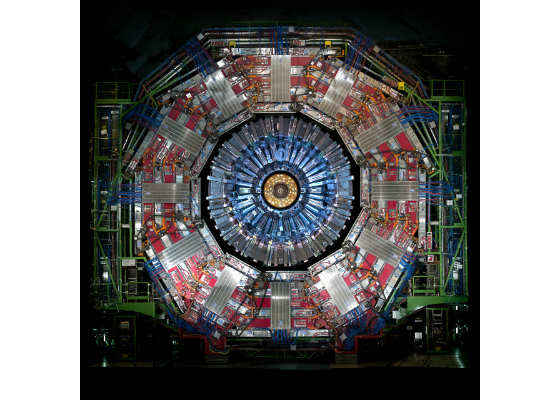

CMS

The Compact Muon Solenoid (CMS) is a general-purpose detector used to detect the particles generated by proton-proton collisions. It is composed of many cylindrical layers. The innermost part is the silicon tracker, divided into two subdetectors: the pixel tracker and the strip tracker. It is designed to measure particle trajectories with high precision, and it is made entirely of silicon to endure the high level of radiation inside the detector. The inner subdetector, the pixel tracker, is made of small square sensors, while the outer subdetector, the strip tracker, is made of rectangular strips. Both kinds of sensor detect charged particles. Next come the calorimeters, used to measure the particles' energy. The next layer is the superconducting solenoid, which generates a 4 T magnetic field. The magnetic field bends the trajectories of charged particles, which makes it possible to measure the charge and momentum, and ultimately the mass, of each particle. Finally, the outermost layers of the detector are the muon detectors, which are used to identify muons.
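To give a feeling for how the magnetic field turns curvature into a momentum measurement, here is a minimal sketch based on the standard relation p_T [GeV/c] ≈ 0.3 · |q| · B [T] · r [m] for a charged particle in a uniform field. The function names are only illustrative, and a uniform 4 T field is a simplification of the real detector.

```python
def bending_radius_m(pt_gev, b_tesla=4.0, charge=1):
    """Radius of curvature (metres) of a charged particle in a uniform field.

    Uses the approximate relation p_T [GeV/c] = 0.3 * |q| * B [T] * r [m].
    """
    if b_tesla <= 0 or charge == 0:
        raise ValueError("need a non-zero field and a charged particle")
    return pt_gev / (0.3 * abs(charge) * b_tesla)


def pt_from_radius_gev(radius_m, b_tesla=4.0, charge=1):
    """Invert the relation: transverse momentum from a measured radius."""
    return 0.3 * abs(charge) * b_tesla * radius_m


# Low-momentum particles curl tightly, high-momentum ones are almost straight.
for pt in (1.0, 10.0, 100.0):
    print(f"pT = {pt:6.1f} GeV/c -> r = {bending_radius_m(pt):7.2f} m")
```

The direction of the bending gives the sign of the charge, and the radius gives the transverse momentum, which is why a precise measurement of the trajectory matters so much.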

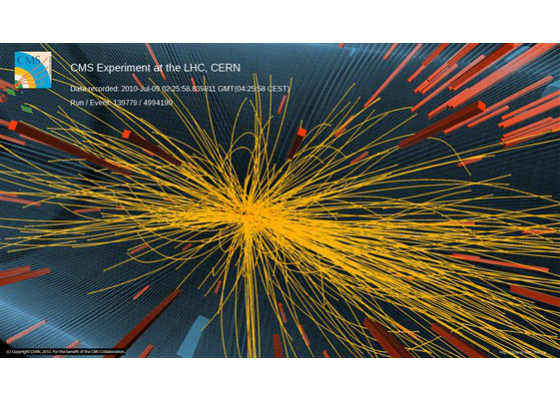

Each detector generates a huge amount of data. A new collision happens every 25 ns, and the detector records about 1 PB/s of data from the collisions. This data must be filtered because it cannot all be written to persistent storage. Fortunately, only a small fraction of the events are interesting for analysis. A first filter, called the L1 trigger, selects events based on simple signatures, for example the presence of high-energy particles. This first coarse filter reduces the event rate to about 100 kHz. The next step is the High Level Trigger (HLT), which performs a more complex analysis to further reduce the rate to about 1 kHz.
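As a quick back-of-the-envelope check of these numbers, the short sketch below derives the collision rate from the 25 ns spacing and the overall reduction needed to reach about 1 kHz. It only uses figures quoted in this post; the function names are mine.

```python
COLLISION_SPACING_S = 25e-9   # one collision (bunch crossing) every 25 ns
RAW_DATA_BYTES_PER_S = 1e15   # about 1 PB/s recorded by the detector
HLT_OUTPUT_HZ = 1e3           # about 1 kHz of events kept after the HLT


def crossing_rate_hz(spacing_s):
    """Number of collisions (bunch crossings) per second."""
    return 1.0 / spacing_s


def reduction_factor(rate_in_hz, rate_out_hz):
    """How many input events there are for every event that is kept."""
    return rate_in_hz / rate_out_hz


rate = crossing_rate_hz(COLLISION_SPACING_S)
print(f"collision rate:      {rate / 1e6:.0f} MHz")
print(f"data per collision:  {RAW_DATA_BYTES_PER_S / rate / 1e6:.0f} MB")
print(f"overall reduction:   1 event kept in {reduction_factor(rate, HLT_OUTPUT_HZ):,.0f}")
```

In other words, the two trigger levels together have to discard all but a few events in every hundred thousand, and they have to do it in real time.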

Track Reconstruction

To select the interesting events in the HLT, it is necessary to reconstruct the trajectory of each particle. To do that, the raw data from the silicon tracker is processed to recover the trajectories.

The silicon tracker is made of several cylindrical layers centered around the interaction region. The first four layers of the tracker are part of the pixel tracker and are made of millions of pixel channels that emit electrons when a charged particle traverses them. These electrons are read out by fast electronics in the detector and sent to the L1 trigger.

In the HLT, raw data from the detector is processed to obtain hit clusters, which are formed by neighbouring pixels with an ADC value greater than zero. The cluster shape depends on the particle, on its trajectory, and on the module that has been hit.
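The clustering idea can be sketched in a few lines. The example below is a simplified illustration, not the CMS implementation: it assumes that "nearby" means 8-connected neighbours (touching sides or corners) and that the hit position is the charge-weighted centre of the cluster. Both choices, like the function names, are my assumptions.

```python
from collections import deque


def find_clusters(adc):
    """Group pixels with ADC > 0 into clusters of 8-connected neighbours.

    `adc` is a list of rows of ADC counts. Returns a list of clusters,
    each one a list of (row, col, adc) tuples.
    """
    n_rows = len(adc)
    n_cols = len(adc[0]) if adc else 0
    seen = [[False] * n_cols for _ in range(n_rows)]
    clusters = []
    for r in range(n_rows):
        for c in range(n_cols):
            if adc[r][c] <= 0 or seen[r][c]:
                continue
            # Breadth-first search starting from an unvisited fired pixel.
            seen[r][c] = True
            queue = deque([(r, c)])
            cluster = []
            while queue:
                y, x = queue.popleft()
                cluster.append((y, x, adc[y][x]))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < n_rows and 0 <= nx < n_cols
                                and not seen[ny][nx] and adc[ny][nx] > 0):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            clusters.append(cluster)
    return clusters


def charge_weighted_centre(cluster):
    """Estimate the hit position as the ADC-weighted mean pixel position."""
    total = sum(a for _, _, a in cluster)
    row = sum(y * a for y, _, a in cluster) / total
    col = sum(x * a for _, x, a in cluster) / total
    return row, col


# A tiny made-up module with two separate groups of fired pixels.
module = [
    [0, 0, 0, 0, 0, 0],
    [0, 12, 30, 0, 0, 0],
    [0, 0, 18, 0, 0, 7],
    [0, 0, 0, 0, 0, 9],
]
for cl in find_clusters(module):
    print(len(cl), "pixels, centre at", charge_weighted_centre(cl))
```

The small ADC image of each cluster is exactly the kind of information that the shape-based filtering described later in this post can exploit.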

Track reconstruction is by its nature a combinatorial problem. Given a partial track formed by hits found in the inner layers, there can be multiple hits compatible with the current track estimate, and all of them must be checked because we don't want to lose any track during this process. For this reason, track reconstruction takes most of the CPU time.

It is implemented as an iterative algorithm where each iteration applies the following steps:

• seed generation

• track finding

• track fitting

• track selection

In seed generation, track seeds are created from hits found in the inner layers of the detector. A set of parameters determines which hits are compatible and can form a seed, such as the set of compatible layers or the search window to use in each layer. The seeds found in the first step are used for track finding, which looks for additional hits in the outer layers. After all the hits have been associated with the track, track fitting determines the parameters of the trajectory. The last step of the iteration is track selection, which is necessary because the previous steps can generate fake tracks. This step looks for signals that indicate a fake track, such as a large number of missing hits. Note that missing hits can have different causes, such as broken pixels or a region not covered by sensors (e.g. the region between two modules).

The previous steps are repeated iteratively, each time with different parameters for the seeding phase. With this method it is possible to search for the easy tracks first, remove the hits associated with the found tracks from subsequent searches, and look for the more difficult tracks in later iterations, in a less dense environment.
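The structure of this iterative scheme can be illustrated with a deliberately tiny toy model. It is not the CMS algorithm: I assume straight tracks coming from the origin with no magnetic field, so a track has (almost) the same azimuthal angle phi on every layer, a hit is just a `(layer, phi)` pair, the "fit" is the mean phi, and the wrap-around of phi is ignored. All names and parameter values are hypothetical.

```python
N_LAYERS = 4


def run_iteration(hits, window, max_missing):
    """One pass of seeding, finding, fitting and selection on unused hits."""
    by_layer = [[h for h in hits if h[0] == layer] for layer in range(N_LAYERS)]
    tracks, used = [], set()
    # 1. Seed generation: pairs of hits on the two innermost layers.
    for a in by_layer[0]:
        for b in by_layer[1]:
            if a in used or b in used or abs(a[1] - b[1]) > window:
                continue
            # 2. Track finding: extend the seed to the outer layers.
            candidate = [a, b]
            seed_phi = (a[1] + b[1]) / 2
            for layer in range(2, N_LAYERS):
                close = [h for h in by_layer[layer]
                         if h not in used and abs(h[1] - seed_phi) <= window]
                if close:
                    candidate.append(min(close, key=lambda h: abs(h[1] - seed_phi)))
            # 3. Track fitting: in this toy, just the mean phi of the hits.
            phi = sum(h[1] for h in candidate) / len(candidate)
            # 4. Track selection: reject candidates with too many missing hits.
            if N_LAYERS - len(candidate) <= max_missing:
                tracks.append((phi, candidate))
                used.update(candidate)
    return tracks, used


def reconstruct(hits, iterations):
    """Run several iterations, removing the hits of found tracks each time."""
    remaining = set(hits)
    all_tracks = []
    for window, max_missing in iterations:
        tracks, used = run_iteration(sorted(remaining), window, max_missing)
        all_tracks.extend(tracks)
        remaining -= used
    return all_tracks, remaining


hits = [
    (0, 0.50), (1, 0.50), (2, 0.51), (3, 0.50),   # an easy, clean track
    (0, 1.20), (1, 1.26), (3, 1.25),              # a harder one, layer 2 missing
    (2, 2.50),                                    # an isolated noise hit
]
# First a tight search with no missing hits allowed, then a looser one.
tracks, leftover = reconstruct(hits, iterations=[(0.02, 0), (0.1, 1)])
for phi, track_hits in tracks:
    print(f"track at phi = {phi:.3f} with {len(track_hits)} hits")
print("unused hits:", leftover)
```

With these made-up hits, the clean track is found in the first, tight pass, the track with a missing hit is found only in the second, looser pass, and the isolated noise hit is left over.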

Pileup

The main problem with this approach is the huge number of fake tracks generated during seed generation and track finding. This is worsened by the fact that multiple collisions happen at each bunch crossing, a phenomenon called pileup (PU). Today about 25 collisions happen at each bunch crossing. In 2025 the HL-LHC (High Luminosity LHC) will become active and it will produce 10 times more data, with a pileup of 250. The number of hits will increase accordingly, and because fake tracks come from random combinations of hits, their number will grow much faster than the number of hits.
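To get a feeling for how quickly the combinatorics grow, consider an upper bound on the number of hit combinations that a seeding step has to look at before any compatibility cut. The sketch below assumes that the number of hits per layer scales linearly with the pileup; the figure of 20 hits per collision per layer is purely hypothetical and cancels out in the ratio.

```python
def candidate_combinations(pileup, hits_per_collision_per_layer, n_layers_in_seed):
    """Upper bound on seed candidates: one hit chosen on each seeding layer."""
    hits_per_layer = pileup * hits_per_collision_per_layer
    return hits_per_layer ** n_layers_in_seed


HITS_PER_COLLISION = 20  # hypothetical value, for illustration only

for n_layers, name in ((2, "doublets"), (3, "triplets")):
    today = candidate_combinations(25, HITS_PER_COLLISION, n_layers)
    hl_lhc = candidate_combinations(250, HITS_PER_COLLISION, n_layers)
    print(f"{name}: {today:,} -> {hl_lhc:,} (x{hl_lhc // today})")
```

Under this simple assumption, ten times more hits means a hundred times more possible pairs and a thousand times more possible triplets, almost all of them fake. Real seeding applies geometric cuts that remove most of them, but the trend is the same.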

The current algorithms and hardware are not capable of handling that amount of data and must be improved to overcome this upcoming challenge.

One of the most promising solutions is the parallelization of the algorithms. This approach requires a complete redesign of the algorithms, which must be adapted to support vectorization or execution on specialized hardware, such as GPUs and FPGAs.

Doublet filtering

The objective of my project is to reduce the number of fake tracks as early as possible, to avoid performing useless computation afterwards. To achieve this goal, we are trying to filter out the bad track seeds, those which are not part of a real track. In particular, we are developing a machine learning model based on convolutional neural networks. The model takes as input two hit clusters, together with some additional information about them, such as their global coordinates and an ID that identifies the exact module of the detector where each hit resides, and it outputs the probability that the two hits belong to a real track. This model can be applied right after the first stage of seed generation, which produces pairs of hits from the inner layers. From now on we will call such a pair a doublet.
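To make the inputs and the output of such a model concrete, here is a dependency-free toy forward pass. It is only a sketch of the data flow and not our actual network: the patch size, the single convolutional filter, the pooling, the choice and scaling of the extra features, and the random (untrained) weights are all hypothetical.

```python
import math
import random

PATCH = 8    # assumed cluster patch size in pixels (hypothetical)
KERNEL = 3   # assumed convolution kernel size (hypothetical)


def conv2d_valid(channels, kernels):
    """Cross-correlate each channel with its kernel and sum the results."""
    k = len(kernels[0])
    size = len(channels[0]) - k + 1
    out = [[0.0] * size for _ in range(size)]
    for image, kernel in zip(channels, kernels):
        for i in range(size):
            for j in range(size):
                out[i][j] += sum(image[i + a][j + b] * kernel[a][b]
                                 for a in range(k) for b in range(k))
    return out


def doublet_score(inner_patch, outer_patch, extra, params):
    """Return a number in (0, 1): the toy 'probability' of a true doublet."""
    # The two cluster images are stacked as two input channels.
    feature_map = conv2d_valid([inner_patch, outer_patch], params["kernels"])
    # ReLU followed by global average pooling gives one image feature.
    activations = [max(0.0, v) for row in feature_map for v in row]
    pooled = sum(activations) / len(activations)
    # The image feature is concatenated with the extra per-hit information.
    features = [pooled] + list(extra)
    logit = params["bias"] + sum(w * x for w, x in zip(params["weights"], features))
    return 1.0 / (1.0 + math.exp(-logit))


def random_params(n_extra, rng):
    """Untrained, random weights: only the data flow is meaningful here."""
    def small():
        return rng.uniform(-0.1, 0.1)
    kernels = [[[small() for _ in range(KERNEL)] for _ in range(KERNEL)]
               for _ in range(2)]
    return {"kernels": kernels,
            "weights": [small() for _ in range(1 + n_extra)],
            "bias": small()}


rng = random.Random(0)
# Two made-up cluster images with normalised ADC values in [0, 1).
inner = [[rng.random() if 2 <= r <= 4 and 3 <= c <= 5 else 0.0
          for c in range(PATCH)] for r in range(PATCH)]
outer = [[rng.random() if 3 <= r <= 5 and 2 <= c <= 4 else 0.0
          for c in range(PATCH)] for r in range(PATCH)]
# Extra inputs (all hypothetical): x, y, z of both hits plus two module IDs,
# scaled to be of order one.
extra = [0.03, 0.01, 0.10, 0.07, 0.02, 0.21, 0.4, 0.6]
params = random_params(len(extra), rng)
print(f"score = {doublet_score(inner, outer, extra, params):.3f}")
```

A real model would have many filters and layers and would be trained on simulated doublets with a deep learning framework, but the interface stays the same: two small images plus a few numbers in, one probability out.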

A good model will need to satisfy these two requirements:

• It should be precise. In practice, we want to keep at least 99% of the true tracks.

• It should be fast. The model must be able to filter the doublets in real time.

Both requirements are hard constraints that cannot be relaxed. If we lose too many seeds, we reduce the quality of the data, undermining all the subsequent analysis that will be performed offline on the stored data.

On the other hand, if the inference is too slow, the model cannot be used during data collection and it is useless.
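The first requirement translates naturally into a choice of threshold on the model output. The sketch below shows one simple way to pick it, assuming we have model scores and true/fake labels for a labelled validation sample: take the highest threshold that still keeps the required fraction of true doublets, then measure how many fake doublets it rejects. The function names and the synthetic scores are illustrative only.

```python
import math
import random


def threshold_for_efficiency(scores, labels, min_efficiency=0.99):
    """Highest threshold that still keeps `min_efficiency` of true doublets."""
    true_scores = sorted((s for s, y in zip(scores, labels) if y == 1),
                         reverse=True)
    if not true_scores:
        raise ValueError("need at least one true doublet")
    n_keep = math.ceil(min_efficiency * len(true_scores))
    return true_scores[n_keep - 1]


def evaluate(scores, labels, threshold):
    """Return (efficiency on true doublets, fraction of fakes rejected)."""
    n_true = sum(labels)
    n_fake = len(labels) - n_true
    if n_true == 0 or n_fake == 0:
        raise ValueError("need both true and fake doublets")
    kept_true = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    kept_fake = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return kept_true / n_true, 1 - kept_fake / n_fake


# Synthetic scores: true doublets tend to score high, fakes tend to score low.
rng = random.Random(1)
labels = [1] * 1000 + [0] * 9000
scores = [rng.betavariate(5, 1) if y == 1 else rng.betavariate(1, 3)
          for y in labels]

threshold = threshold_for_efficiency(scores, labels, min_efficiency=0.99)
efficiency, rejection = evaluate(scores, labels, threshold)
print(f"threshold = {threshold:.3f}")
print(f"true doublets kept = {efficiency:.1%}, fakes rejected = {rejection:.1%}")
```

With the efficiency fixed at 99%, the figure of merit becomes the fraction of fake doublets rejected, together with the time needed to score each doublet.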

To tackle this problem we are looking at different solutions, on both the software side and the hardware side.

On the software side, we are trying different frameworks, like Caffe*, TensorFlow* and neon™.

There are also specific kinds of network, like binarized neural networks, which are designed to be much faster than standard neural networks but require a specialized implementation to work efficiently at lower numerical precision.

On the hardware side, we are trying different solutions, like many-core processors, GPUs and FPGAs. In particular, we plan to do extensive benchmarking with the Intel® Xeon Phi™ processor and Intel's new FPGA architecture specialized for machine learning inference.
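The reason binarized networks can be so fast is that, once weights and activations are restricted to -1 and +1, a dot product reduces to an XOR and a bit count. The sketch below shows that trick in plain Python, with the sign vectors packed into integers. It is a generic illustration of the idea and not code from any of the frameworks mentioned above.

```python
def pack_signs(values):
    """Pack the signs of `values` into an int: bit i is 1 where values[i] >= 0."""
    bits = 0
    for i, v in enumerate(values):
        if v >= 0:
            bits |= 1 << i
    return bits


def binary_dot(a_bits, b_bits, n):
    """Dot product of two {-1, +1} vectors of length n stored as bit masks.

    Positions where the bits differ contribute -1, the others +1, so the
    result is (n - mismatches) - mismatches.
    """
    mismatches = bin(a_bits ^ b_bits).count("1")
    return n - 2 * mismatches


def reference_dot(a, b):
    """Same quantity computed the slow way, on explicit -1/+1 values."""
    def sign(v):
        return 1 if v >= 0 else -1
    return sum(sign(x) * sign(y) for x, y in zip(a, b))


weights = [0.4, -1.2, 0.3, -0.1, 2.0, -0.7, 0.0, 0.9]
activations = [1.0, -0.5, -2.0, 0.2, 0.1, -0.3, 0.8, -0.4]

fast = binary_dot(pack_signs(weights), pack_signs(activations), len(weights))
print("binarized dot product:", fast)
print("reference:            ", reference_dot(weights, activations))
```

On real hardware the XOR and the bit count each map to single instructions operating on 64 or more values at once, which is where the speed-up comes from, at the price of throwing away everything but the sign.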

Source: https://software.intel.com/en-us/blogs/2017/08/17/track-reconstruction-with-deep-learning-at-the-cern-cms-experiment