How Intel Xeon Phi Processors Benefit Machine Learning/Deep Learning Apps and Frameworks

Machine learning (ML) is quickly coming of age. Today, we’re able to feed very large amounts of data to machine learning applications that learn to predict outcomes with a high degree of accuracy. The accuracy of deep learning (DL) models, one class of ML models, generally improves as the training dataset grows. As trillions of connected devices send data to the system, datasets can reach into the hundreds of terabytes.

We’re seeing the results of this machine learning evolution in the form of self-driving cars, real-time fraud detection, social networks that recognize who’s in your vacation photos … The list is long and promises to include every industry.

Let’s lift the hood and see what makes the second-generation Intel Xeon Phi product family so well suited to handling ML workloads. I’ll also share some of our early performance test results from running ML workloads on a single-node Intel Xeon Phi processor-based system and on a 128-node Intel Xeon Phi processor-based cluster. Finally, I’ll talk about the work we’ve been doing to optimize our software libraries and several popular open-source ML frameworks for x86 architectures.

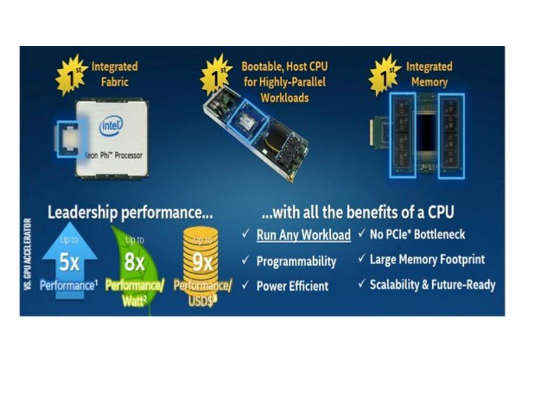

Intel® Xeon Phi™ Processor Features

In designing the second-generation Intel Xeon Phi chip, we created a massively multicore processor that is available in a self-boot socket. This eliminates the need to run an OS on a separate host and pass data across a PCIe* slot. (However, for those who prefer using the latest Intel Xeon Phi chip as a co-processor, a PCIe card version will be available shortly.)

The Intel Xeon Phi Processor x200 has up to 72 processor cores, each with two Intel® Advanced Vector Extensions 512 (Intel® AVX-512) SIMD processing units for improved per-core floating-point performance. This benefits the operations at the heart of popular ML algorithms, such as floating-point multiply and fused multiply-add (FMA). The Intel Xeon Phi Processor x200 delivers up to 6 teraflops of compute power. That multicore, multithreaded power is coupled with an on-package, high-bandwidth memory subsystem (Multi-Channel DRAM, or MCDRAM) and an integrated fabric technology called Intel® Omni-Path Architecture (Intel® OPA).
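As a rough sanity check on the figures above, theoretical peak throughput can be estimated from the core count, the number of vector units per core, and the vector width. The sketch below is only an illustration: the core and vector-unit counts come from the text, but the 1.3 GHz clock and the choice of single precision are assumptions made here, not numbers stated in this post.

```python
def peak_sp_teraflops(cores: int, vpus_per_core: int, clock_ghz: float) -> float:
    """Estimate theoretical single-precision peak (TFLOPS) for AVX-512 cores."""
    lanes = 512 // 32      # 16 single-precision values fit in one 512-bit vector
    flops_per_fma = 2      # a fused multiply-add counts as one multiply plus one add
    flops_per_cycle = cores * vpus_per_core * lanes * flops_per_fma
    return flops_per_cycle * clock_ghz / 1000.0  # GFLOPS -> TFLOPS


# 72 cores and 2 vector units per core are from the text.
# The 1.3 GHz clock is an assumed value chosen for illustration only.
estimate = peak_sp_teraflops(cores=72, vpus_per_core=2, clock_ghz=1.3)
print(f"Estimated peak: {estimate:.2f} TFLOPS")
```

Under those assumptions the estimate lands close to the "up to 6 teraflops" quoted above. Sustained performance on real workloads will be lower than any theoretical peak.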

The high-bandwidth integrated memory (up to 16 GB of MCDRAM) helps feed data to the cores very quickly and supplements platform memory of up to 384 GB of commodity DDR4. Programmers can manage this memory explicitly, deciding which data goes into MCDRAM and when. MCDRAM also offers flexibility to those who prefer to cache their data so they don’t have to think about memory management. (MCDRAM can be configured as a third-level cache, as allocatable non-uniform memory access (NUMA) memory, or as a hybrid that is part cache, part memory.)
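When MCDRAM is used as allocatable memory, the programmer decides which data lives in the fast 16 GB tier and which stays in DDR4. The sketch below illustrates one possible planning heuristic (hottest buffers first). It is not an Intel API: the `Buffer` type, the `hotness` score, and the buffer sizes are all hypothetical, and only the two capacities come from the text.

```python
from dataclasses import dataclass

MCDRAM_GB = 16.0   # capacity from the text
DDR4_GB = 384.0    # capacity from the text


@dataclass
class Buffer:
    name: str
    size_gb: float
    hotness: float  # hypothetical score: higher means accessed more often


def plan_placement(buffers, mcdram_gb=MCDRAM_GB, ddr4_gb=DDR4_GB):
    """Greedy plan: place the hottest buffers in MCDRAM, spill the rest to DDR4."""
    placement = {}
    free_mcdram, free_ddr4 = mcdram_gb, ddr4_gb
    for buf in sorted(buffers, key=lambda b: b.hotness, reverse=True):
        if buf.size_gb <= free_mcdram:
            placement[buf.name] = "MCDRAM"
            free_mcdram -= buf.size_gb
        elif buf.size_gb <= free_ddr4:
            placement[buf.name] = "DDR4"
            free_ddr4 -= buf.size_gb
        else:
            raise MemoryError(f"{buf.name} does not fit in either tier")
    return placement


# Illustrative sizes only; real models and datasets will differ.
plan = plan_placement([
    Buffer("weights", 4.0, hotness=10.0),
    Buffer("gradients", 4.0, hotness=9.0),
    Buffer("activations", 6.0, hotness=8.0),
    Buffer("training_data", 200.0, hotness=1.0),
])
for name, tier in plan.items():
    print(f"{name}: {tier}")
```

In cache mode none of this planning is needed, which is the convenience the paragraph above describes. The explicit approach trades that convenience for control over what stays in fast memory.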

When processing large ML/DL workloads, the ability to scale from one node to hundreds or thousands of nodes is crucial. With integrated Intel OPA fabric, the Intel Xeon Phi Processor x200 can scale in near-linear fashion across cores and threads. On a coding level, the fabric makes it possible to efficiently fetch data from remote storage to a local cache with minimal programming and at high speed.

Taken together, these innovations deliver very good machine learning and deep learning time-to-train. For example, when training AlexNet on 128 nodes of the Intel Xeon Phi Processor x200, we achieve about a 50x reduction in training time relative to a single node. For GoogLeNet training, we achieve a scaling efficiency of 87% at 32 nodes of the Intel Xeon Phi Processor x200, 38% better than the best such published data to date.
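The two results above are stated in different terms: one as a speedup, the other as a scaling efficiency. The sketch below converts between them using the common definition of efficiency as speedup divided by node count. It assumes that the "50x reduction in training time" is a 50x speedup over a single node and that the 87% figure uses this same definition. Neither assumption is confirmed in the text.

```python
def scaling_efficiency(speedup: float, nodes: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    return speedup / nodes


def speedup_from_efficiency(efficiency: float, nodes: int) -> float:
    """Speedup over a single node implied by a given scaling efficiency."""
    return efficiency * nodes


# AlexNet: about 50x faster on 128 nodes (figures from the text).
alexnet_efficiency = scaling_efficiency(speedup=50.0, nodes=128)

# GoogLeNet: 87% scaling efficiency at 32 nodes (figures from the text).
googlenet_speedup = speedup_from_efficiency(efficiency=0.87, nodes=32)

print(f"AlexNet efficiency at 128 nodes: {alexnet_efficiency:.1%}")
print(f"GoogLeNet implied speedup at 32 nodes: {googlenet_speedup:.1f}x")
```

Read this way, the two results are not directly comparable: they involve different networks and different node counts, and efficiency typically falls as node count rises.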

The tradeoff is that your apps must be parallelized to take advantage of this massively parallel multicore, multithreaded architecture. Otherwise, you must be willing to accept single-core, single-thread performance.

One factor that offsets single-core, single-thread performance is the fact that each core in an Intel Xeon Phi Processor x200 has multiple vector processing units, so overall you have more compute density. As a result, if your workload can benefit from high levels of vector and thread parallelism, the Intel Xeon Phi processor packs more compute into a smaller area and, therefore, draws less power than alternative solutions.
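The tradeoff described above can be made concrete with Amdahl's law, a standard model that this post does not itself invoke. It bounds the speedup of a program by the fraction of its work that can run in parallel. The sketch below treats each of 72 cores as one worker and ignores hardware threads and vector units, which is a simplifying assumption.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of the work runs in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# 72 workers stands in for 72 cores; the parallel fractions are illustrative.
for fraction in (0.0, 0.5, 0.95, 0.99):
    bound = amdahl_speedup(fraction, workers=72)
    print(f"{fraction:.0%} parallel -> at most {bound:.1f}x on 72 workers")
```

A fully serial program (0% parallel) gets no benefit from the extra cores, which is the single-core, single-thread case the text warns about. Even a small serial remainder caps the achievable speedup well below the core count.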

Binary Compatibility

From a software perspective, second-generation Intel Xeon Phi processors are binary compatible with x86 architecture processors, including the Intel Xeon® E5 family of processors. That means you only have to modernize your code once to improve your time to train on both second-generation Intel Xeon Phi processors and your existing Intel Xeon processor-based servers. By “modernization” I don’t mean that you have to write ninja parallel code. We’re making it easier to parallelize ML/DL code for general-purpose x86 architecture-based CPUs with tools like the popular Intel® Math Kernel Library (Intel® MKL). The Intel® MKL 2017 Beta release, available now, includes new extensions for optimizing deep neural networks. In addition, we’re hard at work optimizing popular open-source ML frameworks such as Caffe* and Theano* for x86 architectures. These and other efforts, even without hardware upgrades, are producing performance boosts on the order of 30x for DL applications.


Source: https://software.intel.com/en-us/blogs/2016/06/20/how-xeon-phi-processors-benefit-machine-and-deep-learning-apps-frameworks