Cash Recognition for the Visually Impaired Using Deep Learning

Cash Recognition for the Visually Impaired is a project dedicated to blind people living in Nepal. Unlike the currency of many other countries, Nepalese banknotes carry no special markings to help blind people identify them. I’ve wanted to address this problem for a very long time with a solution that is realistic and easy to use in everyday life.

Survey

Previously, people have tried to solve this problem using techniques such as optical character recognition (OCR), image processing, etc.

While these techniques all seem promising, they have not performed well in real-life scenarios. They require very high-resolution images of the currency notes taken under proper lighting conditions, which we know from experience is not always possible.

Consider the use case: a blind person is travelling on a local bus or in a taxi and needs to know which note he is handing to the driver. Today he has to ask what note is in his hand and rely on the honesty of the driver. Obviously, this approach has many flaws. It makes the blind person dependent on the driver’s answer, which cannot always be trusted, so he could easily fall victim to fraud. This applies not only to travel, but also to supermarkets, retail stores, and any other place where monetary transactions occur.

In order to solve this problem, we require a solution that will work anywhere and does not require perfect picture quality for classification of such monetary notes. This is where deep learning comes in.

The goal of this project is to build a smartphone app, for both Android and iOS, that uses deep learning image classification behind the scenes to help a blind person recognize monetary notes accurately and independently, so he can handle monetary transactions on his own without depending on others. The app will recognize the note he is holding and play a sound in Nepali or English, depending on the configuration, to let him know the value of the recognized note.

Approach

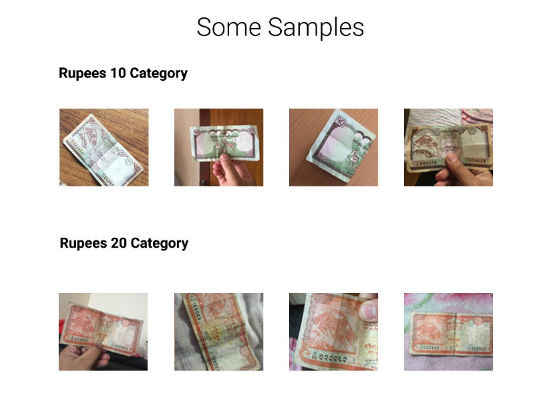

To build a solution that works on realistic images taken with smartphones, the deep neural network has to be trained on such images. So, to start, I took pictures of notes from only two categories: Rs.10 notes and Rs.20 notes. That way I would quickly know whether the approach I am taking is right or not. I gathered about 200 pictures for each category.
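
As a rough illustration of how a small two-category dataset like this might be organised before training, here is a minimal sketch. The file names, the 80/20 train/validation split, and the `split_dataset` helper are all assumptions made for the example; none of them are details taken from the project.

```python
import random


def split_dataset(paths_by_label, val_fraction=0.2, seed=0):
    """Split image paths into train and validation lists, per category.

    paths_by_label maps a label such as "Rs.10" to a list of file paths.
    Splitting each category separately keeps both categories represented
    in the same proportion in the training and validation sets.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val = [], []
    for label, paths in sorted(paths_by_label.items()):
        shuffled = list(paths)
        rng.shuffle(shuffled)
        n_val = round(len(shuffled) * val_fraction)
        val += [(path, label) for path in shuffled[:n_val]]
        train += [(path, label) for path in shuffled[n_val:]]
    return train, val


# Hypothetical file names standing in for about 200 photos per category.
example_paths = {
    "Rs.10": [f"rs10/img_{i:03d}.jpg" for i in range(200)],
    "Rs.20": [f"rs20/img_{i:03d}.jpg" for i in range(200)],
}
train_items, val_items = split_dataset(example_paths)
```

With 200 images per category and a 20% hold-out, this leaves 320 images for training and 80 for validation.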

After collecting the data for these two categories, I needed a suitable model to train on it.

Transfer Learning

One of the most popular and useful techniques in deep learning today is transfer learning. Training a deep neural network from scratch typically requires a large dataset of images for the task at hand. Thanks to transfer learning, a small dataset is enough. We take a model that has already been trained on a huge dataset and reuse its learned weights while re-training on the small dataset that we have. That way we do not need a large dataset, and the model can still predict accurately.

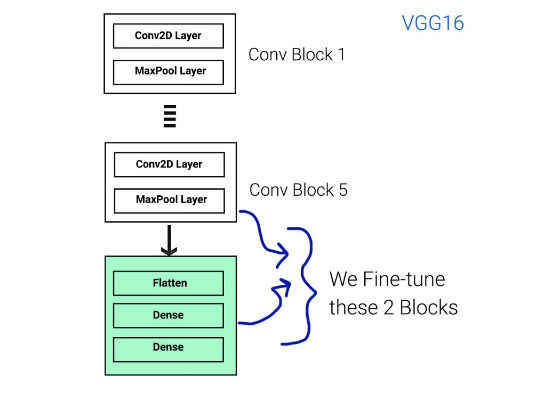

At this point I am only checking and verifying my approach, so I used the VGG16 model pre-trained on the ImageNet dataset with its 1000 categories. I fine-tuned VGG16 so that I only need to re-train the last layer of the model to reach the desired accuracy.

I trained my fine-tuned model with the following configuration for the first training run:

I used Keras with the TensorFlow backend for the code and ran the training.
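
The idea of freezing a pre-trained VGG16 and re-training only a final layer can be sketched roughly as follows. This is not the original training code: it assumes the `tf.keras` API, drops the original ImageNet classifier in favour of a new pooling layer and a two-way softmax layer, and uses an optimizer, learning rate, and input size chosen purely for illustration. The `build_finetune_model` name is hypothetical.

```python
import tensorflow as tf


def build_finetune_model(num_classes=2, weights="imagenet"):
    """Frozen VGG16 feature extractor with one trainable output layer."""
    # Convolutional part of VGG16 only; the 1000-class ImageNet head is dropped.
    base = tf.keras.applications.VGG16(
        weights=weights,
        include_top=False,
        input_shape=(224, 224, 3),
    )
    base.trainable = False  # keep the pre-trained weights fixed

    inputs = tf.keras.Input(shape=(224, 224, 3))
    x = base(inputs, training=False)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    # The only layer whose weights are updated during training.
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),  # assumed values
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_finetune_model()` downloads the ImageNet weights on first use. Input images would also need to be resized to 224x224 and passed through `tf.keras.applications.vgg16.preprocess_input` before being fed to `model.fit`.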

After training, the validation accuracy was about 97.5%, while the training accuracy was 98.6%.

From this result, we can see that my model is slightly overfitted. To reduce that, I will need more data and may have to introduce regularization into the model as well. These will be my next steps.

The App

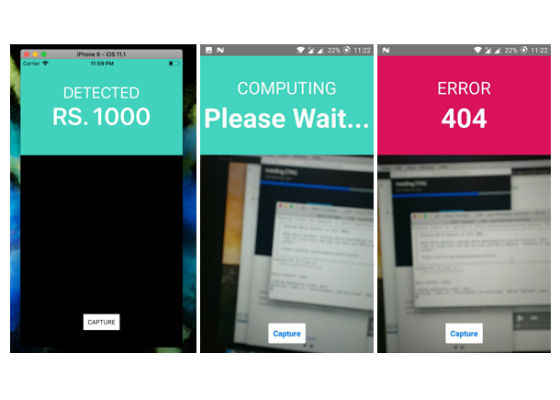

To interact with the prototype through a RESTful API for now, I have developed an MVP version of the app using React Native. Some screenshots of the app are shown here:

For now, the two-category version of the app talks to a server through a REST API, and the recognition and prediction are computed on the server machine. After prediction, the response is sent to the client app in JSON format, and displaying the label and playing the sound are handled entirely on the client side.
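
To make this split of responsibilities concrete, here is a minimal sketch of what the JSON exchange could look like. The field names (`label`, `confidence`), the sound file names, and both helper functions are assumptions made for illustration; the real client is written in React Native, and Python is used here only to show the logic.

```python
import json

# Category labels in the order the model outputs its probabilities (assumed).
LABELS = ["Rs.10", "Rs.20"]

# Hypothetical audio clips, keyed by (label, language code).
SOUND_FILES = {
    ("Rs.10", "ne"): "rs10_ne.mp3",
    ("Rs.10", "en"): "rs10_en.mp3",
    ("Rs.20", "ne"): "rs20_ne.mp3",
    ("Rs.20", "en"): "rs20_en.mp3",
}


def build_response(probabilities, labels=LABELS):
    """Server side: turn the model's class probabilities into a JSON body."""
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per label")
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return json.dumps(
        {"label": labels[best], "confidence": round(float(probabilities[best]), 4)}
    )


def pick_sound(response_body, language="ne"):
    """Client side: choose the audio clip for the configured language."""
    payload = json.loads(response_body)
    return SOUND_FILES[(payload["label"], language)]


body = build_response([0.08, 0.92])
clip = pick_sound(body, language="en")
```

Keeping the label-to-sound mapping on the client means the server response stays small and the language setting never has to leave the device.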

Next Steps

Now that I have tested my approach and found it feasible for the task I’m trying to solve, the next step is to collect data for all 7 categories of standard currency notes in Nepal.

For this project to be successful and effective, I need at least 500 images for each category.

Further, after training the model I will have to create an app and embed the model in it so that it works offline, and a blind person using the app won’t have to connect to the internet every time they need to recognize the notes they are carrying.

Both of these tasks will require dedicated time and resources to implement properly. But after successfully testing my approach on the first two categories, I am hopeful that I can solve this problem using deep learning.


Source: https://software.intel.com/en-us/blogs/2017/11/21/cash-recognition-for-the-visually-impaired-using-deep-learning