GeForce GTX 980 unveiling, Dynamic Super Resolution, MFAA and other updates from NVIDIA’s Editor’s Day conference

After a long wait the embargo has finally been lifted and we can now freely talk about the NVIDIA GeForce GTX 980, its pricing, and the new technologies that now form a part of the Maxwell architecture.

Editor's Day, NVIDIA's annual conference, might be a misnomer considering the event is actually held over two days; still, that doesn't take away from the fact that it's a celebration of gaming, from both the development side and pure hardware innovation. This time around the conference was held in beautiful Monterey Bay, California, and it was an event that would make any gaming enthusiast proud to be a part of the PC Master Race.

The biggest reveal of course was the GTX 980, NVIDIA’s top end GPU based on the Maxwell GM204 chip. Priced at Rs. 46,000, it is currently the fastest single GPU card we’ve ever tested. Hop over to our detailed NVIDIA GeForce GTX 980 review or continue reading about the exciting developments showcased at the event below.

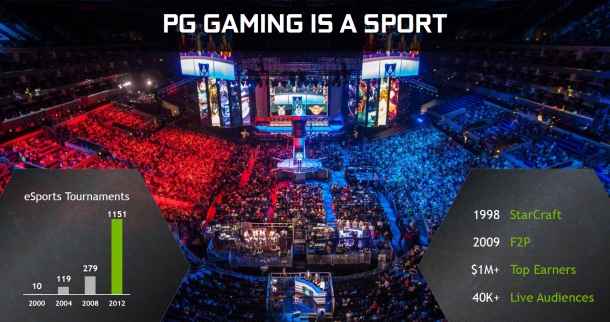

Day 1 of the conference began with Jeff Fisher, SVP, GeForce Business Unit, taking the stage and talking about how far PC gaming and eSports have come over the years. “Even if ESPN disagrees, eSports is a sport in its right, and millions of people all over the world tune in to watch it,” he said.

We couldn’t agree more considering the numbers Twitch.tv has been generating lately (and their buyout by Amazon for nearly $1 billion). Latest reports indicate the live streaming site drew 55 million monthly viewers in July 2014, a 4x growth year-on-year. Just like EPL and IPL matches, eSports, or watching (and not just playing) games, is a growing entertainment medium.

Dynamic Super Resolution (DSR)

Next up, Tom Petersen took the stage and unveiled one of the biggest features of Maxwell: Dynamic Super Resolution, or DSR. If you are familiar with supersampling (used in spatial anti-aliasing) or downsampling, where the GPU renders the game at a higher resolution and then scales the image down to the monitor’s native resolution, DSR should be easy to understand, because essentially it’s the same thing.

What used to be a hack now comes, perhaps for the first time ever, as a feature built into the driver itself. DSR lets gamers who don’t have 4K or UHD displays (yet) use the extra horsepower of their GPUs to up the gaming experience on their 1080p monitors. Plain downsampling relies on a simple box filter and can sometimes generate artifacts on textures when certain post-processing effects are applied. Dynamic Super Resolution instead uses a 13-tap Gaussian filter during the downscaling step, which is supposed to reduce or eliminate those aliasing artifacts.
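As a rough illustration of the difference between the two filters, here is a minimal sketch in Python that downsamples a small grayscale image both ways. The tap layout and weights of NVIDIA's 13-tap filter aren't described here, so the `radius` and `sigma` values and both function names are assumptions made purely for illustration.

```python
import math

def box_downsample(image, factor):
    """Average each factor x factor block of high-res pixels into one output pixel."""
    out = []
    for oy in range(len(image) // factor):
        row = []
        for ox in range(len(image[0]) // factor):
            block = [image[oy * factor + dy][ox * factor + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

def gaussian_downsample(image, factor, radius=2, sigma=1.0):
    """Weight the high-res pixels around each output pixel's centre with a Gaussian.

    radius and sigma are illustrative guesses, not NVIDIA's actual filter.
    """
    src_h, src_w = len(image), len(image[0])
    out = []
    for oy in range(src_h // factor):
        row = []
        for ox in range(src_w // factor):
            # Centre of the output pixel, expressed in high-res pixel coordinates.
            cy = (oy + 0.5) * factor - 0.5
            cx = (ox + 0.5) * factor - 0.5
            total = weight_sum = 0.0
            for sy in range(math.floor(cy) - radius, math.ceil(cy) + radius + 1):
                for sx in range(math.floor(cx) - radius, math.ceil(cx) + radius + 1):
                    if 0 <= sy < src_h and 0 <= sx < src_w:
                        dist_sq = (sy - cy) ** 2 + (sx - cx) ** 2
                        weight = math.exp(-dist_sq / (2 * sigma ** 2))
                        total += weight * image[sy][sx]
                        weight_sum += weight
            row.append(total / weight_sum)
        out.append(row)
    return out

# A 4x4 "render" with a hard vertical edge, scaled down to 2x2.
high_res = [[0, 0, 0, 1] for _ in range(4)]
box = box_downsample(high_res, 2)
gauss = gaussian_downsample(high_res, 2)
```

The box filter only ever looks inside each 2x2 block, while the Gaussian footprint reaches into neighbouring blocks, so the hard edge gets spread more gently across output pixels.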

DSR, as Tom explained, is especially useful for games like Dark Souls, which don't scale much when more GPU power is thrown at them. With a good card you’ll be able to max out all settings pretty quickly and still get more than playable framerates, leaving GPU headroom to spare. This is where DSR can be useful.

The reason DSR helps get rid of, or at least reduce, texture popping, jaggies, or what is sometimes known as scintillating textures is pretty logical and straightforward to understand. Look at the image below:

Think of the diagonal lines as blades of grass being rendered. The image on the left is clearly sampled with a relatively loose pixel grid, while the one on the right has a more closely packed grid. The resultant render, when passed through the Gaussian filter, looks something like this:

You’ll see that in the algorithmic decision of whether or not to shade a pixel, the one on the right hits more spots with an affirmative answer.

Of course, DSR is not without flaws: in-game menus will sometimes look smaller, so UI scaling will be an issue at times. Ah well. Can’t have everything that one’s heart desires, can we now?

There’s also a bit of confusion on whether this feature will be available on older, non-Maxwell based GPUs. In the Q&A session following his presentation on this topic, Tom said that it’s currently launching only on Maxwell but a rollout over other product lines is likely. The GTX 980 whitepaper however says, “While it’s compatible with all GeForce GPUs, the best performance can be seen when using a GeForce GTX 980. Going forward we could potentially use Maxwell’s more advanced sampling control features, like programmable sample positions and interleaved sampling, to further improve Dynamic Super Resolution for owners of GM2xx GPUs.”

To see DSR in action watch our highlights video below and skip to the 4 min 18 secs mark. If you want you could also choose to watch the entire video which begins with me practicing facial gymnastics in front of a badly done greenscreen. Moving on…

MFAA

The next big piece of technology announced was closely related to DSR. While DSR tries to improve how games scale visually, MFAA tries to better the performance aspect. In a nutshell, it gives the user the same experience as 4X MSAA at the compute cost of 2X MSAA. How does it achieve that? Well, that's a little complicated. Put on your Portal face and read on.

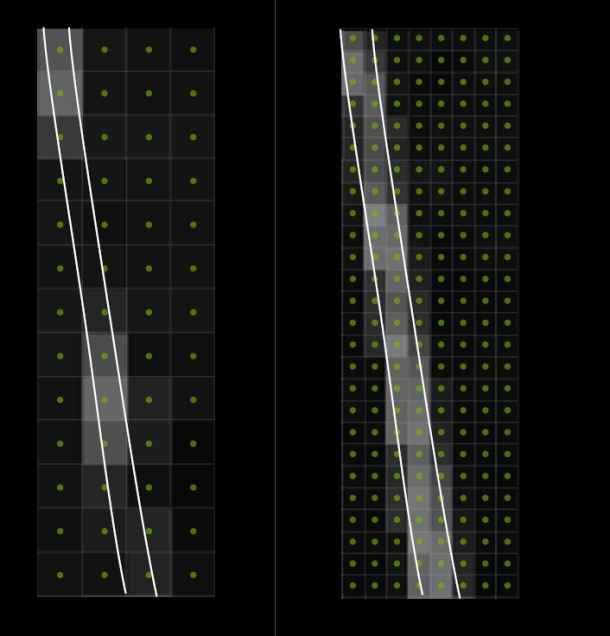

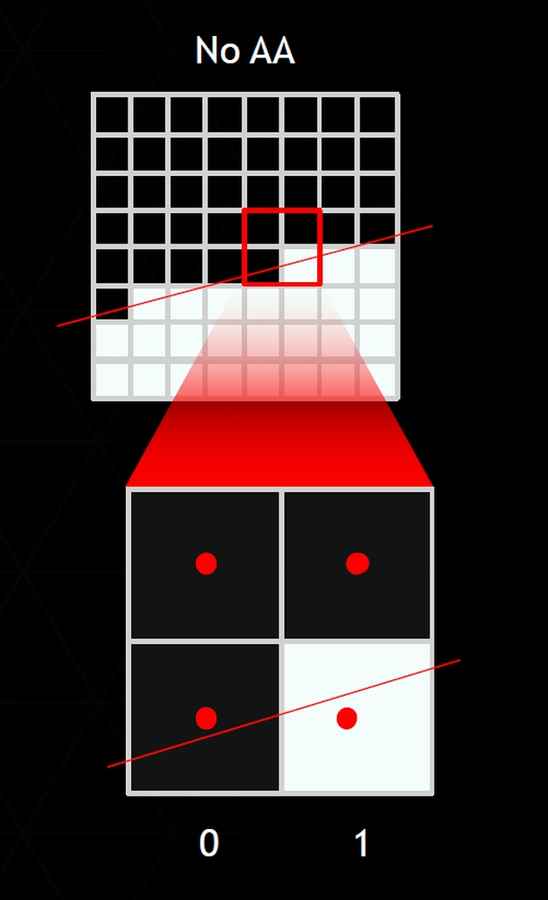

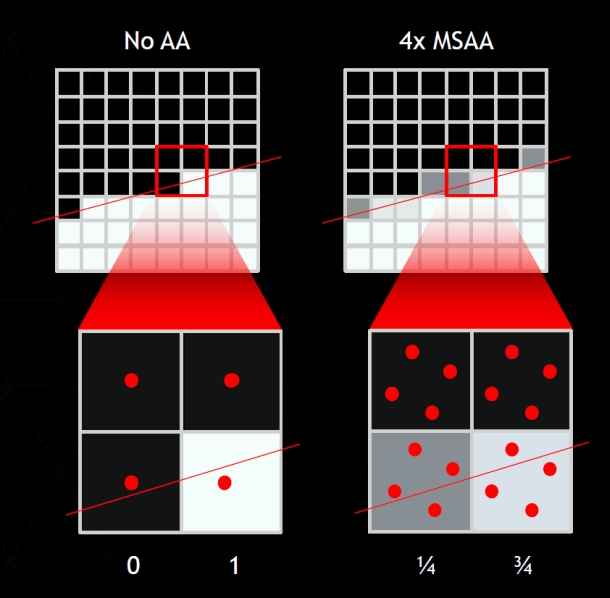

MFAA stands for Multi Frame-sampled Anti Aliasing and is a flexible sampling method available to Maxwell GPUs that tries to push AA quality and efficiency up a notch. MFAA alternates AA sample patterns both temporally and spatially to produce the best image quality. Let’s try to understand this with a couple of simplified images. See this jagged line being rendered?

The pixel grid for that line looks something like this when magnified. With no AA, whether a pixel gets shaded or not is a binary decision: if the line is above the center of the pixel it gets shaded, and if it is below it does not. As shown in the image below:

Now what happens with 4x MSAA? The shading depends on coverage. Each pixel has four sample points: a pixel with three sample points above the line and one below gets 1/4 coverage, while one with three below and one above gets 3/4 coverage and is hence shaded darker. Like so:
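The coverage rule described above can be sketched in a few lines of Python. This is a simplified illustration, not how the hardware works: the four sample offsets in `SAMPLES_4X` are hypothetical positions inside a unit pixel, and the edge is modelled as a straight line `y = slope * x + intercept` with everything under it shaded.

```python
# Hypothetical sample offsets inside a unit pixel; real hardware patterns may differ.
SAMPLES_4X = [(0.375, 0.125), (0.875, 0.375), (0.125, 0.625), (0.625, 0.875)]

def is_below_line(x, y, slope, intercept):
    """True if the point lies in the shaded region under y = slope * x + intercept."""
    return y < slope * x + intercept

def no_aa_coverage(px, py, slope, intercept):
    """No AA: a binary decision based on the pixel centre alone."""
    return 1.0 if is_below_line(px + 0.5, py + 0.5, slope, intercept) else 0.0

def msaa_coverage(px, py, slope, intercept, samples=SAMPLES_4X):
    """MSAA: the fraction of sample points that fall under the line."""
    covered = sum(is_below_line(px + sx, py + sy, slope, intercept)
                  for sx, sy in samples)
    return covered / len(samples)

# A horizontal edge a quarter of the way up pixel (0, 0):
# three samples sit above the line and one below it.
low_edge = (no_aa_coverage(0, 0, 0.0, 0.25), msaa_coverage(0, 0, 0.0, 0.25))

# The same edge moved up: three samples below the line, one above.
high_edge = (no_aa_coverage(0, 0, 0.0, 0.7), msaa_coverage(0, 0, 0.0, 0.7))
```

With no AA the pixel flips straight from unshaded to fully shaded as the edge crosses its centre, whereas the four-sample version passes through the intermediate 1/4 and 3/4 shades.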

What MFAA does is algorithmically flip or mirror the sample positions used for pixel coverage from frame to frame, then combine the results with a temporal synthesis filter to create a coverage pattern identical to 4x MSAA. This saves on hardware compute cost. Have a look at the image below:
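To make the idea concrete, here is a minimal Python sketch under some loud assumptions: the scene is static, each frame takes only two samples per pixel, the odd frame uses a horizontally mirrored copy of the even frame's pattern, and the "temporal synthesis filter" is reduced to a plain average of two consecutive frames. NVIDIA's actual filter and sample positions are not described here, so every name and number below is hypothetical.

```python
# Hypothetical 2-sample pattern for even frames, and its mirror image for odd frames.
PATTERN_EVEN = [(0.375, 0.125), (0.625, 0.875)]
PATTERN_ODD = [(1 - x, y) for x, y in PATTERN_EVEN]

def coverage(px, py, slope, intercept, samples):
    """Fraction of sample points lying under the edge y = slope * x + intercept."""
    hits = sum((py + sy) < slope * (px + sx) + intercept for sx, sy in samples)
    return hits / len(samples)

def mfaa_coverage(px, py, slope, intercept):
    """Average two consecutive 2-sample frames (a stand-in for the temporal filter)."""
    even = coverage(px, py, slope, intercept, PATTERN_EVEN)
    odd = coverage(px, py, slope, intercept, PATTERN_ODD)
    return (even + odd) / 2

# A steep edge clipping one corner of pixel (0, 0).
slope, intercept = 2.0, -0.9
even_only = coverage(0, 0, slope, intercept, PATTERN_EVEN)
blended = mfaa_coverage(0, 0, slope, intercept)

# Reference: a single frame using all four sample positions at once.
four_sample = coverage(0, 0, slope, intercept, PATTERN_EVEN + PATTERN_ODD)
```

For a static edge the two-frame average matches the four-sample reference while each frame only pays for two samples. Anything that moves between frames breaks that equivalence, which is presumably the part a real temporal filter has to handle.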

VXGI

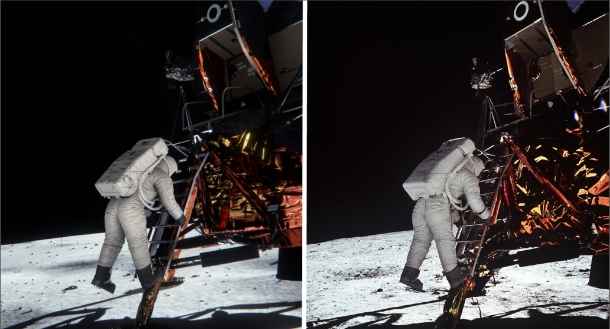

NVIDIA is confident that Voxel Global Illumination (VXGI) is a huge step towards the holy grail of real-time computer graphics: path tracing. Rendered scenes start to look closer and closer to reality when GPUs can process millions of light rays, millions of bounces, and the resultant loss of light intensity at each bounce, all in real time. A tall order indeed. To work around this, game designers use techniques such as pre-baking textures or ambient occlusion (commonly referred to as the poor man’s global illumination). With voxelization, NVIDIA hopes to bring in-game lighting one step closer to this dream of “true” path tracing.

To highlight this milestone the company took a rather novel approach. It took on one of the most controversial topics in the repertoire of conspiracies, the supposed faking of the moon landing, and decided to disprove the whackjobs once and for all by recreating the entire moon landing scene in real time using UE4. NVIDIA engineers took the iconic photograph of Buzz Aldrin stepping off the lunar lander and recreated everything right down to the materials, the landscape, and even the bits of duct tape used on the lander. They achieved an almost photorealistic effect, except something wasn’t quite right. After much experimentation they realised they hadn’t accounted for the light bouncing off the person who took the picture: Neil Armstrong. Take a look at the screenshot and the actual photograph below. Can you tell which is which?

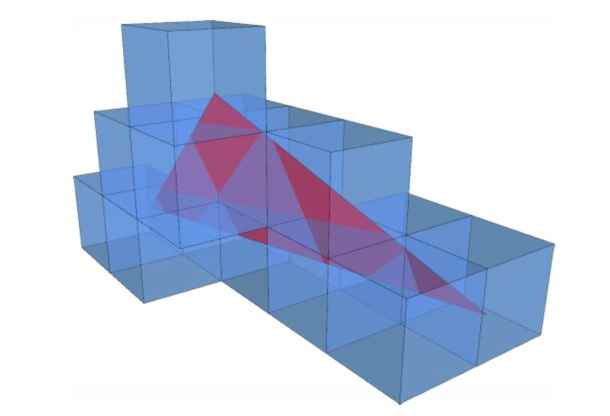

Coming back to VXGI, the simplest way to explain the process would be this:

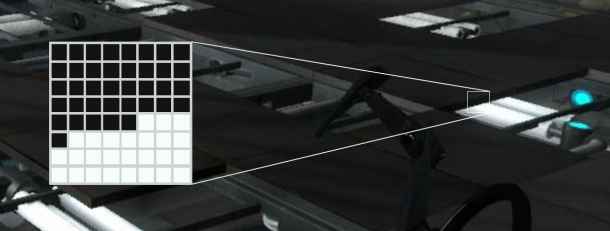

Begin by converting the entire scene into voxels (three-dimensional pixels). On a smaller scale, we can show this applied to a single triangle, as in the image below:

Each cube that the triangle touches has been represented here. It is the job of each voxel (or cube) to store two types of information: how much of the voxel is occupied by the actual object, and the intensity and direction of the light being bounced off that part of the object. The bounced light is calculated using a method called cone tracing. It’s more of an approximation, or “big picture” approach, to replicating diffused light, but it does result in very realistic approximations of global illumination at a much lower computational cost than standard ray tracing.
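The voxelization step can be sketched roughly as follows. This is a simplified Python illustration, not NVIDIA's method: it finds the touched voxels by scattering sample points over the triangle (which can miss voxels the triangle barely clips), and it uses each voxel's share of the samples as a crude stand-in for occupancy. The `Voxel` class, `voxelize_triangle`, and the `steps` parameter are all hypothetical names, and the `radiance` field is left empty because the lighting and cone tracing passes are not covered here.

```python
import math
from dataclasses import dataclass

@dataclass
class Voxel:
    occupancy: float = 0.0             # crude stand-in for how much of the voxel is occupied
    radiance: tuple = (0.0, 0.0, 0.0)  # placeholder for bounced light; not computed here

def voxelize_triangle(a, b, c, voxel_size=1.0, steps=32):
    """Return {voxel index: Voxel} for the voxels hit by sample points on triangle abc."""
    counts = {}
    total = 0
    for i in range(steps + 1):
        for j in range(steps + 1 - i):
            # Barycentric weights (u, v, w) sweep a regular grid over the triangle.
            u, v = i / steps, j / steps
            w = 1.0 - u - v
            point = [u * a[k] + v * b[k] + w * c[k] for k in range(3)]
            key = tuple(math.floor(coord / voxel_size) for coord in point)
            counts[key] = counts.get(key, 0) + 1
            total += 1
    return {key: Voxel(occupancy=n / total) for key, n in counts.items()}

# A small triangle lying flat in the z = 0.5 plane, on a grid of unit-sized voxels.
grid = voxelize_triangle((0.1, 0.1, 0.5), (1.7, 0.1, 0.5), (0.1, 1.7, 0.5))
touched = sorted(grid)
```

A later lighting pass would fill in `radiance` for each touched voxel, and cone tracing would then gather that stored light from the voxel grid instead of tracing individual rays through the original geometry.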

VR Direct

Latency is a near killer when it comes to Virtual Reality. In fact, it is one of the prime causes of the motion sickness associated with Virtual Reality equipment. To put it simply, the disconnect or lag between you moving your head and the scene being rendered in front of your eyes causes your brain to go “wut?” and behave erratically. VR Direct is NVIDIA’s attempt to bring down this latency in order to truly take VR mainstream. The first and most important step would be to up the resolution of current VR implementations to at least 4K; when the screen is so close to your eye, one megapixel per eye is just not enough. For this, the latency between the GPUs in SLI (of course you’ll need two of these powerplants) should be zero or near zero.

Using things like MFAA and Asynchronous Warp, VR Direct has managed to bring down latency in the compute stack to 25 ms. The other interesting feature of VR Direct is Auto Stereo, which is supposed to let you play games that weren’t designed for VR in VR!

Day 2 of Editor’s Day was split down the middle between explaining the Maxwell architecture and the developer updates to NVIDIA’s GameWorks platform. There were also a few speakers from prominent game studios, such as Eve Online CTO Halldor Fannar and a couple of folks from Ubisoft who showed the audience a work-in-progress build of Assassin's Creed Unity. The tessellation on the new AC looked remarkable. Unfortunately we weren't allowed to record the demo. Also noteworthy was the Eve Online Valkyrie demo on Oculus. Mind-blowing to say the least, it had me doing multiple barrel rolls just to see if I got sick. I’m happy to report that I didn’t.

Max McMullen, Principal Development Lead for Direct3D, gave much-awaited details about DX12 features including Rasterizer Ordered Views, Typed UAV Load, Volume Tiled Resources and Conservative Raster. There was also mention of increased scalability across multiple CPU cores in the API. Woohoo! Err… Finally.

Updates to GameWorks

Some of the never-seen-before updates to GameWorks included Turf Effects, which adds realism to the rendering of grass. Each blade of grass now has geometry, which means it can interact with other objects. So, for example, if a sniper has flattened some grass where he was camping, the patch of bent grass would be visible and remain persistent. Check out the video below to get a better idea:

PhysX FleX added viscosity to what was already available. This led to a demo that left the audience rather squeamish, with one and only one thing on everyone’s mind: a particular application of this tech which we will not mention, but which you can easily decipher for yourself by watching the demo below:

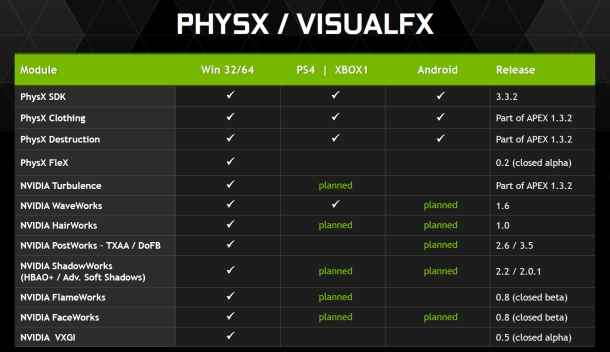

An interesting update was that some of the features of PhysX / Visual FX would be ported to other platforms such as PS4, Xbox One and Android in the future. Most of the cross-portable effects rendered on PhysX are CPU intensive. Initially, NVIDIA said, these ran on CUDA; now they run on DirectCompute, so any platform that supports DirectCompute would get this repertoire of effects.

The chart below shows details:

What was missing…?

Although DSR, MFAA and the like were noteworthy developments, there wasn’t anything as groundbreaking as G-Sync from last year’s Editor’s Day. Being gamers and geeks we always expect more, and we would’ve liked to see some other stuff too. Maybe a sneak peek behind Valve’s iron curtain would’ve made the event so much sweeter. Steam boxes were conspicuously missing from the event. Another small disappointment was Jen-Hsun Huang’s relative absence. Although he was seen interacting with journalists and pressing flesh all around, he didn’t do the keynote address or any of the big reveals. We missed his trademark leather jacket and his cheery on-stage flamboyance. Speaking of celebrities, we didn’t have really big names like John Carmack this year. Who knows, maybe they tried to rope in Gabe Newell and it fell through. That could explain the lack of Steam (forgive the pun, it’s been a long night).

Siddharth Parwatay

Siddharth a.k.a. staticsid is a bigger geek than he'd like to admit. Sometimes even to himself.