Microsoft’s new privacy policy clarifies that third-party contractors listen to Cortana audio

Microsoft's Privacy Policy updated to reflect third-party access to audio

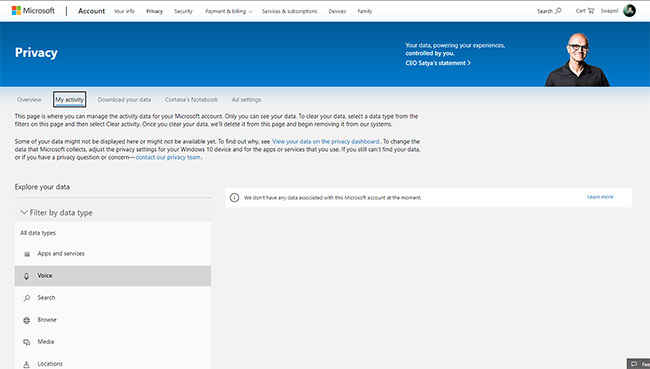

Find your recordings in the Privacy Dashboard

Voice-based assistants and smart speakers have become exceedingly popular in the consumer space. That popularity gave rise to growing concerns about “companies listening in,” and companies assured consumers that this wasn’t the case. It turned out that it was. Companies then said that the voice data was not being stored, which also turned out to be untrue. Now the third blow in this space comes with the exposé that third-party human reviewers have been listening to bits of those audio recordings. Facebook was caught doing it, as was Apple. Now Microsoft has come forward stating that it too allows third parties to listen in on some of your voice data.

The voice recordings in question come from queries made on Cortana, Microsoft’s own AI assistant. Cortana is a part of every computer that currently runs Windows 10, and unless it has been specifically turned off, the AI is always listening for your commands. This voice data, Microsoft says, can sometimes be shared with third-party contractors after it has been anonymized. Microsoft justifies this through a statement added to its Privacy Policy: “To build, train, and improve the accuracy of our automated methods of processing (including AI), we manually review some of the predictions and inferences produced by the automated methods against the underlying data from which the predictions and inferences were made,” the policy reads. “For example, we manually review short snippets of a small sampling of voice data we have taken steps to de-identify to improve our speech services, such as recognition and translation.”

While it is concerning that every laptop running Windows 10 potentially allows Microsoft to pick up snippets of conversations, all is not bleak. Microsoft does not offer a way to opt out of having third parties listen to your audio, but you can turn off Cortana if you wish. You can also visit the Microsoft Privacy Dashboard, where you will find all the voice snippets that the company may have stored, along with a transcript of each recording based on what Microsoft or its third-party contractors think it contains.

At this point, it is hardly a surprise that Microsoft is also having third parties review snippets of audio picked up from your Cortana queries. Amazon was caught doing the same thing after it had denied even storing audio recordings. Unfortunately, due to a lack of laws governing how voice data is handled, consumers are left at the mercy of tech companies and how they choose to protect consumer data.

Digit NewsDesk

Digit News Desk writes news stories across a range of topics. Getting you news updates on the latest in the world of tech.